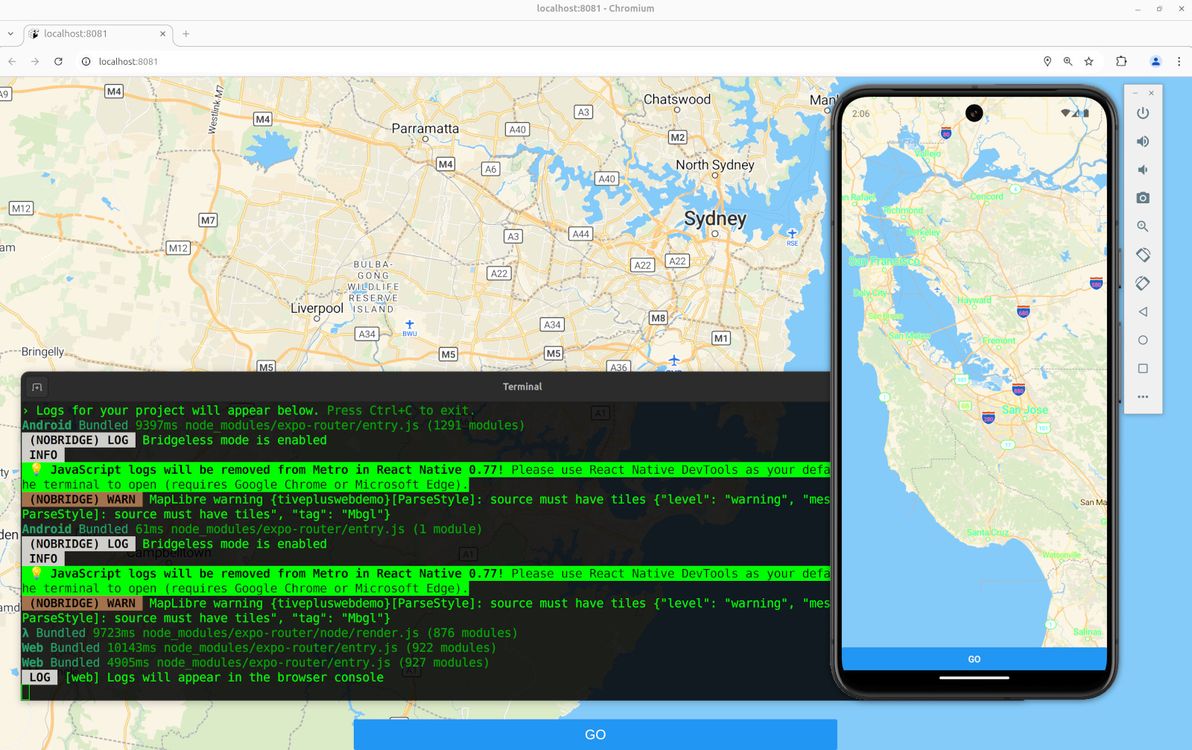

Below is my humble lil' guide to getting MapLibre working for both native and web in Expo. Note: if you want to skip the step-by-step shpiel, and you just want a working example with all the code, feel free to head straight to the Expo MapLibre native + web demo on GitHub.

Map library options

First of all, a quick rundown of the options that one has at one's disposal, when wanting to add a map to an Expo app.

The simplest and the most recommended option is to use react-native-maps. This is the only solution that works with Expo Go, and it's the only one that's documented in the official Expo docs.

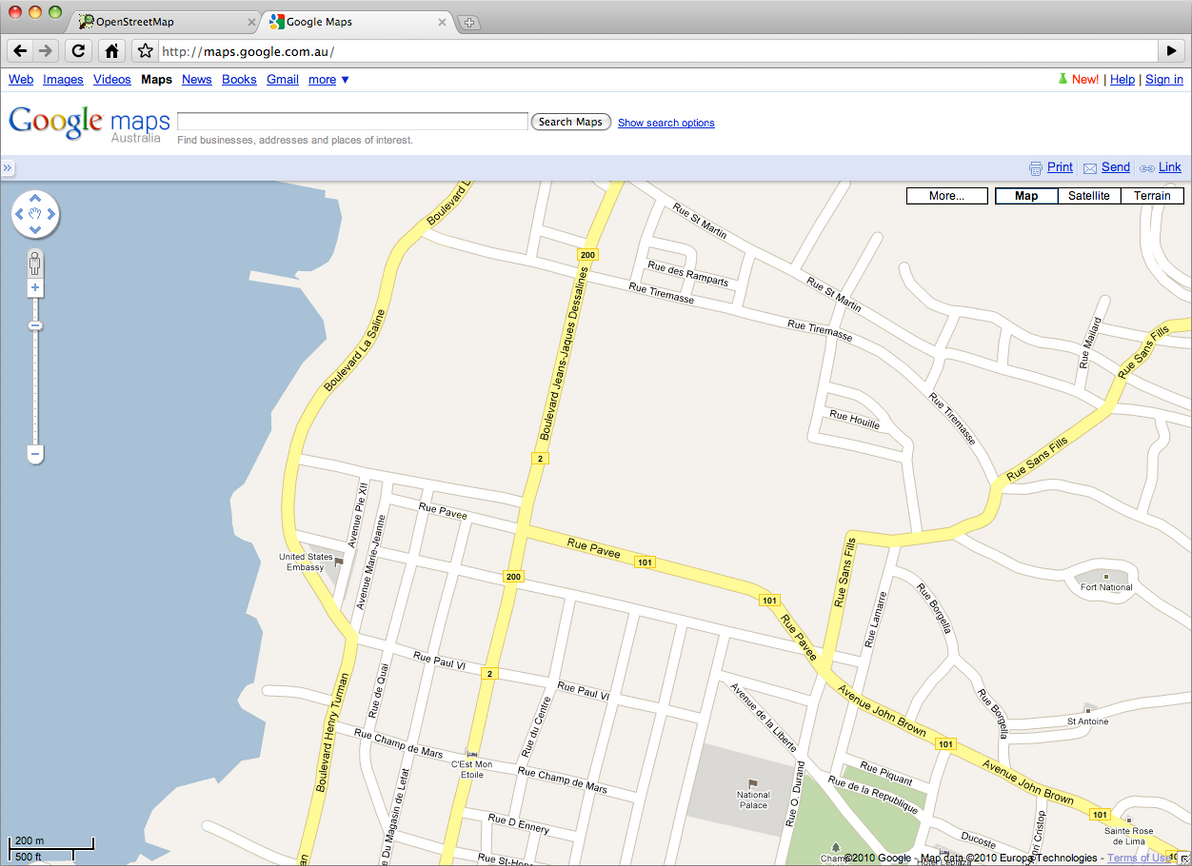

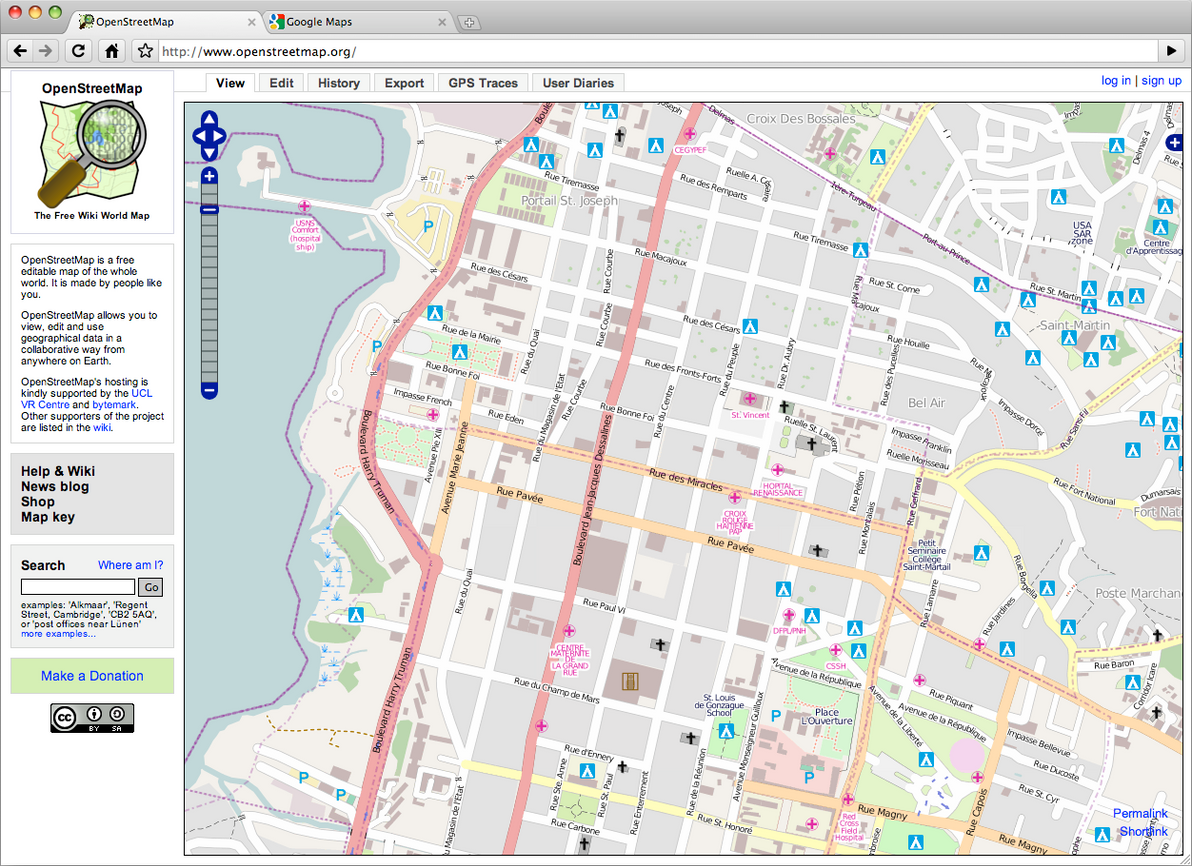

However, it's explicitly stated that react-native-maps isn't web-compatible, so if you used it and you also wanted maps on web, your only choice would be to fall back to something like react-google-maps for web. Also, you'd (potentially) have to deal with Apple Maps on iOS vs Google Maps on Android. And – my main reason for steering clear of this option – you'd have to live with the these-days-horrific pricing and draconian ToS of the Google Maps API.

The next option is to use rnmapbox. This is the solution that I instinctively chose first up, and that I stuck with for quite a while, mainly because (for the past several years) I've become accustomed to using Mapbox instead of Google Maps anyway, for maps on old-skool web sites. Plus, rnmapbox claims to (somewhat) support Expo Web.

Unfortunately, "somewhat" is in my opinion an overly optimistic assessment of rnmapbox's web support – basically, instead of trying to go down that route, you should instead fall back to react-map-gl with mapbox-gl-js for web. Plus, I was surprised to learn that Mapbox is no longer the mapping provider of choice for hobbyists, since it decided to stop open-sourcing its mapping library.

Which led me to MapLibre, which is a fork of Mapbox (v1) before the folks at Mapbox decided to release v2 with a non-open-source license. So, with MapLibre (plus MapTiler), I don't have to worry about disagreeable pricing / ToS. And I get basically the same map library on native and web. Although not exactly the same library – it inherits the limitations of the Mapbox libraries, so you need to use maplibre-react-native for native, and fall back to react-map-gl with maplibre-gl-js for web.

Map features

What I needed, and what I'm demo'ing here, is a pretty simple map. It shows the user's location on load (if the user grants location permissions, otherwise it falls back to showing latitude / longitude 0,0 on load). And when the user presses the "Go" button, it grabs the current centre position of the map, and shows the latitude / longitude coordinates of that centre position. That's it!

You're likely to need more functionality than that for a map in your own app. Hopefully this provides you with a humble base to start off from. Good luck getting other bells and whistles working (for native and web)!

Walkthrough

To start off, you'll need an Expo project. If you don't already have one, you can create one with:

    npx create-expo-app@latest MyApp

Then, you'll need to add both the native and the web mapping libraries as dependencies:

    npm install --save @maplibre/maplibre-react-native
    npm install --save maplibre-gl
    npm install --save react-map-gl

I like to put everything inside a src/ directory, which is supported but which is not the default for Expo. And I like a structure with various other directories under src/ (see link). My example code from here on assumes that structure. Feel free to suit to your tastes.

You'll need to sign up to MapTiler for an API key. Edit your .env.local file to include this:

    EXPO_PUBLIC_MAPTILER_API_KEY=yourmaptilerkeygoeshere

Define this variable in e.g. src/core/config.ts:

    export const MAPTILER_API_KEY = process.env.EXPO_PUBLIC_MAPTILER_API_KEY;

And define this constant in e.g. src/core/constants.ts:

    export const MAPTILER_STYLE_URL =
      "https://api.maptiler.com/maps/streets-v2/style.json?key=MAPTILER_API_KEY";

The code from here on depends on various utility components, for styling of text and for positioning of elements. I won't go through all those components in this article, I leave it to you to refer to the src/components/ directory.

Before we get into the map code, we need to request location permission, and to get the user's current location (if the user grants permission). I originally had all of this inside the map components, but I then refactored the meat of it out into a utility function, which is very similar to the code in the expo-location docs, and which you can put at e.g. src/core/locationUtils.ts:

    import * as Location from "expo-location";
    import { Dispatch, SetStateAction } from "react";

    export const setCurrentLocationIfAvailable = async (
      setLocation: Dispatch<SetStateAction<Location.LocationObjectCoords>>,
      setIsLocationUnavailable: Dispatch<SetStateAction<boolean>>,
    ) => {
      let { status } = await Location.requestForegroundPermissionsAsync();

      if (status !== "granted") {
        setIsLocationUnavailable(true);
        return;
      }

      try {
        const currentLocation = await Location.getCurrentPositionAsync({});
        setLocation(currentLocation.coords);
      } catch (_e) {
        setIsLocationUnavailable(true);
      }
    };

Now for the map itself. Let's start by putting the code to render the map for native into a component. This code goes at e.g. src/components/NativeMapView.tsx:

    import { StyleSheet } from "react-native";
    import * as Location from "expo-location";
    import MapLibreGL from "@maplibre/maplibre-react-native";
    import { Camera, MapView, MapViewRef } from "@maplibre/maplibre-react-native";
    import { Ref, useEffect, useState } from "react";
    import { MAPTILER_API_KEY } from "../core/config";
    import { MAPTILER_STYLE_URL } from "../core/constants";
    import { setCurrentLocationIfAvailable } from "../core/locationUtils";
    import { LoadingText } from "./LoadingText";

    interface NativeMapViewProps {
      mapRef?: Ref<MapViewRef>;
    }

    export const NativeMapView = (props: NativeMapViewProps) => {
      const [location, setLocation] =
        useState<Location.LocationObjectCoords | null>(null);
      const [isLocationUnavailable, setIsLocationUnavailable] = useState(false);

      useEffect(() => {
        MapLibreGL.setAccessToken(null);
        setCurrentLocationIfAvailable(setLocation, setIsLocationUnavailable);
      }, []);

      if (!location && !isLocationUnavailable) {
        return <LoadingText />;
      }

      return (
        <MapView
          ref={props.mapRef}
          style={styles.map}
          styleURL={MAPTILER_STYLE_URL.replace(
            "MAPTILER_API_KEY",
            MAPTILER_API_KEY,
          )}
        >
          <Camera
            centerCoordinate={
              location ? [location.longitude, location.latitude] : [0, 0]
            }
            zoomLevel={location ? 12 : 2}
            animationDuration={0}
          />
        </MapView>
      );
    };

    const styles = StyleSheet.create({
      map: {
        flex: 1,
      },
    });

This just renders the map, centred and zoomed at the user's current location, without any additional behaviour defined. It's important that we only import from @maplibre/maplibre-react-native in this file, and not in any other files that get loaded for both native and web, because web will freak out if it sees that import.
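The "user's location at zoom 12, otherwise 0,0 at zoom 2" rule is plain data, so it could live in a tiny helper that imports nothing from any map library. What follows is only a sketch: getInitialView, Coords and InitialView are hypothetical names, and the zoom levels are simply the ones used in this article.

```typescript
// Hypothetical shared helper: it imports nothing from a map library,
// so it would be safe to load on both native and web.
interface Coords {
  latitude: number;
  longitude: number;
}

interface InitialView {
  // [longitude, latitude], the same order used for centerCoordinate above.
  center: [number, number];
  zoom: number;
}

const getInitialView = (coords: Coords | null): InitialView =>
  coords
    ? { center: [coords.longitude, coords.latitude], zoom: 12 }
    : { center: [0, 0], zoom: 2 };

console.log(getInitialView({ latitude: -33.87, longitude: 151.21 }));
console.log(getInitialView(null));
```

Keeping the fallback in one place means that a change to the default zoom only has to be made once.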

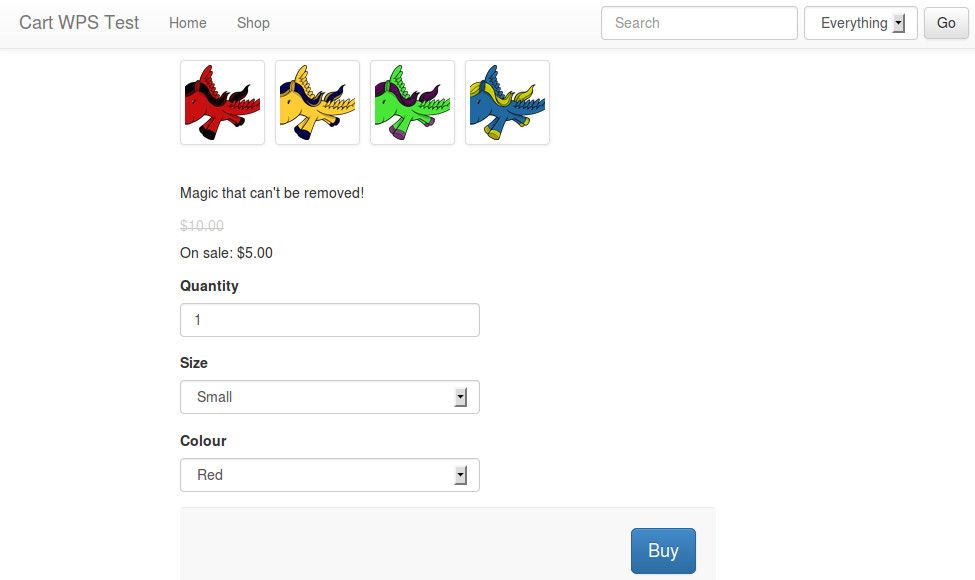

Next comes the code to render the map for web into a component. This code goes at e.g. src/components/WebMapView.tsx:

    import { Ref, useEffect, useState } from "react";
    import Map, { MapRef } from "react-map-gl/maplibre";
    import * as Location from "expo-location";
    import { MAPTILER_API_KEY } from "../core/config";
    import { MAPTILER_STYLE_URL } from "../core/constants";
    import { setCurrentLocationIfAvailable } from "../core/locationUtils";
    import { LoadingText } from "./LoadingText";

    interface WebMapViewProps {
      mapRef?: Ref<MapRef>;
    }

    export const WebMapView = (props: WebMapViewProps) => {
      const [location, setLocation] =
        useState<Location.LocationObjectCoords | null>(null);
      const [isLocationUnavailable, setIsLocationUnavailable] = useState(false);

      useEffect(() => {
        setCurrentLocationIfAvailable(setLocation, setIsLocationUnavailable);
      }, []);

      if (!location && !isLocationUnavailable) {
        return <LoadingText />;
      }

      return (
        <Map
          ref={props.mapRef}
          initialViewState={{
            latitude: location ? location.latitude : 0,
            longitude: location ? location.longitude : 0,
            zoom: location ? 12 : 2,
          }}
          style={{ width: "100%", height: "100%" }}
          mapStyle={MAPTILER_STYLE_URL.replace(
            "MAPTILER_API_KEY",
            MAPTILER_API_KEY,
          )}
        />
      );
    };

Once again, this just renders the map, no additional behaviour. And it's important that we only import from react-map-gl/maplibre in this file, because native will freak out if it sees that import.
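Both map components swap the MAPTILER_API_KEY placeholder for the real key inline. Environment variables can be undefined at runtime, so it may be worth moving that substitution into a small helper that fails loudly when the key is missing. A minimal sketch, assuming a hypothetical buildStyleUrl function that isn't part of the demo repo:

```typescript
// Hypothetical helper (not in the demo repo): swaps the placeholder in a
// style URL template for the real API key, and throws if the key is unset.
const KEY_PLACEHOLDER = "MAPTILER_API_KEY";

const buildStyleUrl = (
  template: string,
  apiKey: string | undefined,
): string => {
  if (!apiKey) {
    throw new Error("EXPO_PUBLIC_MAPTILER_API_KEY is not set");
  }
  // encodeURIComponent guards against stray characters in the key.
  return template.replace(KEY_PLACEHOLDER, encodeURIComponent(apiKey));
};

// "abc123" is a made-up key, for illustration only.
const exampleUrl = buildStyleUrl(
  "https://api.maptiler.com/maps/streets-v2/style.json?key=MAPTILER_API_KEY",
  "abc123",
);
console.log(exampleUrl);
```

With something like this in place, each component would pass buildStyleUrl(MAPTILER_STYLE_URL, MAPTILER_API_KEY) to its style prop instead of calling replace directly.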

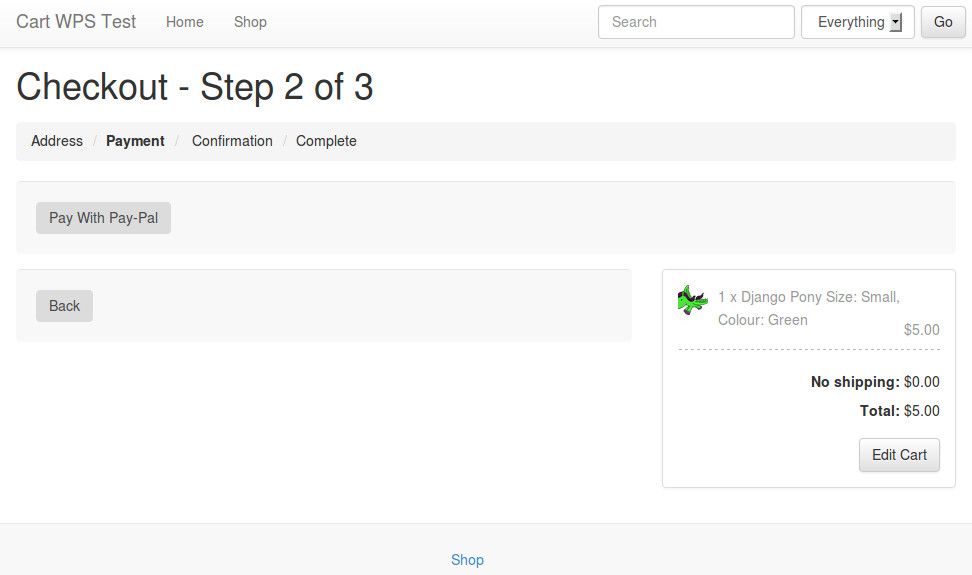

Now we're going to render the map together with a "Go" button, and we're going to add some additional behaviour, such that when the button is pressed, we grab the current centre coordinates of the map, and then trigger an event using those coordinates. First, the native code for all that, at e.g. src/components/LatLonMap.tsx:

    import { useRef } from "react";
    import { Button } from "react-native";
    import { CenteredContainer } from "./CenteredContainer";
    import { FloatingContainer } from "./FloatingContainer";
    import { FullWidthContainer } from "./FullWidthContainer";
    import { FullWidthAndHeightContainer } from "./FullWidthAndHeightContainer";
    import { NativeMapView } from "./NativeMapView";
    import { MapViewRef } from "@maplibre/maplibre-react-native";

    interface LatLonMapProps {
      onPress?: (latitude: number, longitude: number) => Promise<void>;
    }

    export const LatLonMap = (props: LatLonMapProps) => {
      const mapRef = useRef<MapViewRef>(null);

      return (
        <FullWidthAndHeightContainer>
          <NativeMapView mapRef={mapRef} />
          <FloatingContainer>
            <CenteredContainer>
              <FullWidthContainer>
                <Button
                  onPress={async () => {
                    if (!mapRef.current) {
                      throw new Error("Missing mapRef");
                    }
                    const center = await mapRef.current.getCenter();
                    if (props.onPress) {
                      await props.onPress(center[1], center[0]);
                    }
                  }}
                  title="Go"
                />
              </FullWidthContainer>
            </CenteredContainer>
          </FloatingContainer>
        </FullWidthAndHeightContainer>
      );
    };

And the web code for all that, at e.g. src/components/LatLonMap.web.tsx:

    import { useRef } from "react";
    import { Button } from "react-native";
    import { CenteredContainer } from "./CenteredContainer";
    import { FloatingContainer } from "./FloatingContainer";
    import { FullWidthContainer } from "./FullWidthContainer";
    import { FullWidthAndHeightContainer } from "./FullWidthAndHeightContainer";
    import { WebMapView } from "./WebMapView";
    import { MapRef } from "react-map-gl/maplibre";

    interface LatLonMapProps {
      onPress?: (latitude: number, longitude: number) => Promise<void>;
    }

    export const LatLonMap = (props: LatLonMapProps) => {
      const mapRef = useRef<MapRef>(null);

      return (
        <FullWidthAndHeightContainer>
          <WebMapView mapRef={mapRef} />
          <FloatingContainer>
            <CenteredContainer>
              <FullWidthContainer>
                <Button
                  onPress={async () => {
                    if (!mapRef.current) {
                      throw new Error("Missing mapRef");
                    }
                    const center = mapRef.current.getCenter();
                    if (props.onPress) {
                      await props.onPress(center.lat, center.lng);
                    }
                  }}
                  title="Go"
                />
              </FullWidthContainer>
            </CenteredContainer>
          </FloatingContainer>
        </FullWidthAndHeightContainer>
      );
    };

A few key things to note with the above code samples. First of all, we're using Expo's built-in system of platform-specific file extensions, to write both a native version (the "default" version ending in .tsx) and a web version (ending in .web.tsx) of the same component. We're importing our NativeMapView component in one version, and our WebMapView component in the other version. The mapRef variable is of a different type, and has a slightly different interface, in each version.

And, finally, we're defining onPress as a prop that gets passed in (and that gets given the coordinates as plain number parameters when it's called), rather than defining what happens on button press directly in this component, so that we can implement the "on button press" behaviour just once in the calling code (and so that the calling code, rather than this component, gets to decide what happens on button press, thus making this component more reusable).
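The two getCenter calls hand back differently shaped values. In the code above, the native one is awaited and read as center[1] and center[0], while the web one is read as center.lat and center.lng. If you'd rather keep that difference out of the button handlers, a pair of normalising helpers is one option. This is a sketch under those assumptions only; LatLon, fromNativeCenter and fromWebCenter are made-up names.

```typescript
interface LatLon {
  latitude: number;
  longitude: number;
}

// Native: the article's code treats the centre as [longitude, latitude].
const fromNativeCenter = (center: readonly number[]): LatLon => {
  const [longitude, latitude] = center;
  if (longitude === undefined || latitude === undefined) {
    throw new Error("Expected [longitude, latitude]");
  }
  return { latitude, longitude };
};

// Web: the article's code reads center.lat and center.lng.
const fromWebCenter = (center: { lat: number; lng: number }): LatLon => ({
  latitude: center.lat,
  longitude: center.lng,
});

console.log(fromNativeCenter([151.21, -33.87]));
console.log(fromWebCenter({ lat: -33.87, lng: 151.21 }));
```

Both calls print the same latitude and longitude, which is the point: the calling code never needs to know which platform produced the value.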

We're now done writing components. Let's use our new native- and web-compatible map component on our home screen – code goes at e.g. src/app/index.tsx:

    import { router } from "expo-router";
    import { LatLonMap } from "../components/LatLonMap";

    export default function HomeScreen() {
      return (
        <LatLonMap
          onPress={async (latitude: number, longitude: number) => {
            router.replace(`/lat-lon?lat=${latitude}&lon=${longitude}`);
          }}
        />
      );
    }

The above code is where we implement the "on button press" behaviour. In this case, the behaviour is to redirect to the /lat-lon screen, and to pass the latitude and longitude values as URL parameters.
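Coordinates travel through the URL as strings, so it can help to build and parse them in one place, and to reject values that don't parse as numbers. A hedged sketch follows; buildLatLonHref and parseCoordinate are hypothetical helpers, not something that expo-router provides.

```typescript
// Hypothetical helpers for passing coordinates through URL parameters.
const buildLatLonHref = (latitude: number, longitude: number): string =>
  `/lat-lon?lat=${encodeURIComponent(String(latitude))}` +
  `&lon=${encodeURIComponent(String(longitude))}`;

// Parses one parameter back into a number, rejecting missing or non-numeric input.
const parseCoordinate = (value: unknown, label: string): number => {
  if (typeof value !== "string") {
    throw new Error(`${label} is not a string`);
  }
  const parsed = Number.parseFloat(value);
  if (Number.isNaN(parsed)) {
    throw new Error(`${label} is not a number`);
  }
  return parsed;
};

const exampleHref = buildLatLonHref(-33.8688, 151.2093);
console.log(exampleHref); // prints "/lat-lon?lat=-33.8688&lon=151.2093"
console.log(parseCoordinate("151.2093", "lon")); // prints 151.2093
```

The NaN check matters because parseFloat quietly returns NaN for input such as "abc", which would otherwise be displayed to the user as-is.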

Lucky last step is to then display the latitude and longitude to the user – code goes at e.g. src/app/(app)/lat-lon.tsx:

    import { Button, StyleSheet } from "react-native";
    import { useLocalSearchParams } from "expo-router";
    import { Link } from "expo-router";
    import { ThemedText } from "../../components/ThemedText";
    import { ThemedView } from "../../components/ThemedView";

    export default function LatLonScreen() {
      const { lat, lon } = useLocalSearchParams();

      if (typeof lat !== "string") {
        throw new Error("lat is not a string");
      }

      if (typeof lon !== "string") {
        throw new Error("lon is not a string");
      }

      const latVal = parseFloat(lat);
      const lonVal = parseFloat(lon);

      return (
        <ThemedView style={styles.container}>
          <ThemedText>Latitude: {latVal}</ThemedText>
          <ThemedText>Longitude: {lonVal}</ThemedText>
          <Link href="/" asChild>
            <Button onPress={() => {}} title="Back" />
          </Link>
        </ThemedView>
      );
    }

    const styles = StyleSheet.create({
      container: {
        flex: 1,
        alignItems: "center",
        justifyContent: "center",
        padding: 20,
      },
    });

Map done

There you have it: a map that looks and behaves virtually the same, on both native and web, implemented in a single codebase, with minimal platform-specific code required. I haven't thoroughly looked into how performant, how buggy, or overall how effective this solution is on all platforms, but hey, it's a start. Hope this helps you in your own Expo mapping endeavours.

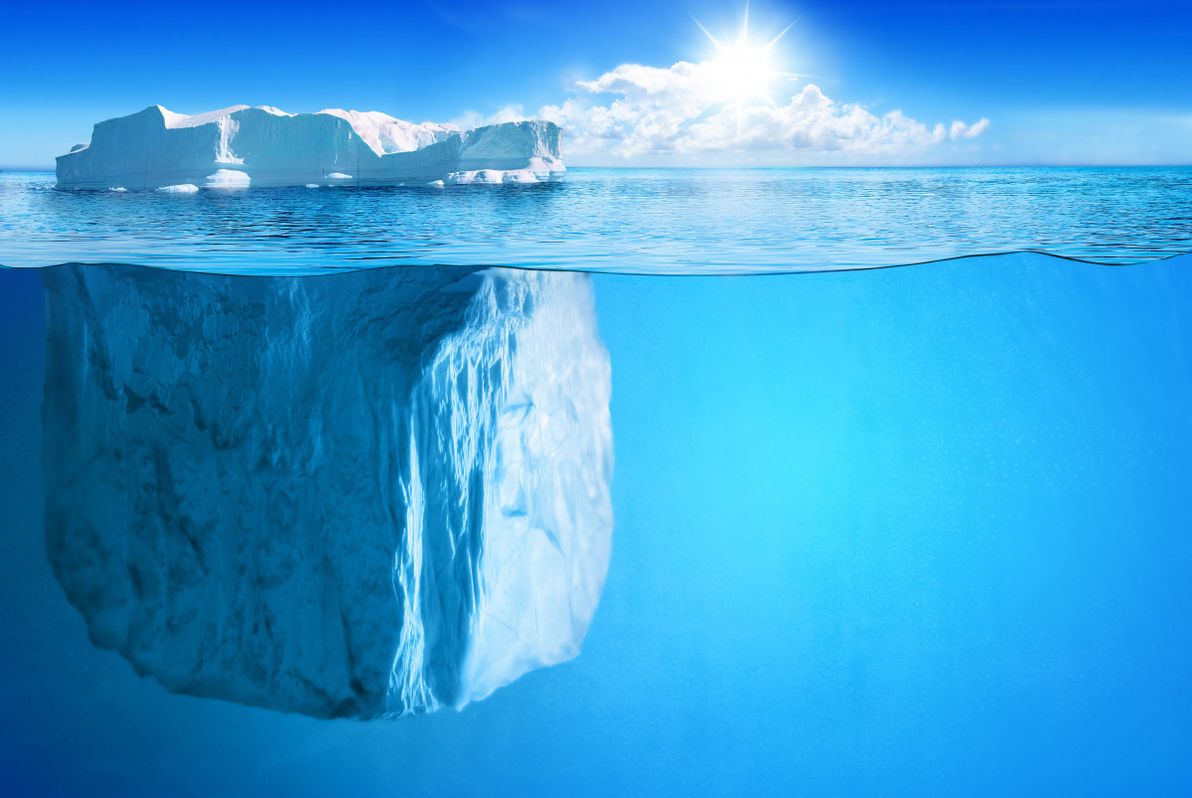

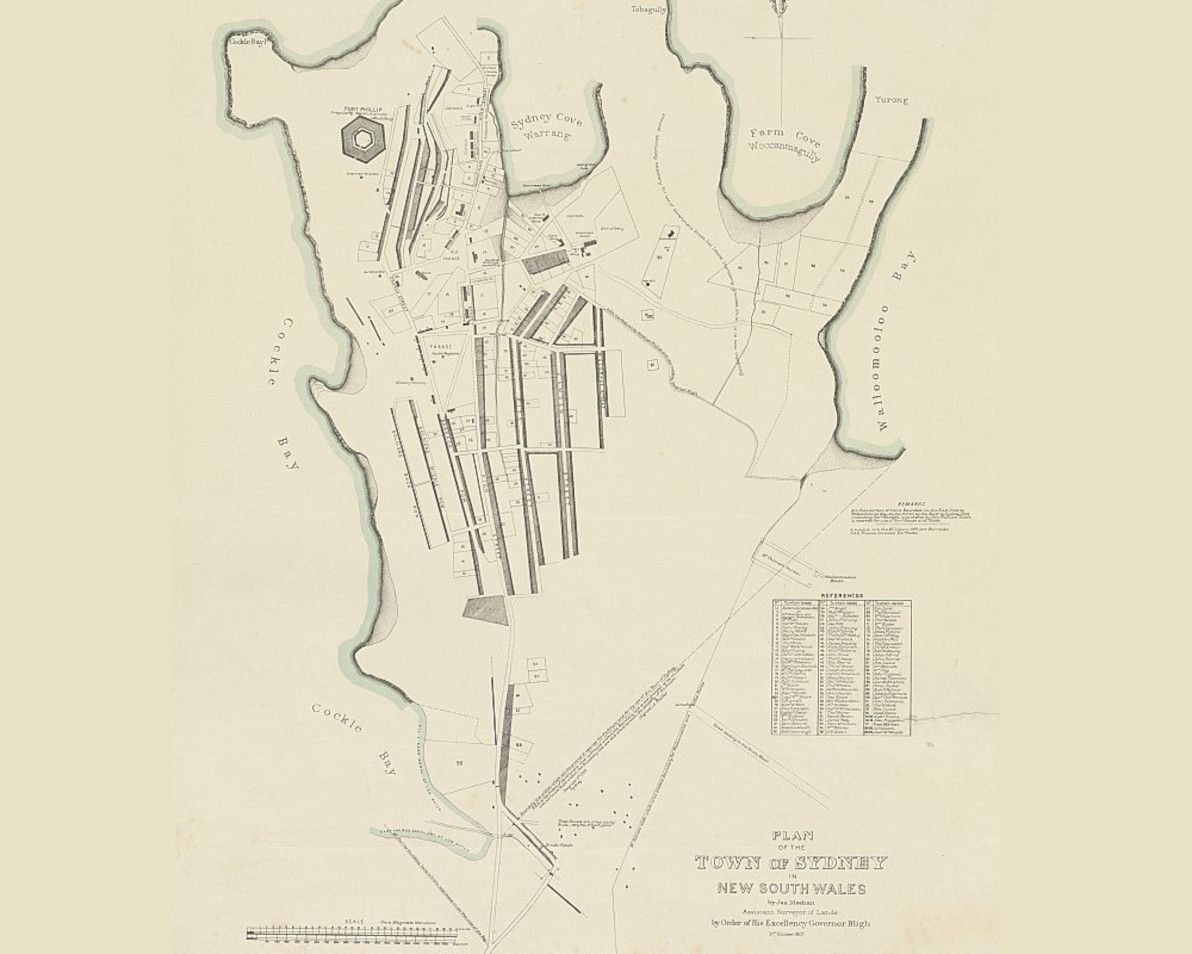

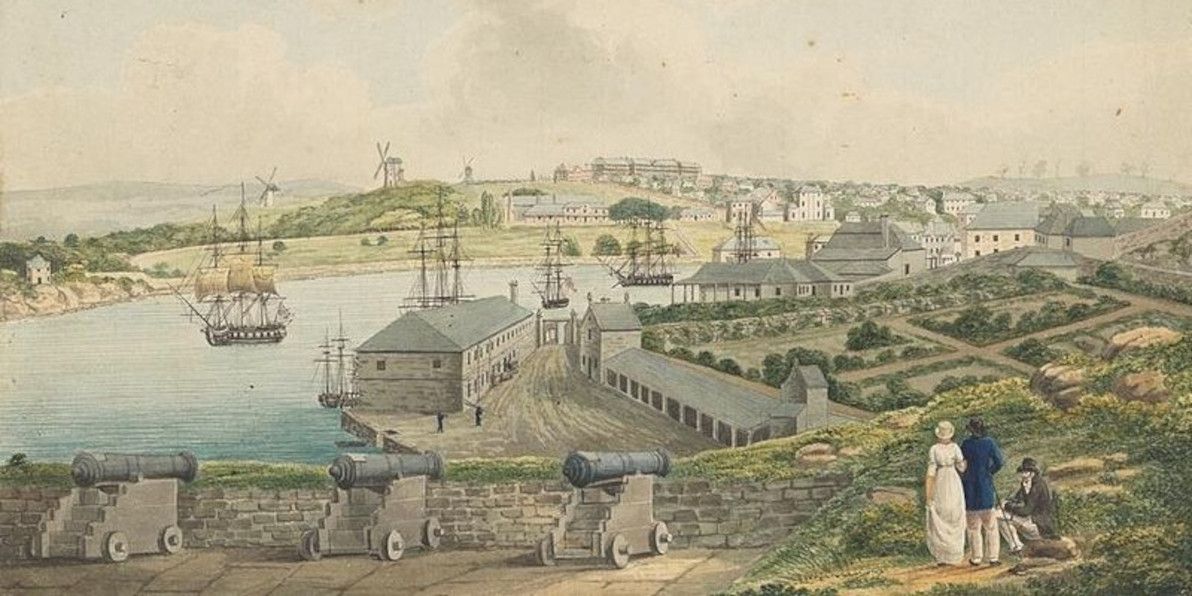

To be fair to those folks, it's true that – as they claim – the water level of various landmarks around the world, such as the Statue of Liberty, Plymouth Rock, and (in my own stomping ground of Sydney Harbour) Fort Denison, has not "visibly" risen since they were erected.

The fact, per the consensus of reputable scientists, is that the global average sea level has risen by 15-25cm (6-10") since 1900. Now, I'm willing to entertain the notion that, alright, for all practical purposes, that's not much. And that's just the average. So I consider it not unreasonable to concede that there are numerous places in the world today, where the sea level has barely, if at all, risen.

But.

Image source: PxHere.

The thing is, sea level rise lags behind global warming by several decades. So (if you'll excuse my hitting-rather-close-to-home choice of pun), what we've seen so far is just the tip of the iceberg. We've already locked in another 10-25cm (4-10") of sea level rise between now and 2050. And we're currently looking at a minimum 28-61cm (11-24") of sea level rise between now and 2100, and a minimum 40-95cm (16-38") of sea level rise between now and 2200. And those are the best-case scenarios, based: on the most conservative of scientists' conclusions; and on the world taking drastic action to reduce greenhouse gas emissions starting right now (a depressingly unlikely occurrence).

So, ok, perhaps sea level rise ain't gotten real yet for most people (although it sure has for some people). But I got news for y'all: it's gonna get a whole lot realer.

It's complicated

Sea level rise is, admittedly, one of the trickier symptoms of climate change to get your head around.

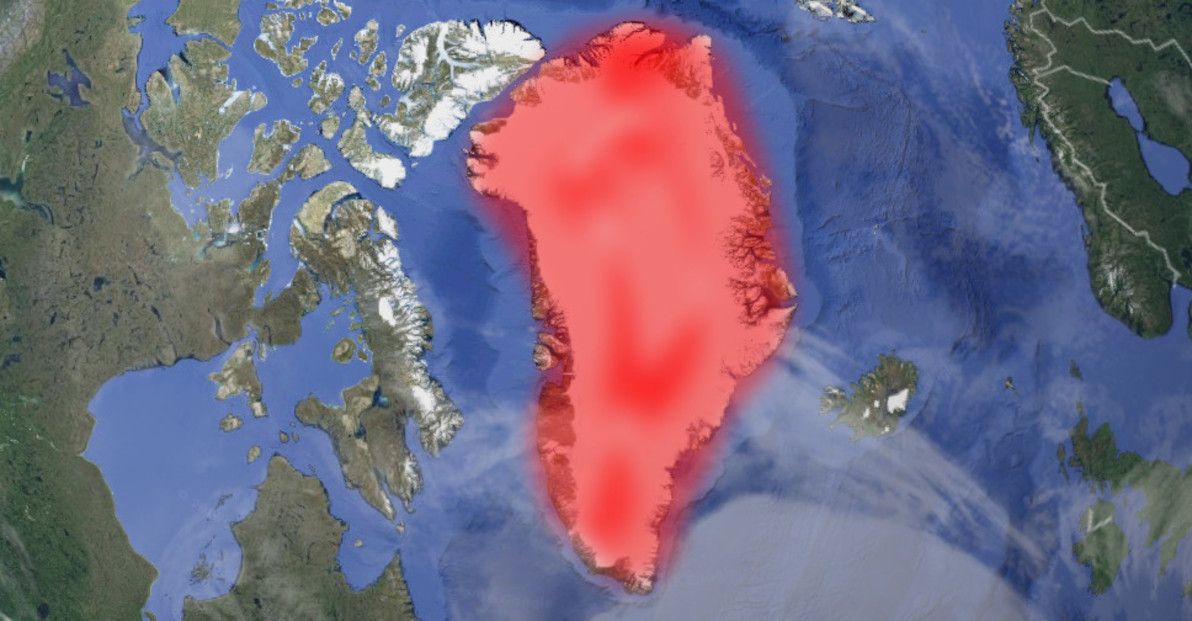

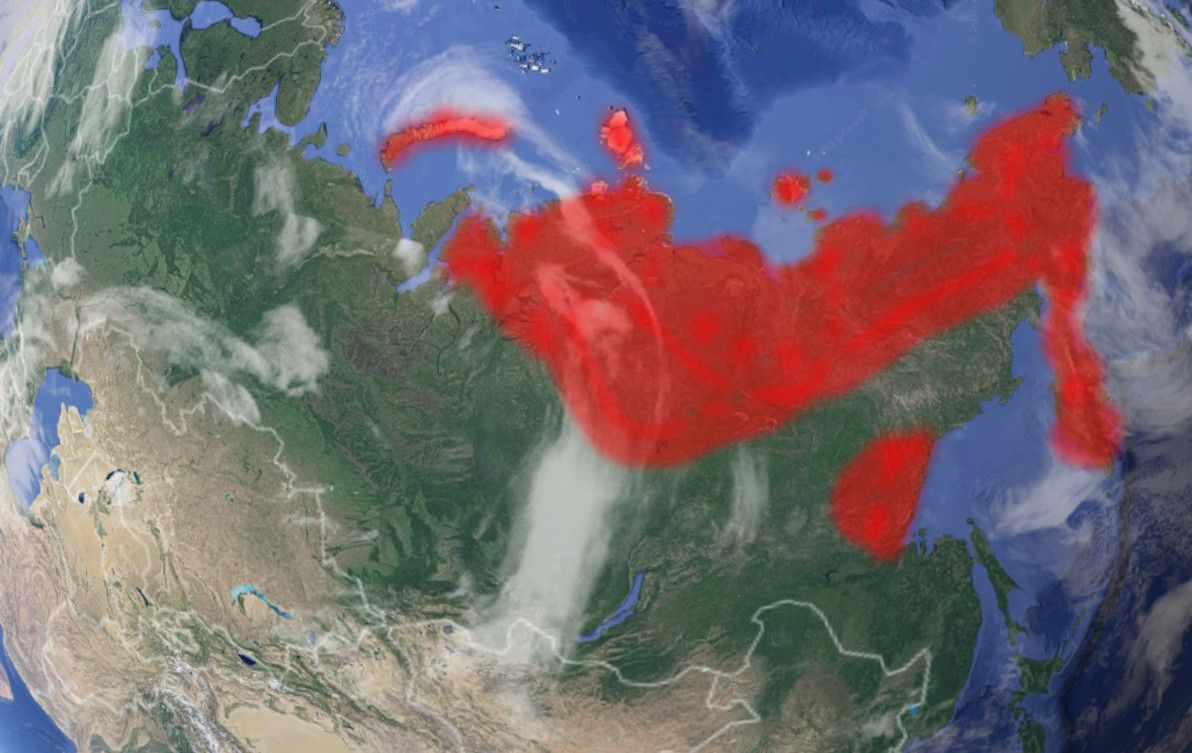

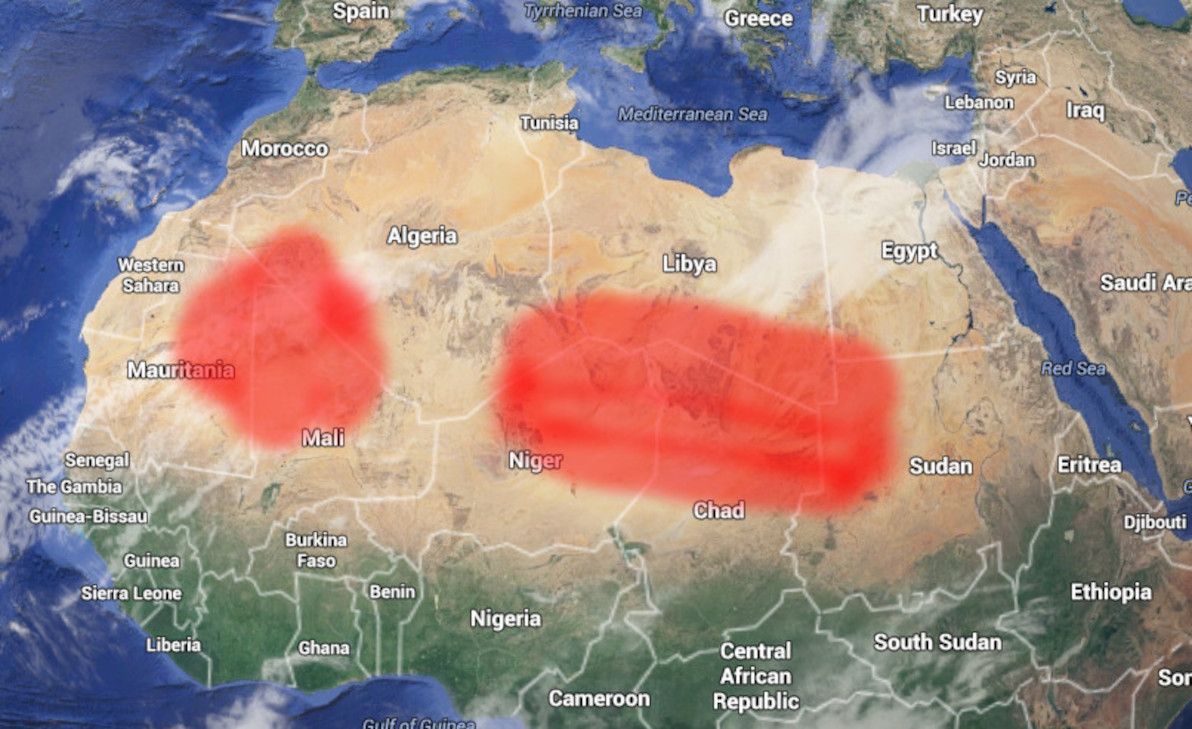

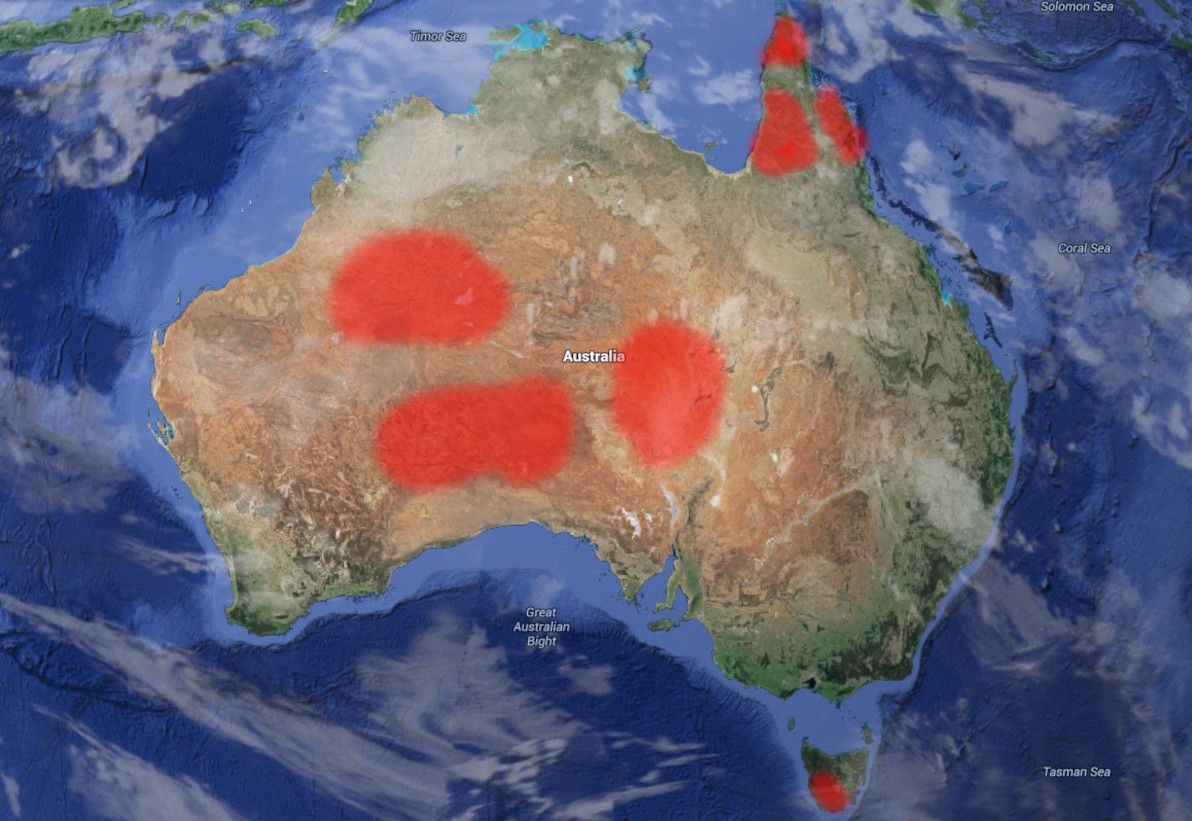

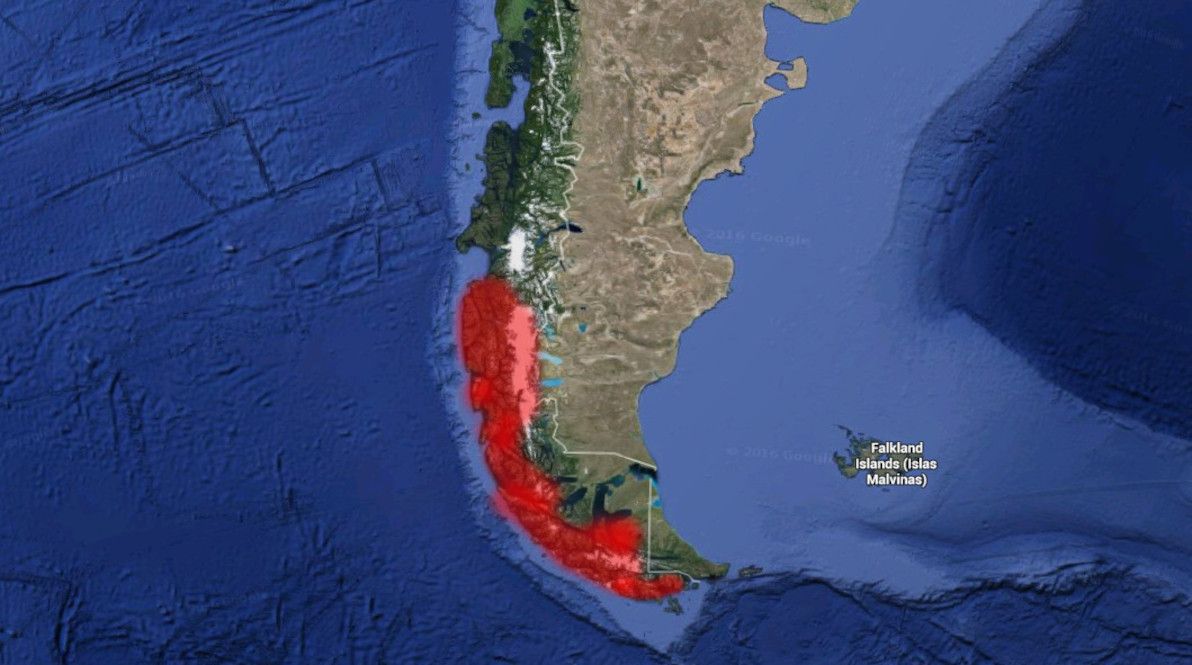

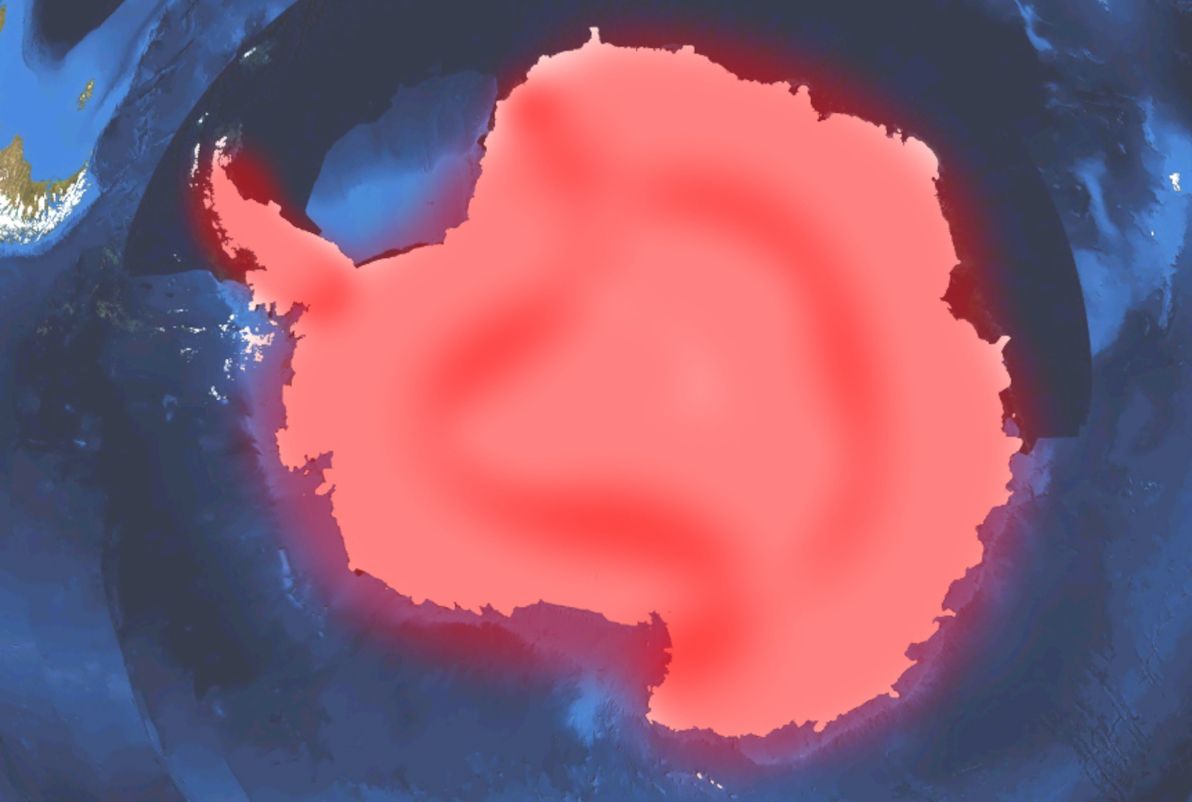

Firstly, it hasn't occurred – at least, not in most of the world – in as dramatic or as visible a manner as various other phenomena have. Glacial melting is clear as day, to anyone who is a local resident of, or a long-time vacationer to, one of the hotspots. Droughts, heatwaves and bushfires / wildfires have been noticeably increasing in both frequency and intensity, in North America, in Europe, and here in Australia (to name but a few places). Glaciers don't un-retreat, and charred countryside doesn't un-burn, when the tide goes out.

The only places in the world where the sea level rise merde (if you'll excuse my French) has already visibly hit the fan, are remote, impoverished, sparsely-populated, seldom-visited islands, particularly in the Pacific. So it's relatively easy, right now, for much of the world to feign blissful ignorance, if everything appears to still be cool and normal on the coastline down the road from your house.

Image source: PxHere.

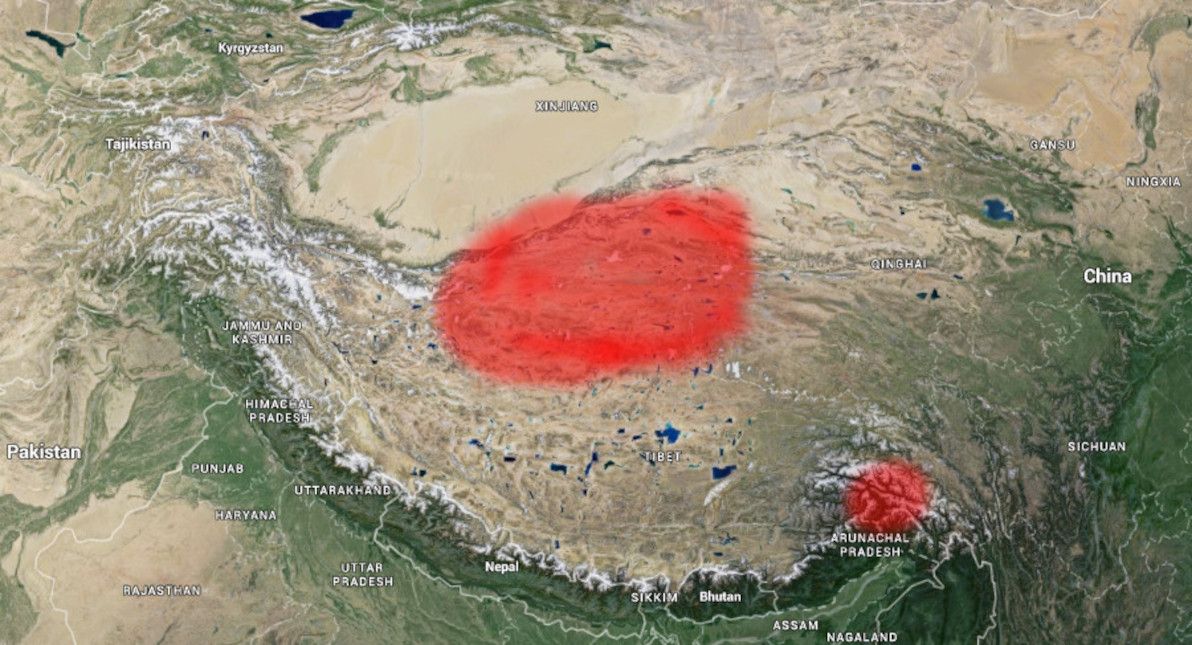

Also, the amount of sea level rise that has occurred over the past century-and-a-bit is, historically speaking, quite modest. Sure, it's the most significant rise in the past 3,000 years. However, from around 20,000 years ago (at the end of the last ice age), until around the start of Classical Antiquity, the sea level rose by about 125m (400'). That's great ammunition for deniers to go around claiming: "sea levels have always risen and fallen naturally, whatever is happening now has nothing to do with human activity".

Secondly, although scientists unanimously agree that sea level rise is accelerating and that it will get pretty bad pretty soon, there is massive uncertainty as to exactly how much and when. As I said, a 28-61cm (11-24") sea level rise between now and 2100 is the best-case forecast. The current worst-case forecast is a 1.3-1.6m (4-5') sea level rise between now and 2100. There are numerous forecasts in between.

Apart from not realising that it's already happening, and not realising that it lags behind air temperature changes by several decades, most people also don't realise for just how insanely long the sea level is going to keep on rising, as a result of industrialised humanity's love affair with carbon. In this arena too, estimates vary quite widely. But we're looking at a best-case forecast of a 2-3m (6-10') sea level rise over the next 2,000 years, and a current worst-case forecast of a 19-22m (62-72') sea level rise over the next 2,000 years.

But that's not all. The thing is, all that carbon we've emitted lately, it just ain't going anywhere in a hurry, it's going to keep hanging around like a bad smell. As such, we're looking at a best-case forecast of a 6-7m (19-23') sea level rise over the next 10,000 years, and a current worst-case forecast of a 52m (170') sea level rise over the next 10,000 years.

Image source: Wallpaper Delight.

Get ready

The sea may have only barely perceptibly risen so far, but it's about to rise a whole lot more. It's going to happen soon enough, that it will have a real and devastating effect, not on your great-grandchildren, not on your grandchildren, but on your children (and mine) who have already been born. Even considering the best-case forecasts, the map of the world's coastlines will already be different by the end of this century; and it will be unrecognisable in millennia to come.


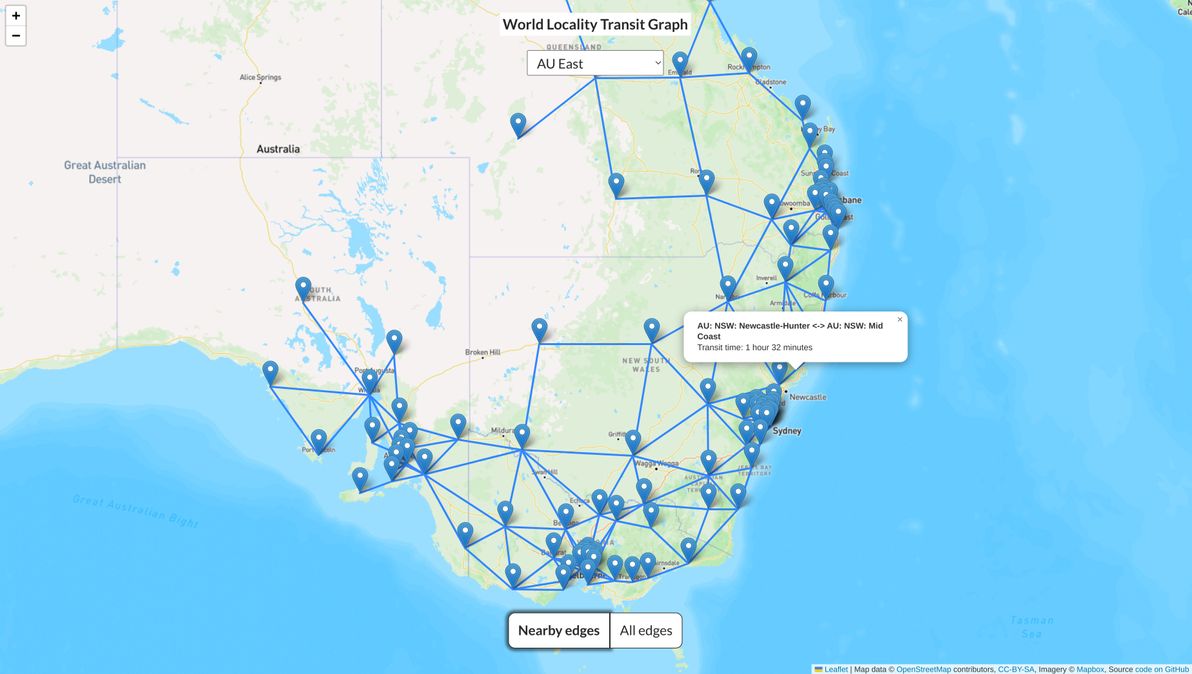

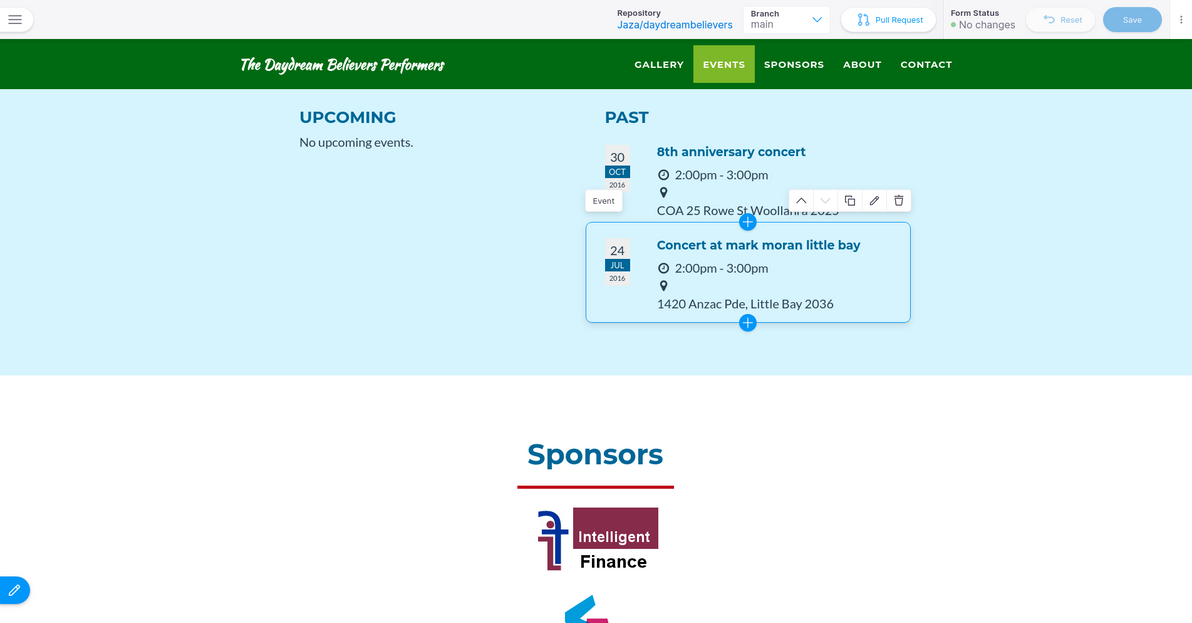

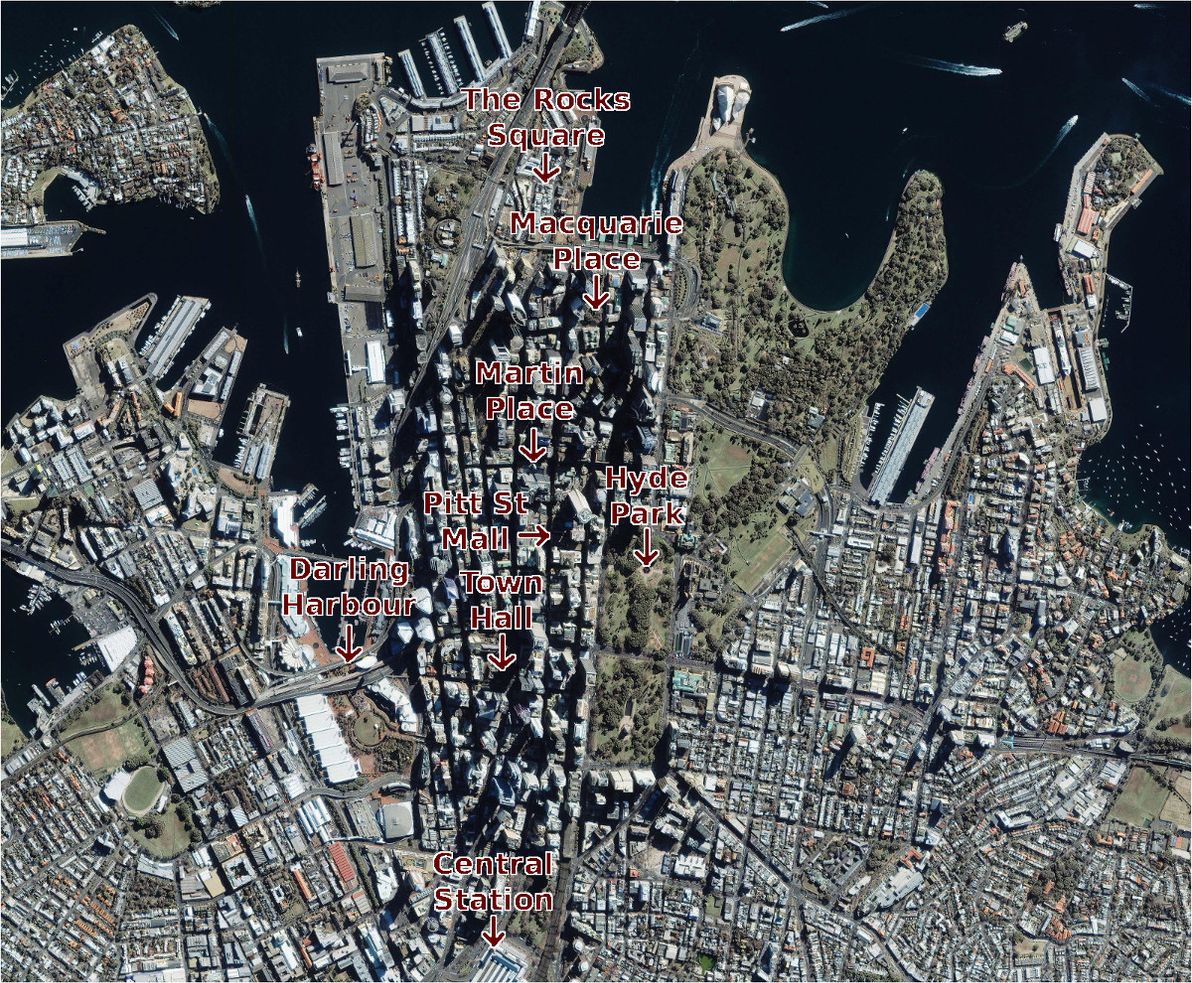

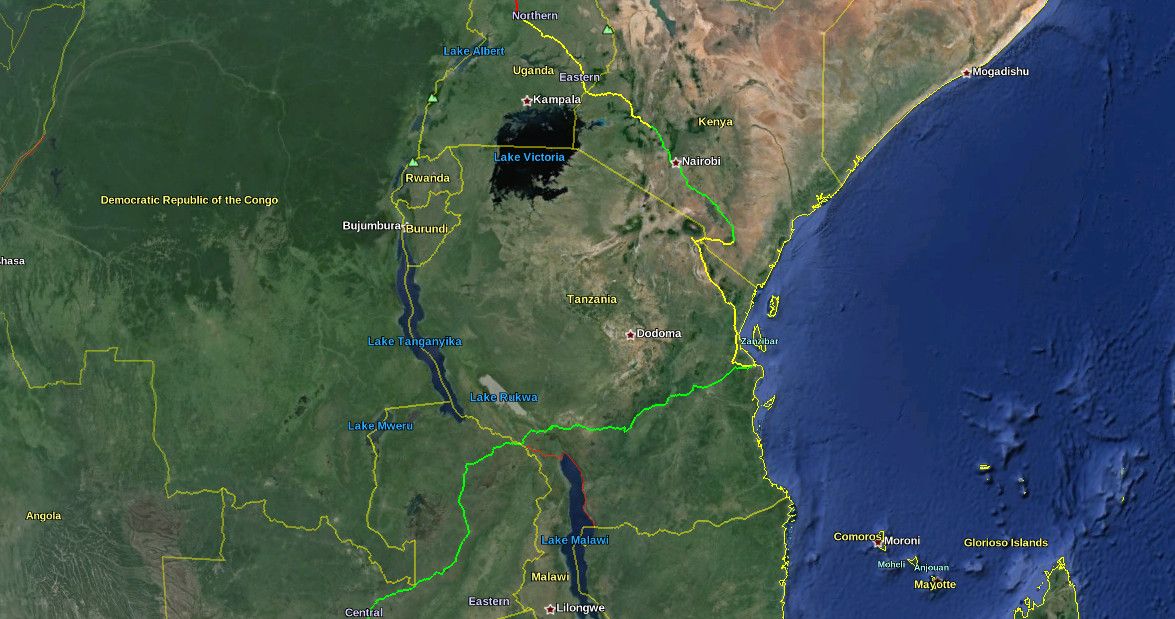

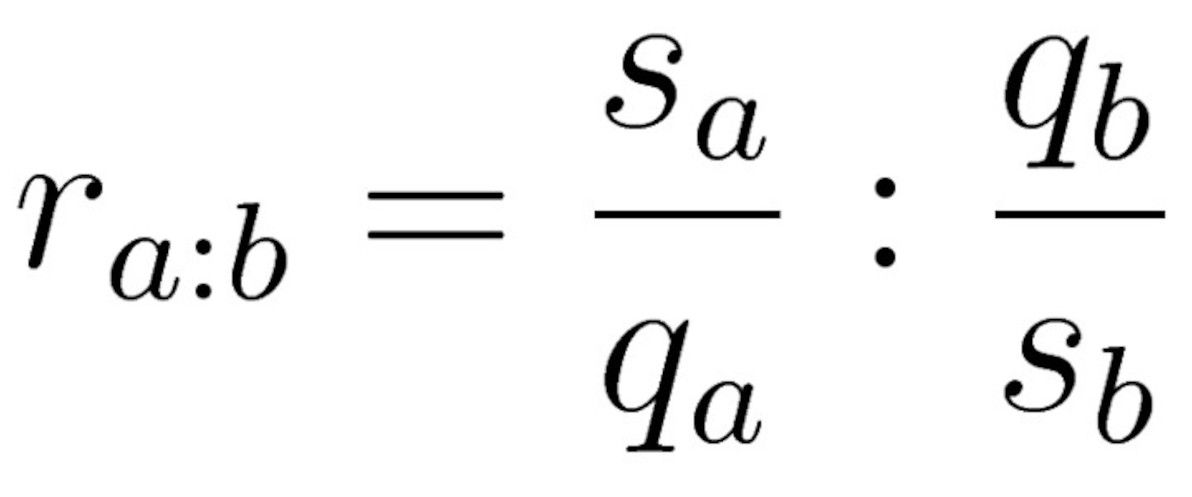

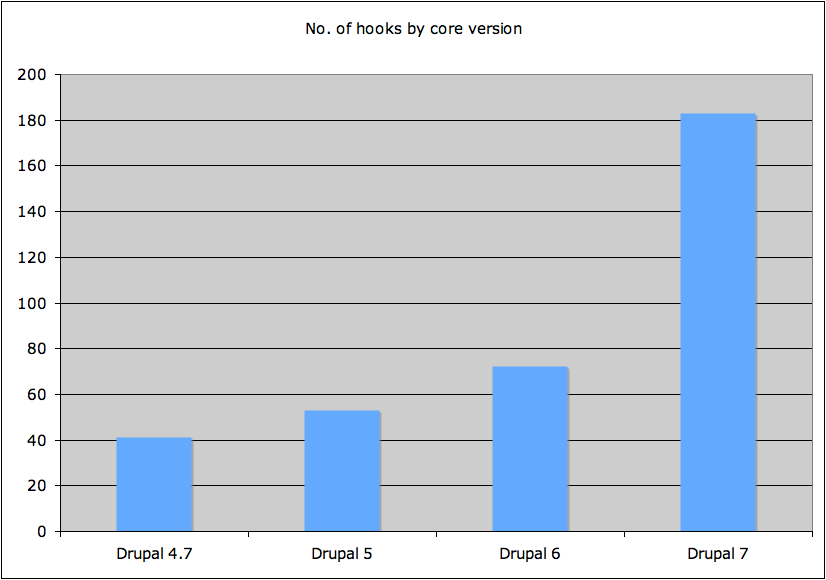

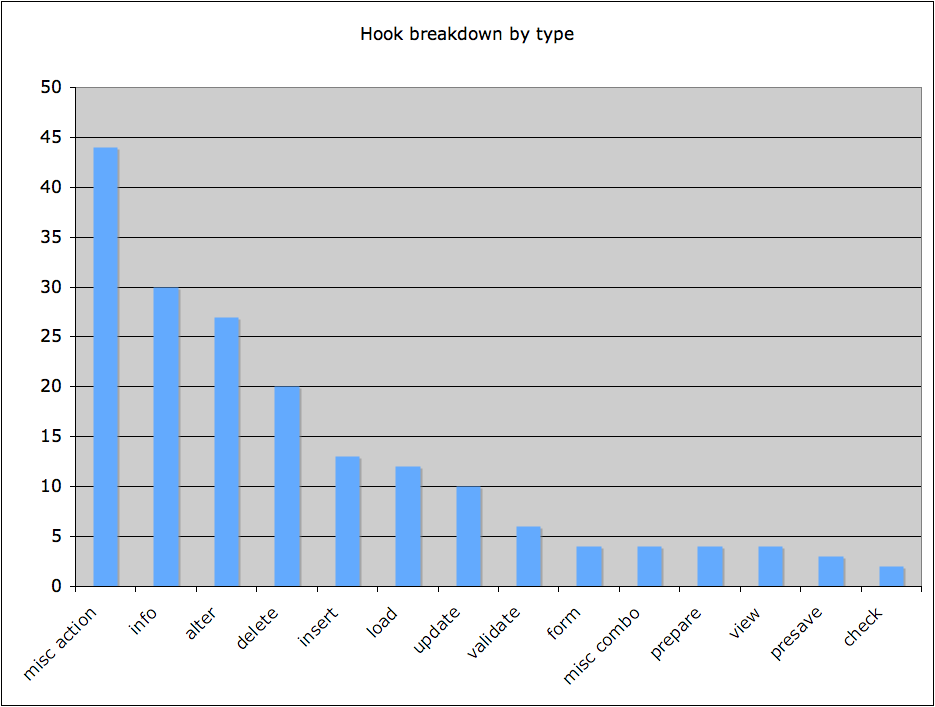

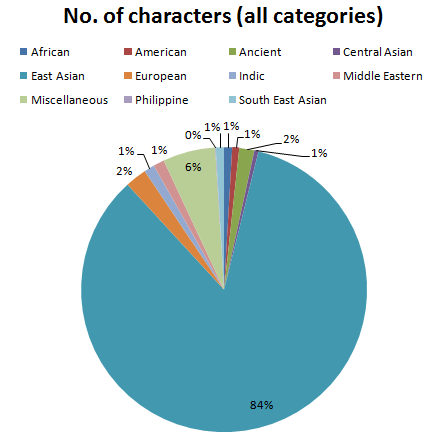

About the graph

(Note: this section is copied from the README that can be seen on GitHub.)

A "locality", for the purposes of the graph, is:

- In a large metropolitan area: a group of neighbourhoods / suburbs, e.g. "inner city", "southern suburbs"; such a locality should (as a rough guide) be 15-30 minutes transit time from its adjacent metropolitan localities

- In a (small urban area or) semi-rural area: the whole main town / city, and usually also neighbouring towns / countryside, e.g. "foobar valley", "fizzbuzz peninsula"; such a locality should (as a rough guide) be 1-2 hours transit time from its adjacent semi-rural localities

- In a remote rural area: all of the towns / countryside within a large area, e.g. "far north", "highlands"; such a locality should (as a rough guide) be 3-5 hours transit time from all adjacent localities

Additionally, regardless of whether it's big-city or middle-of-nowhere:

- Someone who lives in one locality, should consider anyone living in the same locality as being "in my area" (folks in a city of several million people have quite a different definition of "in my area", compared to folks whose next-door neighbour is over the horizon!)

- Each locality should have its own identity, both geographical and cultural; a person who lives in a locality should feel some connection (could be positive or negative!) to their locality's identity

Each locality is represented as a node in the graph. Two localities should be connected as "nearby edges" (i.e. there should be an edge connecting their nodes in the graph) if and only if:

- They are geographically adjacent

- It's possible to travel between them using one or more spontaneous transport modes, e.g. private car, some trains / buses / ferries, walking, bicycle, taxi (not non-spontaneous transport, i.e. not transport that has to be booked in advance, that may have infrequent service, and that may not be available 24/7, e.g. flights, some trains / buses / ferries)

- Travel between them using the fastest available spontaneous transport mode is no more than approximately 5 hours (under ideal conditions, i.e. very low traffic, no adverse weather, no roadwork / trackwork)
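The three conditions above can be read as a simple predicate over a pair of localities. Here's a rough sketch; the CandidateLink shape and its field names are invented for illustration, and they don't mirror the CSV columns in the repo.

```typescript
// Hypothetical description of a candidate link between two localities.
interface CandidateLink {
  geographicallyAdjacent: boolean;
  // Fastest transit time by a spontaneous transport mode, under ideal
  // conditions, in hours; null when no spontaneous mode connects the two.
  fastestSpontaneousHours: number | null;
}

// The "approximately 5 hours" guideline, treated here as a hard limit.
const MAX_NEARBY_HOURS = 5;

const isNearbyEdge = (link: CandidateLink): boolean =>
  link.geographicallyAdjacent &&
  link.fastestSpontaneousHours !== null &&
  link.fastestSpontaneousHours <= MAX_NEARBY_HOURS;

// Adjacent and 1.5 hours apart by car: connected.
console.log(isNearbyEdge({ geographicallyAdjacent: true, fastestSpontaneousHours: 1.5 }));
// Adjacent but 12 hours apart: not connected.
console.log(isNearbyEdge({ geographicallyAdjacent: true, fastestSpontaneousHours: 12 }));
```

In practice the 5-hour figure is a rough guide rather than a strict cut-off, so a real dataset would involve some human judgement that this predicate doesn't capture.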

There is also an edge for every single possible pair of localities (in each connected graph), with a transit time of up to 5.5 hours, which can be seen in the "all edges" map view. These edges are calculated and generated in advance, using the Floyd-Warshall CSV Generator.

Due to the "5-hour max transit time" rule, and due to the "only spontaneous transport modes" rule, it's actually multiple graphs, not just one graph. This is because there is often no way to travel between two localities while adhering to those rules, usually due to a body of water being in the way, but sometimes due to a land route being extremely long and desolate (e.g. crossing the Nullarbor Plain between South Australia and Western Australia takes at least 12 hours of non-stop driving).
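In graph terms, "multiple graphs" means multiple connected components. As an illustration only (this is not code from the project), the components can be counted with a breadth-first traversal; the locality names below are placeholders.

```typescript
type Edge = [string, string];

// Counts the connected components of an undirected graph using breadth-first search.
const countComponents = (nodes: string[], edges: Edge[]): number => {
  const neighbours = new Map<string, string[]>();
  for (const node of nodes) {
    neighbours.set(node, []);
  }
  for (const [a, b] of edges) {
    // Edges that mention an unknown node are ignored.
    if (!neighbours.has(a) || !neighbours.has(b)) continue;
    neighbours.get(a)?.push(b);
    neighbours.get(b)?.push(a);
  }

  const seen = new Set<string>();
  let components = 0;
  for (const start of nodes) {
    if (seen.has(start)) continue;
    components += 1;
    seen.add(start);
    const queue: string[] = [start];
    while (queue.length > 0) {
      const current = queue.shift() as string;
      for (const next of neighbours.get(current) ?? []) {
        if (!seen.has(next)) {
          seen.add(next);
          queue.push(next);
        }
      }
    }
  }
  return components;
};

// Two mainland localities joined by road, plus an island with no spontaneous link.
console.log(
  countComponents(
    ["mainland-a", "mainland-b", "island"],
    [["mainland-a", "mainland-b"]],
  ),
); // prints 2
```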

Why these rules? Because, being a "transit graph", the idea is that it only models "local" travel, i.e. travel that someone would undertake with little or no notice, at little or no financial cost, ideally (for metropolitan localities) local enough that one could still make it back home for the night, or (for rural and semi-rural localities) at least local enough that one could easily complete the journey one-way in a single day.

So, the aim of this graph is to model, for each locality, all of the other nearby localities that are "close enough", in terms of transit time, for casual travel - perhaps to catch up with friends / family, perhaps for local tourism, perhaps for shopping - to be feasible on a regular basis.

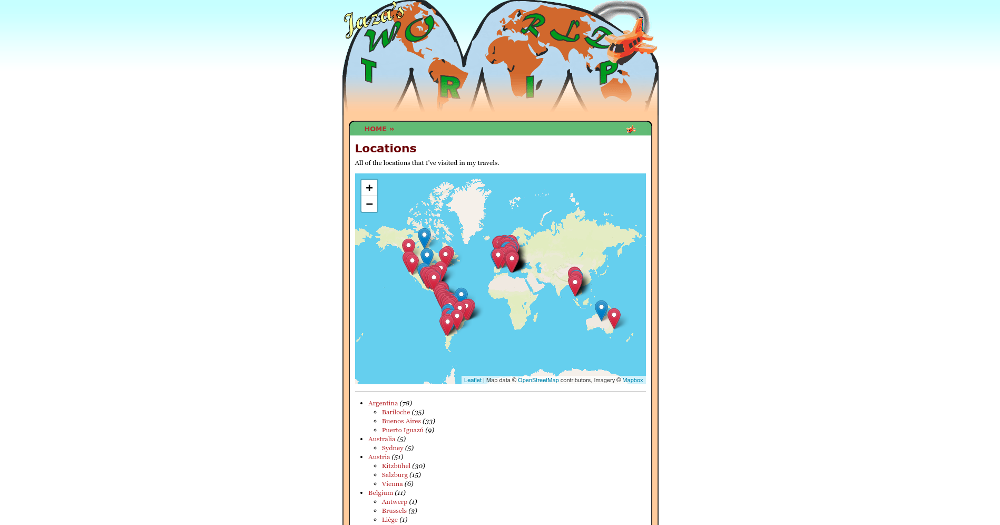

Built as a static site, using Leaflet as the map engine, OpenStreetMap for map data, and Mapbox for map tiles. Graph nodes and edges are stored in CSV files in the csv/ directory of the repo.

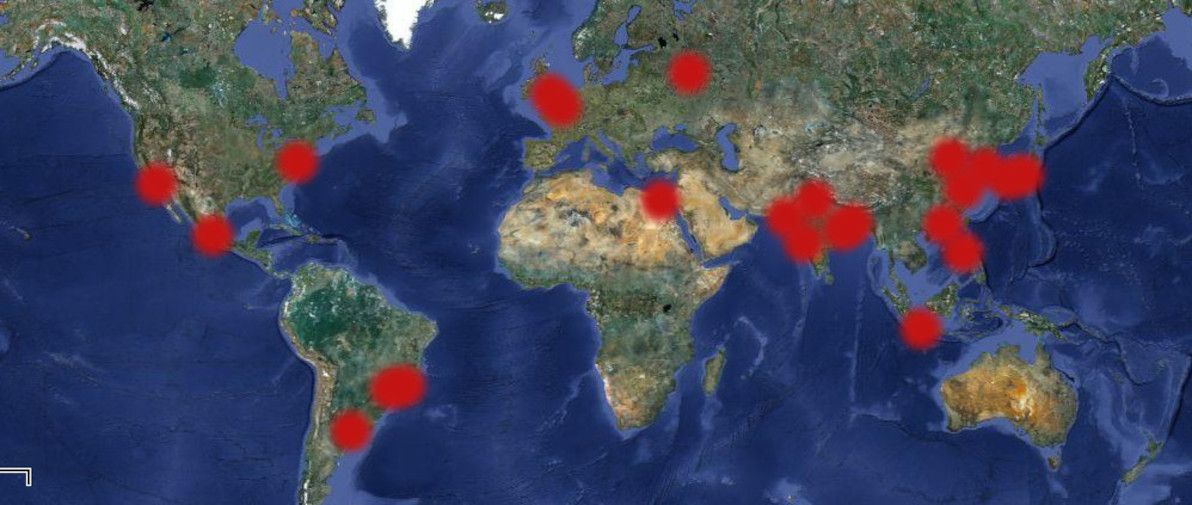

So far, there is only data for Australia and New Zealand. More world regions coming soon. If you're keen to help out with expanding the dataset, contributions are welcome! Ideally in the form of GitHub pull requests, but otherwise, just get in touch and send me data.

Cool, but why?

I built it primarily because I have another project in mind, that I may or may not build to completion, and which I may or may not be blogging about in future, for which the dataset in this graph would be really useful.

Also: fun!

Also: as far as I'm aware, nothing like this currently exists.

Also: I'd say it's a good thing to have a free, open, and dead-simple dataset like this, that provides a good alternative / good fallback to, for example, Google Maps's route travel time estimates.

Hope you like the World Locality Transit Graph. Feedback welcome.

The script is a simple wrapper of the SciPy floyd_warshall function, which in turn implements the Floyd-Warshall Algorithm. Hope you find it useful for all your directed (or undirected) weighted graph needs.

Given an input CSV of the following graph edges:

    point_a,point_b,cost
    a,b,5
    b,c,8
    c,d,23
    d,e,6

When the script is called as follows:

    floyd-warshall-csv-generator \
      /path/to/input_data.csv \
      --vertex-i-column-name point_a \
      --vertex-j-column-name point_b \
      --weight-column-name cost \
      --no-directed \
      --max-weight 35

It generates an output CSV that looks like this:

    point_a,point_b,cost
    a,b,5.0
    a,c,13.0
    b,c,8.0
    b,d,31.0
    c,d,23.0
    c,e,29.0
    d,e,6.0

That is, it generates the shortest (direct or indirect) path from each point to every other point, based on the (direct) paths that are already known, with duplicate (undirected) paths filtered out, and with paths whose cost is more than max-weight filtered out.
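For anyone curious about what happens under the hood, the same example can be reproduced in a few lines without SciPy. The sketch below is a plain TypeScript Floyd-Warshall written for illustration, and it is not the script's actual code (which delegates to SciPy); allPairsWithin and WeightedEdge are made-up names.

```typescript
type WeightedEdge = [string, string, number];

// Undirected Floyd-Warshall: returns each unordered pair of vertices once,
// keeping only the pairs whose shortest-path cost is at most maxWeight.
const allPairsWithin = (
  edges: WeightedEdge[],
  maxWeight: number,
): WeightedEdge[] => {
  const vertexSet = new Set<string>();
  for (const [a, b] of edges) {
    vertexSet.add(a);
    vertexSet.add(b);
  }
  const vertices = Array.from(vertexSet).sort();
  const n = vertices.length;
  const indexOf = new Map<string, number>();
  vertices.forEach((vertex, i) => indexOf.set(vertex, i));

  // dist[i][j] starts as the direct edge cost, or Infinity when there is none.
  const dist: number[][] = vertices.map((_v, i) =>
    vertices.map((_w, j) => (i === j ? 0 : Infinity)),
  );
  for (const [a, b, cost] of edges) {
    const i = indexOf.get(a) as number;
    const j = indexOf.get(b) as number;
    dist[i][j] = Math.min(dist[i][j], cost);
    dist[j][i] = Math.min(dist[j][i], cost);
  }

  // Allow each vertex k in turn to act as an intermediate stop.
  for (let k = 0; k < n; k++) {
    for (let i = 0; i < n; i++) {
      for (let j = 0; j < n; j++) {
        if (dist[i][k] + dist[k][j] < dist[i][j]) {
          dist[i][j] = dist[i][k] + dist[k][j];
        }
      }
    }
  }

  const result: WeightedEdge[] = [];
  for (let i = 0; i < n; i++) {
    for (let j = i + 1; j < n; j++) {
      if (dist[i][j] <= maxWeight) {
        result.push([vertices[i], vertices[j], dist[i][j]]);
      }
    }
  }
  return result;
};

const shortestPaths = allPairsWithin(
  [["a", "b", 5], ["b", "c", 8], ["c", "d", 23], ["d", "e", 6]],
  35,
);
console.log(shortestPaths);
```

For the sample input this yields the same seven pairs as the CSV above: a to d (36), a to e (42) and b to e (37) all exceed the limit of 35, so they're dropped.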

I wrote this script in order to generate the "all edges" data that's shown in the World Locality Transit Graph, which I'll also be blogging about real soon. Let me know if you put this script to any other interesting uses!


Image source: The Guardian

Let me start by lauding FastAPI's excellent documentation. Having a track record of rock-solid documentation was (and still is!) – in my opinion – Django's most impressive achievement, and I'm pleased to see that it's also becoming Django's most enduring legacy. FastAPI, like Django, includes docs changes together with code changes in a single pull request (as they're called these days); it clearly documents that certain features are deprecated; and its docs often go beyond what is strictly required, by including end-to-end instructions for integrating with various third-party tools and services.

FastAPI's docs raise the bar further still, with more than a dash of humour in many sections, and with a frequent sprinkling of emojis as standard fare. That latter convention I have some reservations about – call me old-fashioned, but you could say that emoji-filled docs are unprofessional and a distraction. However, they seem to enhance rather than detract from overall quality; and, you know what, they put a non-emoji real-life smile on my face. So, they get my tick of approval.

FastAPI more-or-less sits in the Flask camp of being a "microframework", in that it doesn't include an ORM, a template engine, or various other things that Django has always advertised as being part of its "batteries included" philosophy. But, on the other hand, it's more in the Django camp of being highly opinionated, and of consciously including things with which it wants a hassle-free experience. Most notably, it includes Swagger UI and Redoc out-of-the-box. I personally had quite a painful experience generating Swagger docs in Flask, back in the day; and I've been tremendously pleased with how API doc generation Just Works™ in FastAPI.

Much like with Flask, being a microframework means that FastAPI very much stands on the shoulders of giants. Just as Flask is a thin wrapper on top of Werkzeug, with the latter providing all things WSGI; so too is FastAPI a thin wrapper on top of Starlette, with the latter providing all things ASGI. FastAPI also heavily depends on Pydantic for data schemas / validation, for strongly-typed superpowers, for settings handling, and for all things JSON. I think it's fair to say that Pydantic is FastAPI's secret sauce.

My use of FastAPI so far has been rather unusual, in that I've been building apps that primarily talk to an Oracle database (and, indeed, this is unusual for Python dev more generally). I started out by depending on the (now-deprecated) cx_Oracle library, and I've recently switched to its successor python-oracledb. I was pleased to see that the fine folks at Oracle recently released full async support for python-oracledb, which I'm now taking full advantage of in the context of FastAPI. I wrote a little library called fastapi-oracle which I'm using as a bit of glue code, and I hope it's of use to anyone else out there who needs to marry those two particular bits of tech together.

There has been a not-insignificant amount of chit-chat on the interwebz lately, voicing concern that FastAPI is a one-man show (with its BDFL @tiangolo showing no intention of that changing anytime soon), and that the FastAPI issue and pull request queues receive insufficient TLC. Based on my experience so far, I'm not too concerned about this. It is, generally speaking, not ideal if a project has a bus factor of 1, and if support requests and bug fixes are left to rot.

However, in my opinion, the code and the documentation of FastAPI are both high-quality and highly-consistent, and I appreciate that this is largely thanks to @tiangolo continuing to personally oversee every small change, and that loosening the reins would mean a high risk of that deteriorating. And, speaking of quality, I personally have yet to uncover any bugs either in FastAPI or its core dependencies (which I'm pleasantly surprised by, considering how heavily I've been using it) – it would appear that the items languishing in the queue are lower priority, and it would appear that @tiangolo is on top of critical bugs as they arise.

In summary, I'm enjoying coding with FastAPI, I feel like it's a great fit for building Python web apps in 2024, and it will continue to be my Python framework of choice for the foreseeable future.


Image source: Fandom

I seldom write purely political pieces. I'm averse to walking into the ring and picking a fight with anyone. And honestly I find not-particularly-political writing on other topics (such as history and tech) to be more fun. Nor do I consider myself to be all that passionate about indigenous affairs – at least, not compared with other progressive causes such as the environment or refugees (maybe because I'm a racist privileged white guy myself!). However, with only five days to go until Australia votes (and with the forecast for said vote looking quite dismal), I thought I'd share my two cents on what, in my humble opinion, the Voice is all about.

I don't know about my fellow Yes advocates, but – call me cynical if you will – personally I have zero expectations of the plight of indigenous Australians actually improving, should the Voice be established. This is not about closing the gap. There are massive issues affecting Aborigines and Torres Strait Islanders, those issues have festered for a long time, and there's no silver bullet – no establishing of yet another advisory body, no throwing around of yet more money – that will magically or instantly change that. I hope that the Voice does make an inkling of a difference on the ground, but I'd say it'll be an inkling at best.

So then, what is this referendum about? It's about recognising indigenous Australians in the Constitution for the first time ever! (First-ever not racist mention, at least.) It's about adding something to the Constitution that's more than a purely symbolic gesture. It's about doing what indigenous Australians have asked for (yes, read the facts, they have asked for it!). It's about not having yet another decade or three of absolutely no progress being made towards reconciliation. And it's about Australia not being an embarrassment to the world (in yet another way).

I'm not going to bother to regurgitate all the assertions of the Yes campaign, nor to try to refute all the vitriol of the No campaign, in this here humble piece (including, among the countless other bits of misinformation, the ridiculous claim that "the Voice is racist"). I just have this simple argument to put to y'all.

A vote for No is a vote for nothing. The Voice is something. It's not something perfect, but it's something, and it's an appropriate something for Australia in 2023, and it's better than nothing. And rather than being afraid of that modest little something (and expressing your fear by way of hostility), the only thing you should actually be afraid of – sure as hell the only thing I'm afraid of – is the shame and disgrace of doing more nothing.

]]>

Image source: Legends Revealed

Amazingly, to this day – more than three decades after the fall of communism – this city of about 100,000 residents (and its surrounds, including the Mayak nuclear facility, which is ground zero) remains a "closed city", with entry forbidden to all non-authorised personnel.

And, apart from being enclosed by barbed wire, it appears to also be enclosed in a time bubble, with the locals still routinely parroting the Soviet propaganda that labelled them "the nuclear shield and saviours of the world"; and with the Soviet-era pact still effectively in place that, in exchange for their loyalty, their silence, and a not-un-unhealthy dose of radiation, their basic needs (and some relative luxuries to boot) are taken care of for life.

Image source: Google Maps

So, as I said, there's very little information available on Ozersk, because to this day: it remains forbidden for virtually anyone to enter the area; it remains forbidden for anyone involved to divulge any information; and it remains forbidden to take photos / videos of it, or to access any documents relating to it. For over three decades, it had no name and was marked on no maps; and honestly, it seems like that might as well still be the case.

Image source: imgflip

Frankly, even had the 1957 explosion not occurred, the area would still be horrendously contaminated. Both before and after that incident, they were dumping enormous quantities of unadulterated radioactive waste directly into the water bodies in the vicinity, and indeed, they continue to do so, to this day. It's astounding that anyone still lives there – especially since, ostensibly, you know, Russians are able to live where they choose these days.

Image source: imgflip

As far as I can gather, from the available sources, most of the present-day residents of Ozersk are the descendants of those who were originally forced to go and live there in Stalin's time. And, apparently, most people who have been born and raised there since the fall of the USSR, choose to stay, due to: family ties; the government continuing to provide for their wellbeing; ongoing patriotic pride in fulfilling their duty; fear of the big bad world outside; and a belief (foolhardy or not) that the health risks are manageable. It's certainly possible that there are more sinister reasons why most people stay; but then again, in my opinion, it's not implausible that no outright threats or prohibitions are needed, in order to maintain the status quo.

Image source: Wonkapedia

Only one insider appears to have denounced the whole spectacle in recent history: lifelong resident Nadezhda Kutepova, who gave an in-depth interview to Western media several years ago. Kutepova fled Ozersk, and Russia, after threats were made against her, due to her campaigning to expose the truth about the prevalence of radiation sickness in her home town.

And only one outsider appears to have ever gotten in covertly, lived, and told the tale: Samira Goetschel, who produced City 40, the world's only documentary about life in Ozersk (the film features interview footage with Kutepova, along with several other Ozersk locals). Honestly, life inside North Korea has been covered more comprehensively than this.

Image source: imgflip

Considering how things are going in Putin's Russia, I don't imagine anything will be changing in Ozersk for a long time yet. Looks like business as usual – utterly trash the environment, manufacture dodgy nuclear stuff, maintain total secrecy, brainwash the locals, cause sickness and death – is set to continue indefinitely.

You can find much more in-depth information, in many of the articles and videos that I've linked to. Anyway, in a nutshell, Ozersk: you've never been there, and you'll never be able to go there, even if you wanted to. Which you don't. Now, please forget everything you've just read. This article will self-destruct in five seconds.

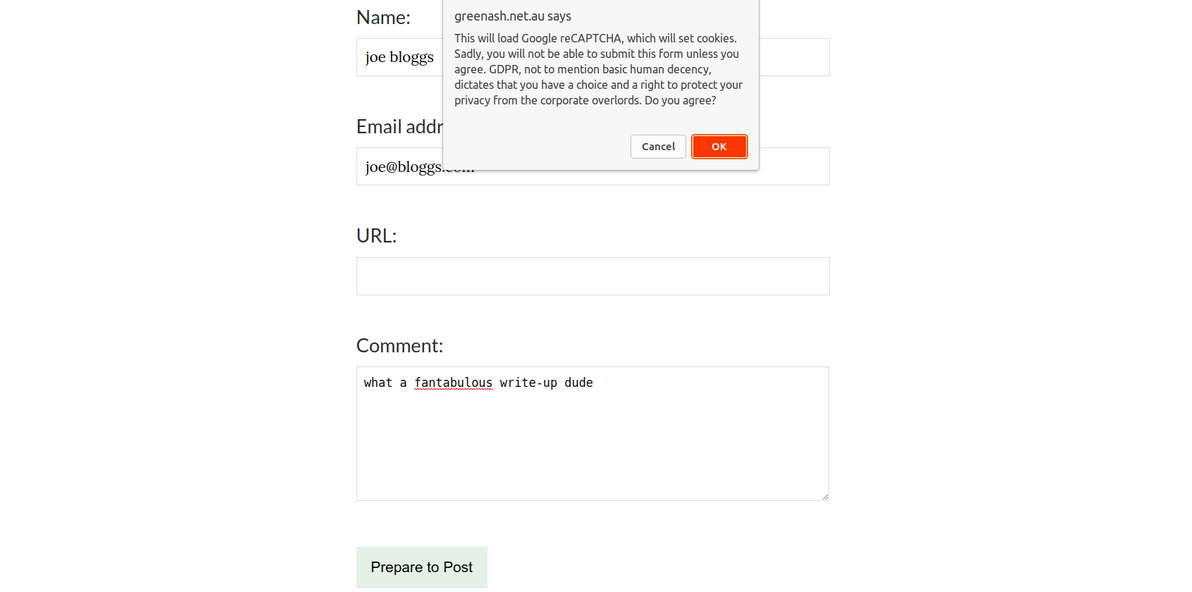

]]>In order to be GDPR-compliant, and in order to just be a good netizen, I made sure, when building GreenAsh v5 earlier this year, to not use services that set cookies at all, wherever possible. In previous iterations of GreenAsh, I used Google Analytics, which (like basically all Google services) is a notorious GDPR offender; this time around, I instead used Cloudflare Web Analytics, which is a good enough replacement for my modest needs, and which ticks all the privacy boxes.

However, on pages with forms at least, I still need Google reCAPTCHA. I'd like to instead use the privacy-conscious hCaptcha, but Netlify Forms only supports reCAPTCHA, so I'm stuck with it for now. Here's how I seek the user's consent before loading reCAPTCHA.

    ready(() => {
      const submitButton = document.getElementById('submit-after-recaptcha');

      // No reCAPTCHA-protected form on this page: nothing to do.
      if (submitButton == null) {
        return;
      }

      window.originalSubmitFormButtonText = submitButton.textContent;
      submitButton.textContent = 'Prepare to ' + window.originalSubmitFormButtonText;

      submitButton.addEventListener("click", e => {
        // Button text already restored by recaptchaOnloadCallback: reCAPTCHA
        // is loaded, so let the click submit the form as usual.
        if (submitButton.textContent === window.originalSubmitFormButtonText) {
          return;
        }

        const agreeToCookiesMessage =
          'This will load Google reCAPTCHA, which will set cookies. Sadly, you will ' +
          'not be able to submit this form unless you agree. GDPR, not to mention ' +
          'basic human decency, dictates that you have a choice and a right to protect ' +
          'your privacy from the corporate overlords. Do you agree?';

        // Consent given: inject the reCAPTCHA script into the page.
        if (window.confirm(agreeToCookiesMessage)) {
          const recaptchaScript = document.createElement('script');
          recaptchaScript.setAttribute(
            'src',
            'https://www.google.com/recaptcha/api.js?onload=recaptchaOnloadCallback' +
            '&render=explicit');
          recaptchaScript.setAttribute('async', '');
          recaptchaScript.setAttribute('defer', '');
          document.head.appendChild(recaptchaScript);
        }

        // Either way, this click must not submit the form.
        e.preventDefault();
      });
    });

I load this JS on every page, thus putting it on the lookout for forms that require reCAPTCHA (in my case, that's comment forms and the contact form). It changes the form's submit button text from, for example, "Send", to instead be "Prepare to Send" (as a hint to the user that clicking the button won't actually submit the form, and that further action will be required before that happens).

It hijacks the button's click event, such that if the user hasn't yet provided consent, it shows a prompt. When consent is given, the Google reCAPTCHA JS is added to the DOM, and reCAPTCHA is told to call recaptchaOnloadCallback when it's done loading. If the user has already provided consent, then the button's default click behaviour of triggering form submission is allowed.
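The script above calls a `ready` helper that isn't shown in this post. Here's a minimal sketch of what such a helper might look like, assuming it's the usual "run this once the DOM has been parsed" wrapper. The optional `doc` parameter is purely illustrative (it makes the helper easy to exercise outside a browser), and is not something the original code necessarily has.

```javascript
// Hypothetical helper (an assumption, not taken from the site's real code):
// runs fn straight away if the DOM is already parsed, otherwise waits for
// the DOMContentLoaded event.
function ready(fn, doc = globalThis.document) {
  if (doc.readyState !== 'loading') {
    fn();
  } else {
    doc.addEventListener('DOMContentLoaded', fn, { once: true });
  }
}
```

With something like that in place, `ready(() => { /* ... */ })` is safe to call regardless of whether the script is loaded before or after the page has finished parsing.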

    {%- if params.recaptchaKey %}
    <div id="recaptcha-wrapper"></div>
    <script type="text/javascript">
    window.recaptchaOnloadCallback = () => {
      document.getElementById('submit-after-recaptcha').textContent =
        window.originalSubmitFormButtonText;
      window.grecaptcha.render(
        'recaptcha-wrapper', {'sitekey': '{{ params.recaptchaKey }}'}
      );
    };
    </script>
    {%- endif %}

I embed this HTML inside every form that requires reCAPTCHA. It defines the wrapper element into which the reCAPTCHA is injected. And it defines recaptchaOnloadCallback, which changes the submit button text back to what it originally was (e.g. changes it from "Prepare to Send" back to "Send"), and which actually renders the reCAPTCHA widget.

    <!-- ... -->
    <form other-attributes-here data-netlify-recaptcha>
      <!-- ... -->
      {% include 'components/recaptcha_loader.njk' %}
      <p>
        <button type="submit" id="submit-after-recaptcha">Send</button>
      </p>
    </form>
    <!-- ... -->

This is what my GDPR-compliant, reCAPTCHA-enabled, Netlify-powered contact form looks like. The data-netlify-recaptcha attribute tells Netlify to require a successful reCAPTCHA challenge in order to accept a submission from this form.

That's all there is to it! Not rocket science, but I just thought I'd share this with the world, because despite there being a gazillion posts on the interwebz advising that you "ask for consent before setting cookies", there seem to be surprisingly few step-by-step instructions explaining how to actually do that. And the standard advice appears to be to use a third-party script / plugin that implements an "accept cookies" popup for you, even though it's really easy to implement it yourself.

]]>

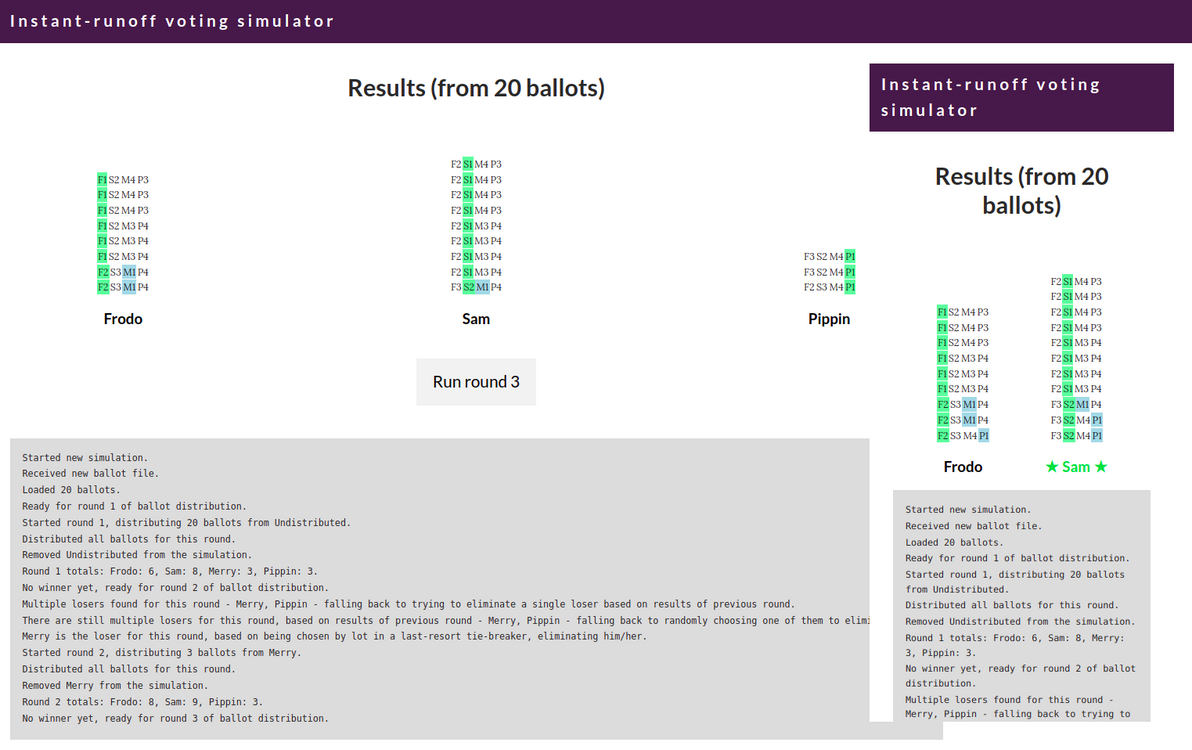

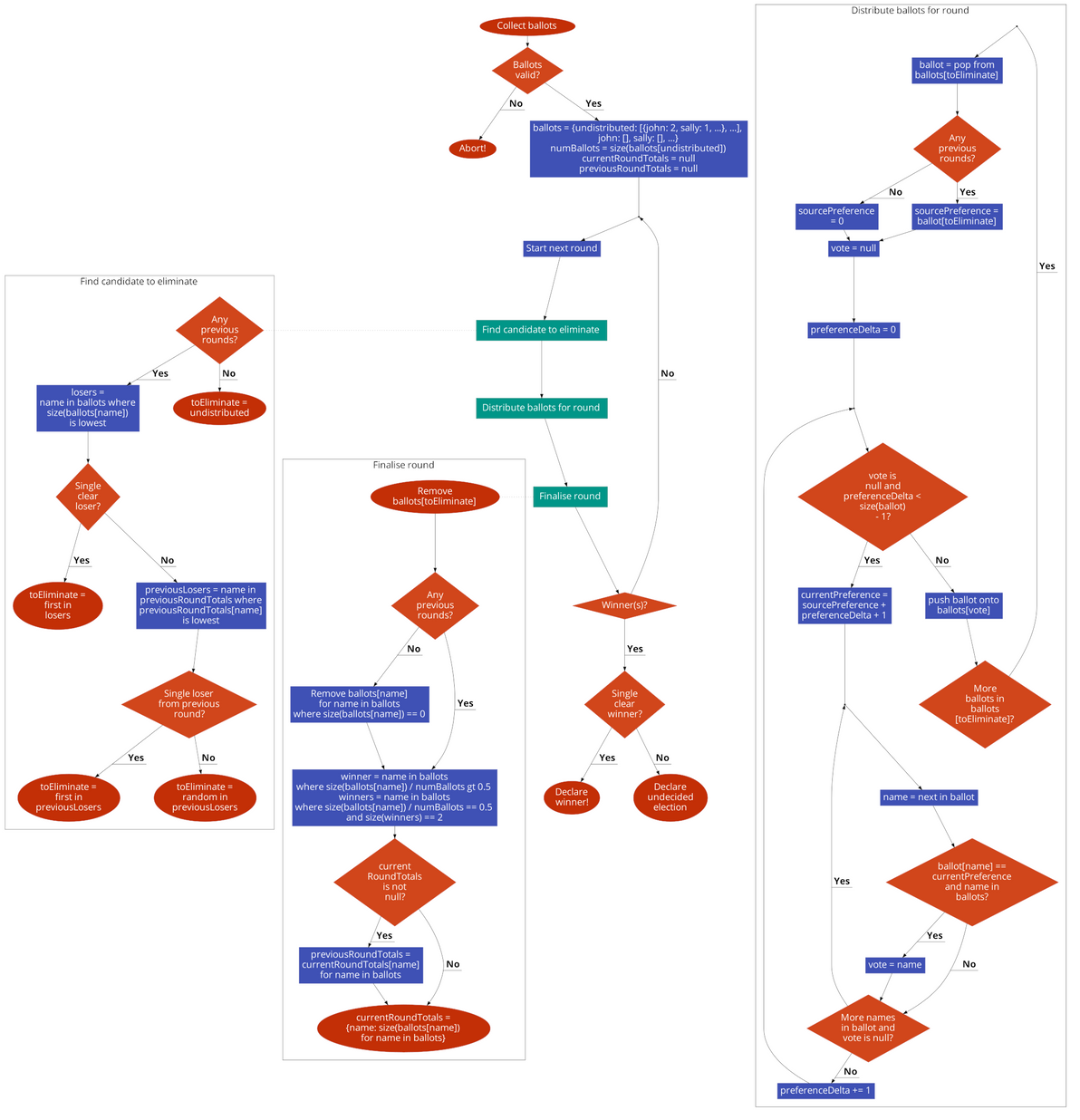

I hope that, by being an interactive, animated, round-by-round visualisation of the ballot distribution process, this simulation gives you a deeper understanding of how instant-runoff voting works.

The rules coded into the simulator are those used for the House of Representatives in Australian federal elections, as specified in the Commonwealth Electoral Act 1918 (Cth) s274.
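For a rough feel of what gets counted in each round, here's a minimal sketch of instant-runoff counting. It is not the simulator's actual code: the function name `instantRunoff` and the ballot format (an array of candidate names in preference order) are assumptions made for illustration, and a tie for last place is resolved arbitrarily rather than by the tie-break rules in the Act.

```javascript
// Illustrative sketch only. Each ballot is an array of candidate names,
// most-preferred first (an assumed format, not the simulator's CSV input).
function instantRunoff(ballots) {
  const remaining = new Set(ballots.flat());
  const rounds = [];

  while (remaining.size > 0) {
    // Count each ballot towards its highest-ranked non-eliminated candidate.
    const tally = new Map([...remaining].map(c => [c, 0]));
    let liveBallots = 0;
    for (const ballot of ballots) {
      const top = ballot.find(c => remaining.has(c));
      if (top !== undefined) {
        tally.set(top, tally.get(top) + 1);
        liveBallots += 1;
      }
    }
    rounds.push(Object.fromEntries(tally));

    // Sort by votes, highest first.
    const sorted = [...tally.entries()].sort((a, b) => b[1] - a[1]);
    const [leader, leaderVotes] = sorted[0];

    // An absolute majority of live ballots (or being the last one left) wins.
    if (leaderVotes * 2 > liveBallots || remaining.size === 1) {
      return { winner: leader, rounds };
    }

    // Otherwise eliminate the lowest-placed candidate and count again.
    // Simplification: ties for last are NOT broken according to the Act.
    const [loser] = sorted[sorted.length - 1];
    remaining.delete(loser);
  }

  return { winner: null, rounds }; // no ballots at all
}

const exampleBallots = [
  ...Array(4).fill(['A', 'B', 'C']),
  ...Array(3).fill(['B', 'C', 'A']),
  ...Array(2).fill(['C', 'B', 'A']),
];
console.log(instantRunoff(exampleBallots).winner); // prints "B"
```

In that example, A leads the first round with 4 of 9 votes, which is short of a majority. C is eliminated, C's two ballots flow to B, and B wins the second round 5 to 4.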

There are other tools around that do basically the same thing as this simulator. Kudos to the authors of those tools. However, they only output a text log or a text-based table; they don't provide any visualisation or animation of the vote-counting process. And they spit out the results for all rounds all at once, so they don't show (quite as clearly) how the results evolve from one round to the next.

Source code is all up on GitHub. It's coded in vanilla JS, with the help of the lovely Papa Parse library for CSV handling. I made a nice flowchart version of the code too.

With a federal election coming up, here in Australia, in just a few days' time, this simulator means there's now one less excuse for any of my fellow citizens to not know how the voting system works. And, in this election more than ever, it's vital that you properly understand why every preference matters, and how you can make every preference count.

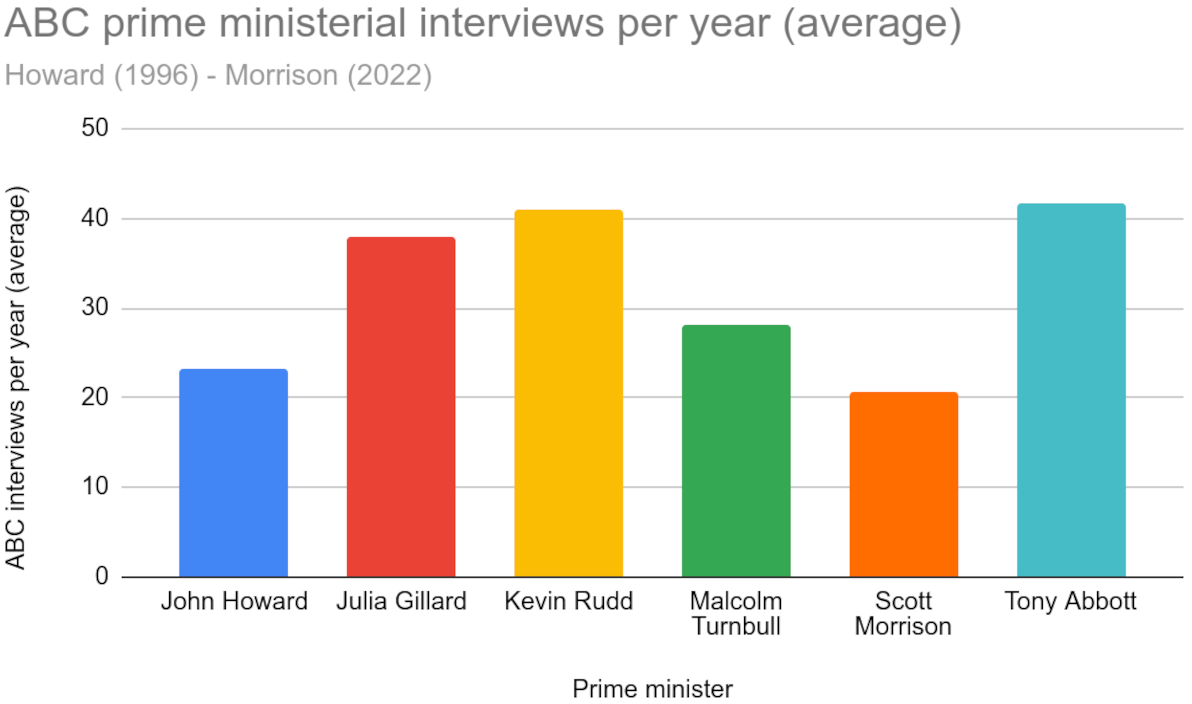

]]>I have also made the casual observation, over the last three years, that Morrison makes few appearances on Aunty in general, compared with the commercial alternatives, particularly Sky News (which I personally have never watched directly, and have no plans to, but I've seen plenty of clips of Morrison on Sky repeated on the ABC and elsewhere).

This led me to do some research, to find out: how often has Morrison taken part in ABC interviews, during his tenure so far as Prime Minister, compared with his predecessors? I compiled my findings, and this is what they show:

It's official: Morrison has, on average, taken part in fewer ABC TV and Radio interviews than any other Prime Minister in recent Australian history.

I hope you find my humble dataset useful. Apart from illustrating Morrison's disdain for the ABC, there are also tonnes of other interesting analyses that could be performed on the data, and tonnes of other conclusions that could be drawn from it.

My findings are hardly surprising, considering Morrison's flagrant preference for sensationalism, spin, and the far-right fringe. I leave it as an exercise to the reader, to draw from my dataset what conclusions you will, regarding the fate of the already Coalition-scarred ABC, should Morrison win a second term.

Update, 18 May 2022: check it out, this article has been re-published on Independent Australia!

]]>Nobody (except Indonesia!) is disputing that an awful lot of bad stuff has happened in Indonesian Papua over the last half-century or so, and that the vast majority of the blood is on the Indonesian government's hands. However, is it true that the Australian government is not only silent on, but also complicit in, said bad stuff?

Image source: YouTube

Note: in keeping with what appears to be the standard in English-language media, for the rest of this article I'll be referring to the whole western half of the island of New Guinea – that is, to both of the present-day Indonesian provinces of Papua and West Papua – collectively as "West Papua".

Grasberg mine

Let's start with one of the video's least controversial claims – one that's about a simple objective fact, and one that has nothing to do with Australia:

[Grasberg mine] … the biggest copper and gold mine in the world

Close enough

I had never heard of the Grasberg mine before. Just like I had never heard much in general about West Papua before – even though it's only about 200km from (the near-uninhabited northern tip of) Australia. Which I guess is due to the scant media coverage afforded to what has become a forgotten region.

Image source: Wikimedia Commons

Anyway, Grasberg is indeed big (it's the biggest mine in Indonesia), it's massively polluting, and it's extremely lucrative (both for US-based Freeport-McMoRan and for the Indonesian government).

Image source: The Guardian

Grasberg is actually the second-biggest gold mine in the world, based on total reserves, but it's a close second. And it's the fifth-biggest gold mine in the world, based on production. It's the tenth-biggest copper mine in the world. And Freeport-McMoRan is the third-biggest copper mining company in the world. Exact rankings vary by source and by year, but Grasberg ranks near the top consistently.

I declare this claim to be very close to the truth.

Accessory to abuses

we've [Australia] done everything we can to help our mates [Indonesian National Military] beat the living Fak-Fak out of those indigenous folks

Exaggerated

Woah, woah, woah! Whaaa…?

Yes, Australia has supplied the Indonesian military and the Indonesian police with training and equipment over many years. And yes, some of those trained personnel have gone on to commit human rights abuses in West Papua. And yes, there are calls for Australia to cease all support for the Indonesian military.

Image source: International Academics for West Papua

But. Are a significant number of Australian-trained Indonesian government personnel deployed in West Papua, compared with elsewhere in the vastness of Indonesia? We don't know (although it seems unlikely). Does Australia train Indonesian personnel in a manner that encourages violence towards civilians? No idea (but I should hope not). And does Australia have any control over what the Indonesian government does with the resources provided to it? Not really.

I agree that, considering the Indonesian military's track record of human rights abuses, it would probably be a good idea for Australia to stop resourcing it. The risk of Australia indirectly facilitating human rights abuses, in my opinion, outweighs the diplomatic and geopolitical benefits of neighbourly cooperation.

Nevertheless: Australia (as far as we know) has no boots on the ground in West Papua; (I have to reluctantly say that) Australia is not responsible for how the Indonesian military utilises the training and equipment that it has received; and there's insufficient evidence to link Australia's support of the Indonesian military to date, with goings-on in West Papua.

I declare this claim to be exaggerated.

Corporate plunder

so that our other mates [Rio Tinto, LG, BP, Freeport-McMoRan] can come in and start makin' the ching ching

Close enough

At the time that the video was made (2018), Rio Tinto owned a significant stake in the Grasberg mine, and it most certainly was "makin' the ching ching" from that stake. Although shortly after that, Rio Tinto sold all of its right to 40% of the mine's production, and is now completely divested of its interest in the enterprise. Rio Tinto is a British-Australian company, and is most definitely one of the Australian government's mates.

Freeport-McMoRan has, of course, been Grasberg's principal owner and operator for most of the mine's history, as well as the principal entity that has been raking in the mine's insane profits. The company has some business ventures in Australia, although its ties with the Australian economy, and therefore with the Australian government, appear to be quite modest.

BP is the main owner of the Tangguh gas field, which is probably the second-largest and second-most-lucrative (and second-most-polluting!) industrial enterprise in West Papua. BP is of course a British company, but it has a significant presence in Australia. LG appears to also be involved in Tangguh. LG is a Korean company, and it has modest ties to the Australian economy.

Image source: KBR

So, all of these companies could be considered "mates" of the Australian government (some more so than others). And all of them are, or until recently were, "makin' the ching ching" in West Papua.

I declare this claim to be very close to the truth.

Stopping independence

Remember when two Papuans [Clemens Runaweri and Willem Zonggonau] tried to flee to the UN to expose this bulls***? We [Australia] prevented them from ever getting there, by detaining them on Manus Island

Checks out

Well, no, I don't remember it, because – apart from the fact that it happened long before I was born – it's an incident that has scarcely ever been covered by the media (much like the lack of media coverage of West Papua in general). Nevertheless, it did happen, and it is documented:

In May 1969, two young West Papuan leaders named Clemens Runaweri and Willem Zonggonau attempted to board a plane in Port Moresby for New York so that they could sound the alarm at UN headquarters. At the request of the Indonesian government, Australian authorities detained them on Manus Island when their plane stopped to refuel, ensuring that West Papuan voices were silenced.

Source: ABC Radio National

After being briefly detained, the two men lived the rest of their lives in exile in Papua New Guinea. Zonggonau died in Sydney in 2006, where he and Runaweri were visiting, still campaigning to free their homeland until the end. Runaweri died in Port Moresby in 2011.

Interestingly, it also turns out that the detaining of these men, along with several hundred other West Papuans, in the late 1960s, was the little-known beginning of Australia's now-infamous use of Manus Island as a place to let refugees rot indefinitely.

Image source: The New York Times

I declare this claim to be 100% correct.

Training hitmen

We [Australia] helped train [at the Indonesia-Australia Defence Alumni Association (IKAHAN)] and arm those heroes [the hitmen who assassinated the Papuans' leader Theys Eluay in 2001]

Exaggerated

Theys Eluay was indeed the chairman of the Papua Presidium Council – he was even described as "Papua's would-be first president" – and his death in 2001 was indeed widely attributed to the Indonesian military.

There's no conclusive evidence that the soldiers who were found guilty of Eluay's murder (who were part of Kopassus, the Indonesian special forces), received any training from Australia. However, Australia has provided training to Kopassus over many years, including during the 1980s and 1990s. This co-operation has continued into more recent times, during which claims have been made that Kopassus is responsible for ongoing human rights abuses in Papua.

Image source: ABC

I don't know why IKAHAN was mentioned together with the 2001 murder of Eluay, because it wasn't founded until 2011, so one couldn't possibly have anything to do with the other. It's possible that Eluay's killers received Australian-backed training elsewhere, but not there. Similarly, it's possible that training undertaken at IKAHAN has contributed to other shameful incidents in West Papua, but not that one. Mentioning IKAHAN does nothing except conflate the facts.

In any case, I repeat, (I have to reluctantly say that) Australia is not responsible for how the Indonesian military utilises the training and equipment that it has received; and there's insufficient evidence to link Australia's support of the Indonesian military to date, with goings-on in West Papua.

I declare this claim to be exaggerated.

Shipments from Cairns

which [Grasberg mine] is serviced by massive shipments from Cairns. Cairns! The Aussie town supplying West Papua's Death Star with all its operational needs

"Citations needed"

This claim really came at me out of left field. So much so, that it was the main impetus for me penning this article as a fact check. Can it be true? Is the laid-back tourist town of Cairns really the source of regular shipments of supplies, to the industrial hellhole that is Grasberg?

Image source: Bulk Handling Review

I honestly don't know how TJM got their hands on this bit of intel, because there's barely any mention of it in any media, mainstream or otherwise. Clearly, this was an arrangement that all involved parties made a concerted effort to keep under the radar for many years.

In any case, yes, it appears to be true. Or, at least, it was true at the time that the video was published, and it had been true for about 45 years, up until that time. Then, in 2019, the shipping, and Freeport-McMoRan's presence in town, apparently disappeared from Cairns, presumably replaced by alternative logistics based in Indonesia (and presumably due to the Indonesian government having negotiated to make itself the majority owner of Grasberg shortly before that).

It makes sense logistically. Cairns is one of the closest fully-equipped ports to Grasberg, only slightly further away than Darwin. Much closer than Jakarta or any of the other big ports in the Indonesian heartland. And I can imagine that, for various economic and political reasons, it may well have been easier to supply Grasberg primarily from Australia rather than from elsewhere within Indonesia.

I would consider that this claim fully checks out, if I could find more sources to corroborate it. However, there's virtually no word of it in any mainstream media; and the sources that do mention it are old and of uncertain reliability.

I declare this claim to be "citations needed".

Verdict

Australia is proud to continue its fine tradition of complicity in West Papua

Exaggerated

In conclusion, I declare that the video "Honest Government Ad: Visit West Papua", on the whole, checks out. In particular, its allegation of the Australian government being economically complicit in the large-scale corporate plunder and environmental devastation of West Papua – by way of it having significant ties with many of the multinational companies operating there – is spot-on.

But. Regarding the Australian government being militarily complicit in human rights abuses in West Papua, I consider that to be a stronger allegation than is warranted. Providing training and equipment to the Indonesian military, and then turning a blind eye to the Indonesian military's actions, is deplorable, to be sure. Australia being apathetic towards human rights abuses would be a valid allegation.

To be "complicit", in my opinion, there would have to be Australian personnel on the ground, actively committing abuses alongside Indonesian personnel, or actively aiding and abetting such abuses.

Don't get me wrong, I most certainly am not defending Australia as the patron saint of West Papua, and I'm not absolving Australia of any and all responsibility for human rights abuses in West Papua. I'm just saying that TJM got a bit carried away with the level of blame they apportioned to Australia on that front.

Image source: new mandala

Also, bear in mind that the only reason I'm "going soft" on Australia here, is due to a lack of evidence of Australia's direct involvement militarily in West Papua. It's quite possible that there is indeed a more direct involvement, but that all evidence of it has been suppressed, both by Indonesia and by Australia.

And hey, I'm trying to play devil's advocate in this here article, which means that I'm giving TJM more of a grilling than I otherwise would, were I to simply preach my unadulterated opinion.

I'd like to wholeheartedly thank TJM for producing this video (along with all their other videos). Despite me giving them a hard time here, the video is – as TJM themselves tongue-in-cheek say – "surprisingly honest!". It educated me immensely, and I hope it educates many more folks just as immensely, as to the lamentable goings-on, right on Australia's doorstep, about which we Aussies (not to mention the rest of the world) hear unacceptably little.

The Australian government is, at the very least, one of those responsible for maintaining the status quo in West Papua. And "business as usual" over there clearly includes a generous dollop of atrocities.

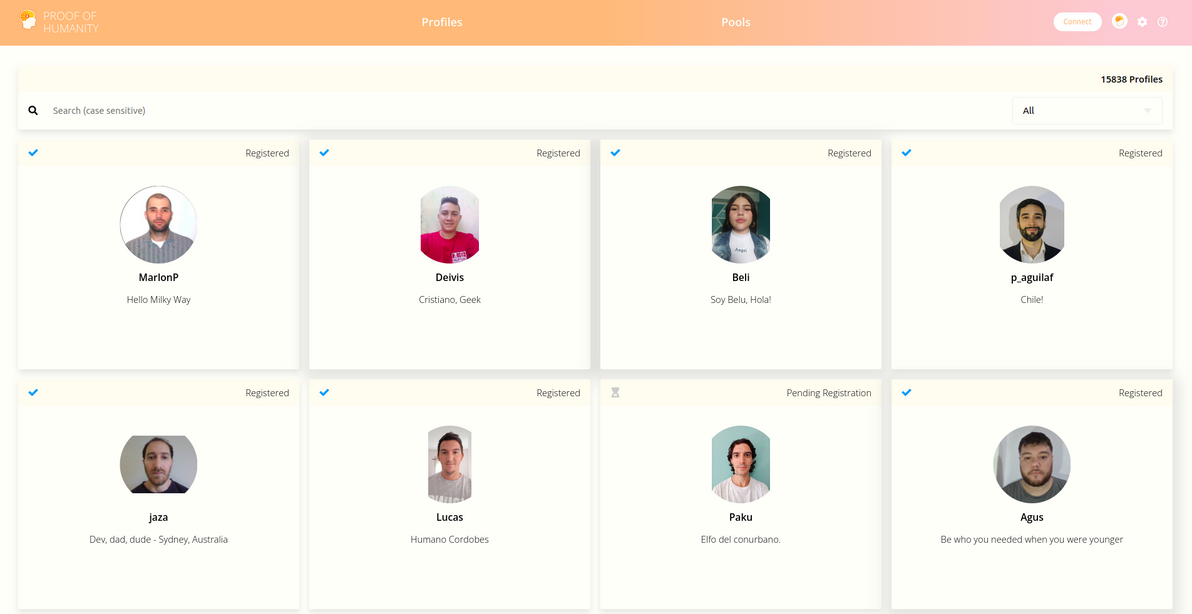

]]>Just for fun, I registered myself, so I'm now part of that tiny minority who, according to PoH, are verified humans! (Sorry, I guess the rest of you are just an illusion).

This is a brief musing on the PoH project: its background story, the people behind it, the technology powering it, the socio-economic philosophy behind it, the challenges it's facing, whether it stacks up, and what I think lies ahead.

The story

Most people think of Proof of Humanity in terms of its technology. That is, as a cryptocurrency thing, because it's all built on the Ethereum blockchain. So, yes, it's a crypto project. But, unlike almost every other crypto project, it has little to do with money (although some critics disagree), and everything to do with democracy.

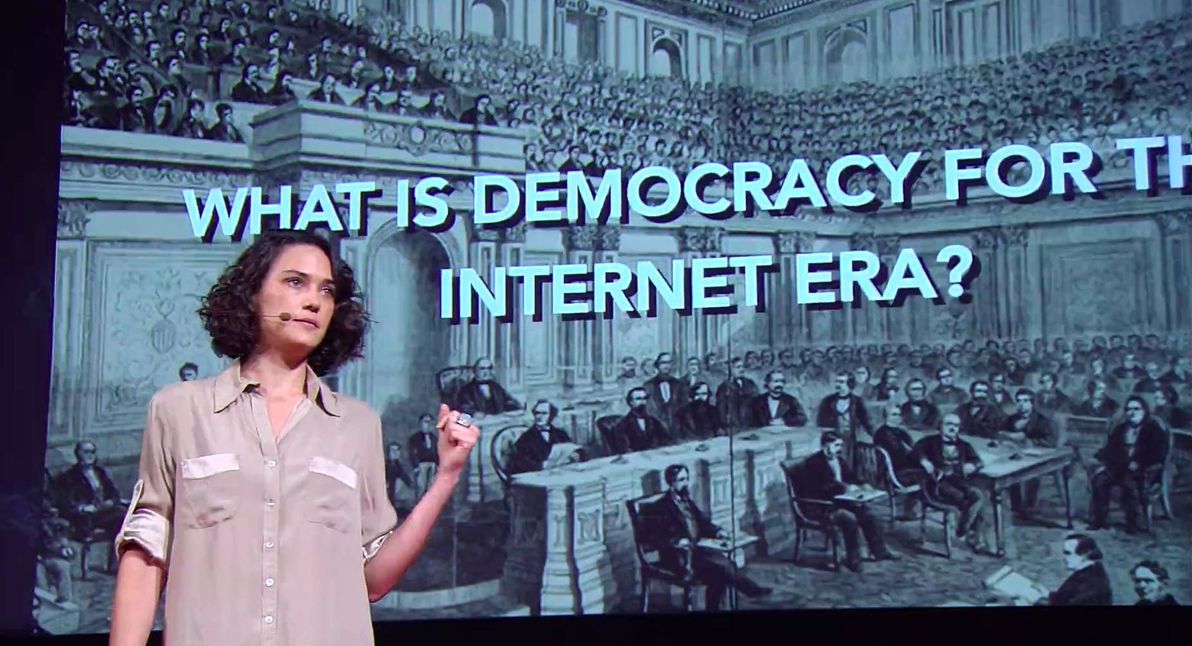

The story begins in 2012, in Buenos Aires, Argentina (a part of the world that I know well and that's close to my heart), when an online voting platform called DemocracyOS was built, and when Pia Mancini founded a new political party called Partido de la Red, which promised it would vote in congress the way constituents told it to vote, law by law (similar to many pirate parties around the world). In 2014, Pia presented all this in a TED talk.

Image source: TED

DemocracyOS – which, by the way, is still alive and kicking – has nothing to do with crypto. It's just a simple voting app. Nor does it handle identity in any innovative way. The pilot in Argentina just relied on voters providing their official government-issued ID documents in order to vote. DemocracyOS is about enabling direct democracy, giving more people a voice, and fighting corruption.

In 2015, Pia Mancini and her partner Santiago Siri – along with Herb Stephens – founded Democracy Earth, which is when crypto entered the mix. The foundation's seminal paper "The Social Smart Contract" laid down (in exhaustive detail) the technical design for a new voting platform based on blockchain. The original plan was for the whole thing to be built on Bitcoin (Ethereum was brand-new at the time).

(Side note: the Democracy Earth paper was actually the thing that I stumbled across, while googling stuff related to direct democracy and liquid democracy. It was only that paper, that then led me to discover Proof of Humanity.)

To make the voting platform feasible, the paper argued, a decentralised "Proof of Identity" solution was needed – the design that the paper spells out for such a system, is clearly the "first draft" of what would later become Proof of Humanity. The paper also presents the spec for a universal basic income being paid to everyone on the platform, which is one of the key features of PoH today.

When Pia and Santiago welcomed their daughter Roma Siri into the world in 2015, they gave her the world's first ever "blockchain valid birth certificate" (using the Bitcoin blockchain). The declaration stated verbally in the video, and the display of the blockchain address in the visual recording, are almost exactly the same as the declaration and the public key that are present in the thousands of PoH registration videos to date.

The original plan was for Democracy Earth itself to build a blockchain-based voting platform. Which they did: it was called Sovereign, and it launched in 2016. Whereas DemocracyOS enables direct democracy, Sovereign takes things a step further, and enables liquid democracy.

Fast-forward to 2018: Kleros, a "decentralised court", was founded by Federico Ast (another Argentinian) and Clément Lesaege (a Frenchman), all built on Ethereum. Kleros has different aims to Democracy Earth, although it describes its mission as "access to justice and individual freedom". Unlike Democracy Earth, Kleros is not a foundation, although it's not a traditional for-profit company either.

Image source: Twitter

And fast-forward again to 2021. Proof of Humanity is launched, as an Ethereum Dapp ("decentralised app"). Officially, PoH is independent of any "real-life" people or organisations, and is purely governed by a DAO ("decentralised autonomous organisation").

In practice, the launch announcements are all published by Kleros; the organisations behind PoH are recognised as being Kleros and Democracy Earth; Clément Lesaege and Santiago Siri are credited as the architects of PoH; and the PoH DAO's inaugural board members are Santiago Siri, Herb Stephens, Clément Lesaege, and Federico Ast.

The main selling point that PoH has pitched so far, is that everyone who successfully registers receives a stream of UBI tokens, which will (apparently!) reduce world poverty and global inequality.

PoH participants are also able to vote on "HIPs" (Humanity Improvement Proposals) – i.e. proposed changes to the PoH smart contract, so basically, equivalent to voting on pull requests for the project's main codebase – I've already cast my first vote. Voting is powered by Snapshot, which appears to be the successor platform to Sovereign – but I'm waiting for someone to reply to my question about that.

PoH is still in its infancy. It doesn't even have a Wikipedia page yet. I wrote a draft Proof of Humanity Wikipedia page, but, despite a lengthy argument with the moderators, I wasn't able to get it published, because apparently there's still insufficient reliable independent coverage of the project. You're welcome to add more sources, to try and satisfy the pedantic gatekeepers over there.

Challenges

By far the biggest challenge to the growth and the success of Proof of Humanity right now, is the exorbitant transaction fees (known as "gas fees") charged by the Ethereum network. Considering that its audience is (ostensibly) every human on the planet, you'd think that registering with PoH would be free, or at least very cheap. Think again!

You have to pay a deposit, which is currently 0.125 ETH (approximately $400 USD), and which is refunded once your profile is successfully verified (and believe me or not, but I'm telling you from personal experience, they do refund it). That amount isn't trivial, even for a privileged first-worlder like myself.

But you also, in my personal experience, have to pay at least another 10% on top of that (i.e. roughly 0.0125 ETH, or $40 USD), in non-refundable gas fees, to cover the estimated processing power required to execute the PoH smart contract for your profile. Plus another 10% or so (could well be more, depending on your circumstances) if you need to exchange fiat money for Ethereum, and back again, in order to pay the deposit and to recover it later.

Image source: Obscure Train Movies

So, a $400 USD deposit, which you lose if your profile is challenged (and your appeal fails), and which takes at least a week to get refunded to you. Plus $80 USD in fees. Plus it's all denominated in a highly volatile cryptocurrency, whose value could plummet at any time. That's a pretty steep price tag, for participation in "a cool experiment" that has no real-world utility right now. Would I spend that money and effort again, to renew my PoH profile when it expires in two years' time? Unless it gains some real-world utility, probably not.
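To make that arithmetic explicit, here's a tiny sketch that adds up the figures quoted above. The function name and its parameters are made up for illustration, and the two 10% rates are just the rough estimates from my own experience, not anything defined by PoH or Ethereum.

```javascript
// Illustrative only: adds up the approximate costs quoted in this article.
function pohRegistrationCost({ depositUsd, gasPercent, exchangePercent }) {
  const gasUsd = depositUsd * gasPercent / 100;           // non-refundable gas fees
  const exchangeUsd = depositUsd * exchangePercent / 100; // fiat <-> ETH conversion
  return {
    upfrontUsd: depositUsd + gasUsd + exchangeUsd, // what you need to have on hand
    nonRefundableUsd: gasUsd + exchangeUsd,        // what you never get back
  };
}

const pohCost = pohRegistrationCost({ depositUsd: 400, gasPercent: 10, exchangePercent: 10 });
console.log(pohCost); // { upfrontUsd: 480, nonRefundableUsd: 80 }
```

Bear in mind that this ignores the volatility of ETH itself, which can easily swing the real cost either way.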

Also a major challenge, is the question of how to give the UBI tokens any real value. UBI can be traded on the open market (although the only exchange that actually allows it to be bought and sold right now is the Argentinian Ripio). When Proof of Humanity launched in early 2021, 1 UBI was valued at approximately $1 USD. Since then, its value has consistently declined, and 1 UBI is now valued at approximately $0.04 USD.

UBI is highly inflationary by design. Every verified PoH profile results in 1 UBI being minted per hour. So every time the number of verified PoH profiles doubles, the rate of UBI minting doubles. And currently there's zero demand for UBI, because there's nothing useful that you can do with it (including investing or speculating in it!). The PoH community is actively discussing possible solutions, but there's no silver bullet.
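
To make the "doubling" point concrete, here's a tiny sketch of the minting arithmetic. The 1 UBI per profile per hour rate is the one stated above; the profile counts are hypothetical round numbers, not real PoH statistics.

```python
HOURS_PER_YEAR = 24 * 365
UBI_PER_PROFILE_PER_HOUR = 1  # minting rate stated in this post


def ubi_minted_per_hour(verified_profiles):
    """Total UBI minted each hour across all verified profiles."""
    return verified_profiles * UBI_PER_PROFILE_PER_HOUR


def ubi_minted_per_year(verified_profiles):
    """Total UBI minted over a (non-leap) year at a constant profile count."""
    return ubi_minted_per_hour(verified_profiles) * HOURS_PER_YEAR


# Hypothetical profile counts, purely for illustration.
for profiles in (10_000, 20_000, 40_000):
    print(profiles, ubi_minted_per_hour(profiles), ubi_minted_per_year(profiles))
```

The supply growth is linear in the number of profiles, so unless demand for UBI grows at least as fast as registrations do, the downward pressure on its price only gets stronger.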

To top it all off, it's still not clear whether or not PoH will live up to its purported aim, which is to create a Sybil-proof list of humans. The hypothesis underpinning it all, is that a video submission – featuring visual facial movement, and verbal narration – is too high a bar for AI to pass. Deepfake technology, while still in its infancy, is improving rapidly. PoH is betting on Deepfake's capability plateauing below that bar. Time will tell how that gamble unfolds.

PoH is also placing enormous trust in each member of the community of already-verified humans, to vet new profile submissions as representing real, unique humans. It's a radical and unproven experiment. That level of trust has traditionally been reserved for nation-states and their bureaucracies. There are defences built in to PoH, but time will tell how resilient they are.

Musings

I'm not a crypto guy. The ETH that I bought in order to pay the PoH deposit, is my first ever cryptocurrency holding (and, in keeping with conservative mainstream advice, it's a modest amount, not more than I can afford to lose).

My interest in PoH is from a democratic empowerment point of view, not from a crypto nor a financial point of view. The founders of PoH claim to have the same underlying interest at heart. If that's so, then I'm afraid I don't really understand why they built it all on top of Ethereum, which is, at the end of the day, a financial instrument.

Image source: The University of Queensland

Sure, the PoH design relies on hash proofs, and it requires some kind of blockchain. But they could have built a new blockchain specifically for PoH, one that's not a financial instrument, and one that's completely free as in beer. Instead, they've built a system that's coupled to the monetary value of, at the mercy of the monetary fees of, and vulnerable to the monetary fraud / scams of, the underlying financial network.

Regarding UBI: I think I'm a fan of it – I more-or-less wrote a universal basic income proposal myself, nine years ago. Not unlike what PoH has done, I too proposed that a UBI should be issued in a new currency that's not controlled by any sovereign nation-state (although what I had in mind was that it be governed by some UN-like body, not by something as radical as a DAO).

However, I can't say I particularly like the way that "self-sovereignty" and UBI have been conflated in PoH. I would have thought that the most important use case for PoH would be democratic voting, and I feel that the whole UBI thing is a massive distraction from that. What's more, many of the people who have registered with PoH to date, have done so hoping to make a quick buck with UBI, and is that really the group of people we want, as the pioneers of PoH? (Plus, I hate to break it to you, all you folks banking on UBI, but you're going to be disappointed.)

So, do I think PoH "stacks up"? Well, it's not a scam, although clearly all the project's founders are heavily invested in crypto, and do stand to gain from the success of anything crypto-related. Call me naïve, but I think the people behind PoH are pure of heart, and are genuinely trying to make the world a better place. I can't say I agree with all their theories, but I applaud their efforts.

Image source: Meme Creator

And do I think PoH will succeed? If it can overcome the critical challenges that it's currently facing, then it stands some chance of one day reaching a critical mass, and of proving itself at scale. Although I think it's much more likely that it will remain a niche enclave. I'd be pleasantly surprised if PoH reaches 5 million users, which would be about 0.1% of internet-connected humanity, still a far cry from World Domination™.

Say what you will about it, Proof of Humanity is a novel, fascinating idea. Regardless of whether it ultimately succeeds in its aims, and regardless of whether it even can or should do so, I think it's an experiment worth conducting.

]]>

I'm not overly optimistic, here in what is one of the safest Liberal seats in Australia. But you never know, this may finally be the year when the winds of change rustle the verdant treescape of Sydney's leafy North Shore.

]]>But, as of now, it's with bittersweet-ness that I declare, that that era in my life has come to a close. No more (personal) server that I wholly or partially manage. No more SSH'ing in. No more updating Linux kernel / packages. No more Apache / Nginx setup. No more MySQL / PostgreSQL administration. No more SSL certificates to renew. No more CPU / RAM usage to monitor.

Image source: Meme Generator

In its place, I've taken the plunge and fully embraced SaaS. In particular, I've converted most of my personal web sites, and most of the other web sites under my purview, to be statically generated, and to be hosted on Netlify. I've also moved various backups to S3 buckets, and I've moved various Git repos to GitHub.

And so, you may lament that I'm yet one more netizen who has Less Power™ and less control. Yet another lost soul, entrusting these important things to the corporate overlords. And you have a point. But the case against SaaS is one that's getting harder to justify with each passing year. My new setup is (almost entirely) free (as in beer). And it's highly available, and lightning-fast, and secure out-of-the-box. And sysadmin is now Somebody Else's Problem. And the amount of ownership and control that I retain, is good enough for me.

The number one thing that I loathed about managing my own VPS, was security. A fully-fledged Linux instance, exposed to the public Internet 24/7, is a big responsibility. There are plenty of attack vectors: SSH credentials compromise; inadequate firewall setup; HTTP or other DDoS'ing; web application-level vulnerabilities (SQL injection, XSS, CSRF, etc); and un-patched system-level vulnerabilities (Log4j, Heartbleed, Shellshock, etc). Unless you're an experienced full-time security specialist, and you're someone with time to spare (and I'm neither of those things), there's no way you'll ever be on top of all that.

Image source: TAG Cyber

With the new setup, I still have some responsibility for security, but only the level of responsibility that any layman has for any managed online service. That is, responsibility for my own credentials, by way of a secure password, which is (wherever possible) complemented with robust 2FA. And, for GitHub, keeping my private SSH key safe (same goes for AWS secret tokens for API access). That's it!

I was also never happy with the level of uptime guarantee or load handling offered by a VPS. If there was a physical hardware fault, or a data centre networking fault, my server and everything hosted on it could easily become unreachable (fortunately this seldom happened to me, thanks to the fine folks at BuyVM). Or if there was a sudden spike in traffic (malicious or not), my server's CPU / RAM could easily get maxed out, and the server could become unresponsive. Even if all my sites had been static when they were VPS-hosted, these would still have been constant risks.

Image source: YouTube

With the new setup, both uptime and load have a much higher guarantee level, as my sites are now all being served by a CDN, either CloudFront or Netlify's CDN (which is similar enough to CloudFront). Pretty much the most highly available, highly resilient services on the planet. (I could have hooked up CloudFront, or another CDN, to my old VPS, but there would have been non-trivial work involved, particularly for dynamic content; whereas, for S3 / CloudFront, or for Netlify, the CDN Just Works™).

And then there's cost. I had quite a chunky 4GB RAM VPS for the last few years, which was costing me USD$15 / month. Admittedly, that was a beefier box than I really needed, although I had more intensive apps running on it, several years ago, than I've had running over the past year or two. And I felt that it was worth paying a bit extra, if it meant a generous buffer against sudden traffic spikes that might gobble up resources.

Image source: The Register

Whereas now, my main web site hosting service, Netlify, is 100% free! (There are numerous premium bells and whistles that Netlify offers, but I don't need them). And my main code hosting service, GitHub, is 100% free too. And AWS is currently costing me less than USD$1 / month (with most of that being S3 storage fees for my private photo collection, which I never stored on my old VPS, and for which I used to pay Flickr quite a bit more money than that anyway). So I consider the whole new setup to be virtually free.

Apart from the security burden, sysadmin is simply never something that I've enjoyed. I use Ubuntu exclusively as my desktop OS these days, and I've managed a number of different Linux server environments (of various flavours, most commonly Ubuntu) over the years, so I've picked up more than a thing or two when it comes to Linux sysadmin. However, I've learnt what I have, out of necessity, and purely as a means to an end. I'm a dev, and what I actually enjoy doing, and what I try to spend most of my time doing, is dev work. Hosting everything in SaaS land, rather than on a VPS, lets me focus on just that.

In terms of ownership, like I said, I feel that my new setup is good enough. In particular, even though the code and the content for my sites now have their source of truth in GitHub, it's Git, so it's completely exportable and sync-able: I can pull those repos to my local machine and to at-home backups as often as I want. The same goes for my files for which the source of truth is now S3, which are also completely exportable and sync-able. And in terms of control, obviously Netlify / S3 / CloudFront don't give me as many knobs and levers as things like Nginx or gunicorn, but they give me everything that I actually need.

Image source: Wikimedia Commons

Purists would argue that I've never even done real self-hosting, that if you're serious about ownership and control, then you host on bare metal that's physically located in your home, and that there isn't much difference between VPS- and SaaS-based hosting anyway. And that's true: a VPS is running on hardware that belongs to some company, in a data centre that belongs to some company, only accessible to you via network infrastructure that belongs to many companies. So I was already a heretic, now I've slipped even deeper into the inferno. So shoot me.

20-30 years ago, deploying stuff online required your own physical servers. 10-20 years ago, deploying stuff online required at least your own virtual servers. It's 2022, and I'm here to tell you, that deploying stuff online purely using SaaS / IaaS offerings is an option, and it's often the quickest, the cheapest, and the best-quality option (although can't you only ever pick two of those? hahaha), and it quite possibly should be your go-to option.

]]>

In a nutshell, the way it works is as follows:

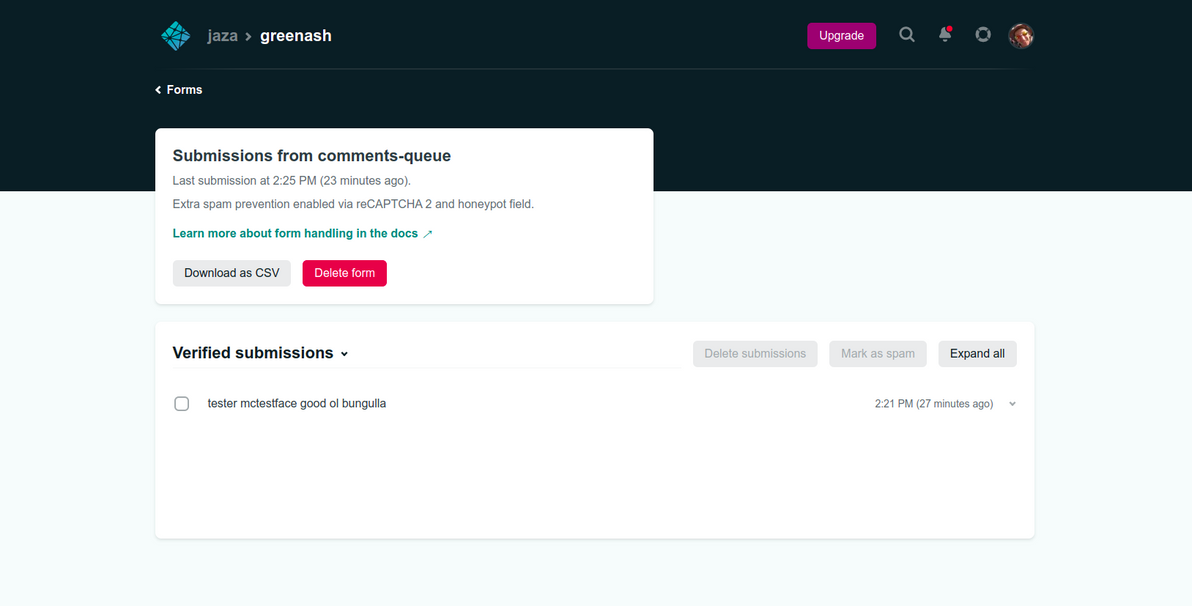

- The user submits their comment via a simple HTML form powered by Netlify Forms

- The submission gets saved to the Netlify Forms data store

- The submission-created event handler sends the site admin (me!) an email containing the submission data and a URL

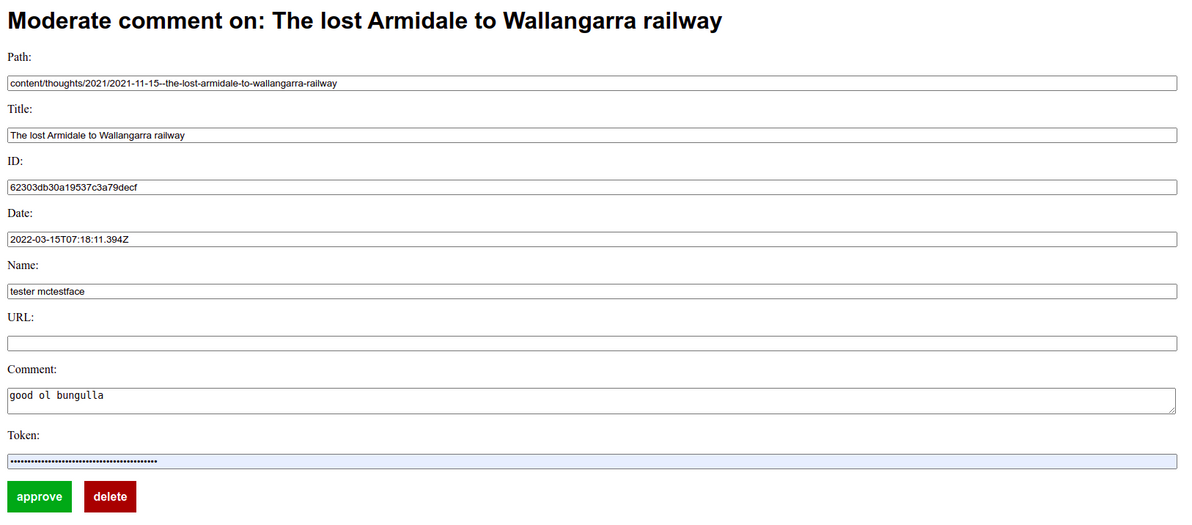

- The site admin opens the URL, which displays an HTML form populated with the submission data

- After eyeballing the submission data, the site admin enters a secret token to authenticate

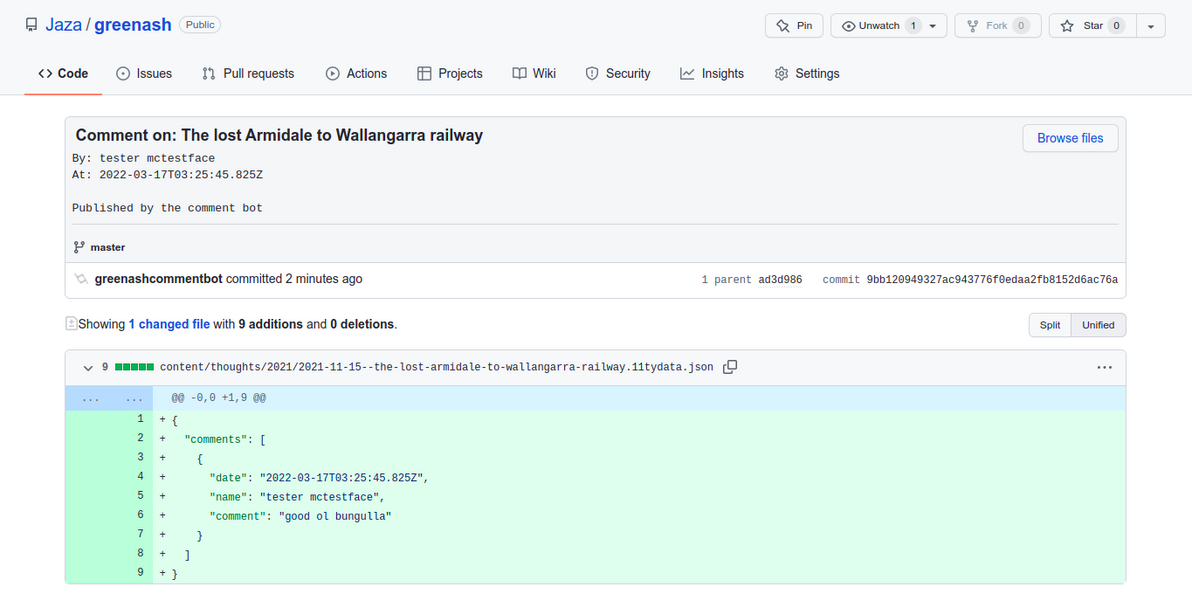

- The site admin clicks "Approve", which writes the new comment to a JSON file, pushes the code change to the site's repo via the GitHub Contents API, and deletes the submission from the data store via the Netlify Forms API (or the site admin clicks "Delete", in which case it just deletes the submission from the data store)

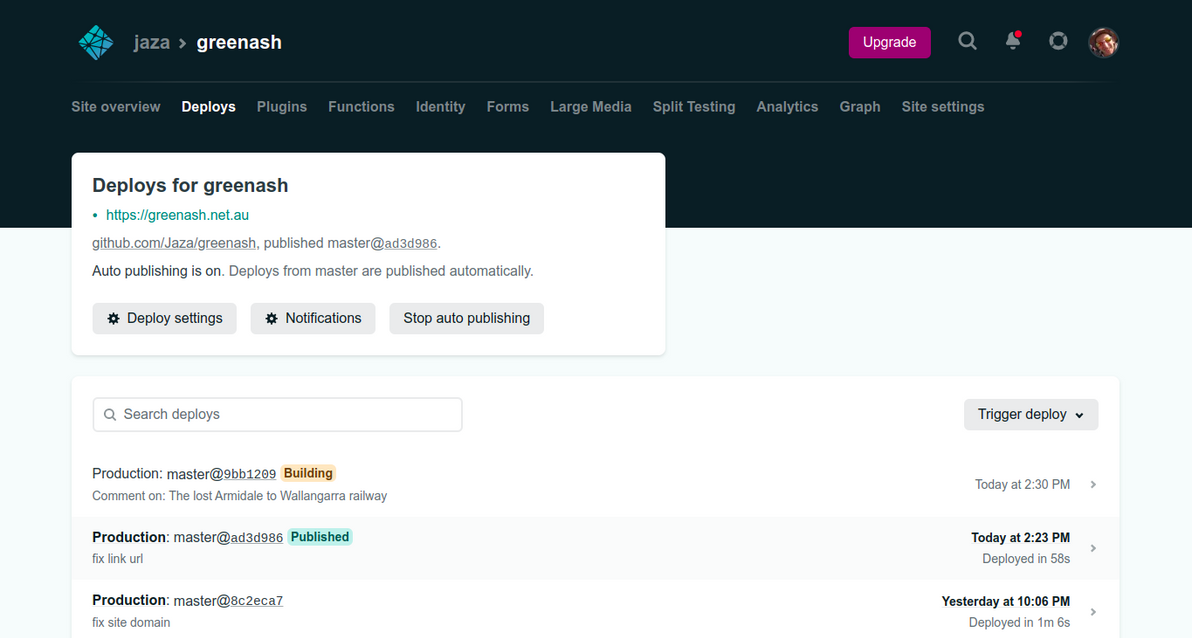

- Netlify rebuilds the site in response to a GitHub code change as usual, thus publishing the comment
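
The "Approve" step above can be roughly sketched as follows. This is a simplified illustration and not the actual code behind this site: it's in Python purely for readability (the real handlers would be Netlify Functions), it only builds the request descriptions rather than sending them, and the submission field names, the commit message and the helper names are all assumptions. The two endpoints are GitHub's "create or update file contents" endpoint and Netlify's delete-submission endpoint, as I understand them.

```python
import base64
import hmac
import json

GITHUB_API = "https://api.github.com"
NETLIFY_API = "https://api.netlify.com/api/v1"


def is_authorised(supplied_token, expected_token):
    """Compare the admin's secret token in constant time."""
    return hmac.compare_digest(
        supplied_token.encode("utf-8"), expected_token.encode("utf-8")
    )


def append_comment(existing_comments, submission):
    """Return a new comment list with the approved submission added.

    The field names are hypothetical; use whatever your form collects.
    """
    comment = {key: submission[key] for key in ("name", "comment", "created_at")}
    return existing_comments + [comment]


def github_update_request(owner, repo, path, comments, current_sha, token):
    """Describe the PUT that commits the updated JSON file to the repo."""
    body = json.dumps(comments, indent=2).encode("utf-8")
    return {
        "method": "PUT",
        "url": f"{GITHUB_API}/repos/{owner}/{repo}/contents/{path}",
        "headers": {
            "Authorization": f"Bearer {token}",
            "Accept": "application/vnd.github+json",
        },
        "json": {
            "message": "Add approved comment",
            # The Contents API expects the file body base64-encoded.
            "content": base64.b64encode(body).decode("ascii"),
            # Blob SHA of the file being replaced (required for updates).
            "sha": current_sha,
        },
    }


def netlify_delete_request(submission_id, token):
    """Describe the DELETE that removes the submission from the data store."""
    return {
        "method": "DELETE",
        "url": f"{NETLIFY_API}/submissions/{submission_id}",
        "headers": {"Authorization": f"Bearer {token}"},
    }
```

The "Delete" button would skip the GitHub request and only issue the Netlify one. In both cases, the secret token check should happen before anything else does.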

The initial form submission is basically handled for me, by Netlify Forms. The bit where I had to write code only begins at the submission-created event handler. I could have POSTed form submissions directly to a serverless function, and that would have allowed me a lot more usage for free. Netlify Forms is a premium product, with a not-particularly-generous free tier of only 100 (non-spam) submissions per site per month. However, I'd rather use it, and live with its limits, because:

- It has solid built-in spam protection, and defence against spam is something that was my problem for nearly the past 20 years, and I'd really really like for it to be Somebody Else's Problem from now on

- It has its own data store of submissions. I don't strictly need that (because I'm emailing myself each submission), but I consider it really nice to have, in case the email notifications don't reach me for any reason (and I have many years of experience with unreliable email delivery). It would also be a pain (and totally not worth it) to build myself in a serverless way: I'd need something like DynamoDB, API Gateway, and various Lambdas, and it would be a whole project in itself

- I can interact with that data store via a nice API

- I can review spam submissions in the Netlify Forms UI (which is good, because I don't get notified of them, so otherwise I'd have no visibility over them)