The script is a simple wrapper of the SciPy floyd_warshall function, which in turn implements the Floyd-Warshall Algorithm. Hope you find it useful for all your directed (or undirected) weighted graph needs.

Given an input CSV of the following graph edges:

point_a,point_b,cost

a,b,5

b,c,8

c,d,23

d,e,6When the script is called as follows:

floyd-warshall-csv-generator \

/path/to/input_data.csv \

--vertex-i-column-name point_a \

--vertex-j-column-name point_b \

--weight-column-name cost \

--no-directed \

--max-weight 35It generates an output CSV that looks like this:

point_a,point_b,cost

a,b,5.0

a,c,13.0

b,c,8.0

b,d,31.0

c,d,23.0

c,e,29.0

d,e,6.0That is, it generates all the possible (indirect) paths from one point to all other points, based on the (direct) paths that are already known, with duplicate (undirected) paths filtered out, and with paths whose cost is more than max-weight filtered out.

I wrote this script in order to generate the "all edges" data that's shown in the World Locality Transit Graph, which I'll also be blogging about real soon. Let me know if you put this script to any other interesting uses!

]]>

Image source: The Guardian

Let me start by lauding FastAPI's excellent documentation. Having a track record of rock-solid documentation, was (and still is!) – in my opinion – Django's most impressive achievement, and I'm pleased to see that it's also becoming Django's most enduring legacy. FastAPI, like Django, includes docs changes together with code changes in a single (these days called) pull request; it clearly documents that certain features are deprecated; and its docs often go beyond what is strictly required, by including end-to-end instructions for integrating with various third-party tools and services.

FastAPI's docs raise the bar further still, with more than a dash of humour in many sections, and with a frequent sprinkling of emojis as standard fare. That latter convention I have some reservations about – call me old-fashioned, but you could say that emoji-filled docs is unprofessional and is a distraction. However, they seem to enhance rather than detract from overall quality; and, you know what, they put a non-emoji real-life smile on my face. So, they get my tick of approval.

FastAPI more-or-less sits in the Flask camp of being a "microframework", in that it doesn't include an ORM, a template engine, or various other things that Django has always advertised as being part of its "batteries included" philosophy. But, on the other hand, it's more in the Django camp of being highly opinionated, and of consciously including things with which it wants a hassle-free experience. Most notably, it includes Swagger UI and Redoc out-of-the-box. I personally had quite a painful experience generating Swagger docs in Flask, back in the day; and I've been tremendously pleased with how API doc generation Just Works™ in FastAPI.

Much like with Flask, being a microframework means that FastAPI very much stands on the shoulders of giants. Just as Flask is a thin wrapper on top of Werkzeug, with the latter providing all things WSGI; so too is FastAPI a thin wrapper on top of Starlette, with the latter providing all things ASGI. FastAPI also heavily depends on Pydantic for data schemas / validation, for strongly-typed superpowers, for settings handling, and for all things JSON. I think it's fair to say that Pydantic is FastAPI's secret sauce.

My use of FastAPI so far has been rather unusual, in that I've been building apps that primarily talk to an Oracle database (and, indeed, this is unusual for Python dev more generally). I started out by depending on the (now-deprecated) cx_Oracle library, and I've recently switched to its successor python-oracledb. I was pleased to see that the fine folks at Oracle recently released full async support for python-oracledb, which I'm now taking full advantage of in the context of FastAPI. I wrote a little library called fastapi-oracle which I'm using as a bit of glue code, and I hope it's of use to anyone else out there who needs to marry those two particular bits of tech together.

There has been a not-insignificant amount of chit-chat on the interwebz lately, voicing concern that FastAPI is a one-man show (with its BDFL @tiangolo showing no intention of that changing anytime soon), and that the FastAPI issue and pull request queues receive insufficient TLC. Based on my experience so far, I'm not too concerned about this. It is, generally speaking, not ideal if a project has a bus factor of 1, and if support requests and bug fixes are left to rot.

However, in my opinion, the code and the documentation of FastAPI are both high-quality and highly-consistent, and I appreciate that this is largely thanks to @tiangolo continuing to personally oversee every small change, and that loosening the reins would mean a high risk of that deteriorating. And, speaking of quality, I personally have yet to uncover any bugs either in FastAPI or its core dependencies (which I'm pleasantly surprised by, considering how heavily I've been using it) – it would appear that the items languishing in the queue are lower priority, and it would appear that @tiangolo is on top of critical bugs as they arise.

In summary, I'm enjoying coding with FastAPI, I feel like it's a great fit for building Python web apps in 2024, and it will continue to be my Python framework of choice for the foreseeable future.

]]>There are various possible solutions that can accomplish this, for example: a celery task queue, an event scheduler, and a synchronised / threaded queue. However, those are all fairly heavy solutions to this problem, because we only need a queue that runs inside one thread, and that lives for the duration of a single DB transaction (and therefore also only for a single request).

To solve this problem, I implemented a very lightweight function queue, where each queue is a deque instance, that lives inside flask.g, and that is therefore available for the duration of a given request context (or app context).

The code

The whole implementation really just consists of this one function:

from collections import deque

from flask import g

def queue_and_delayed_execute(

queue_key, session_hash, func_to_enqueue,

func_to_enqueue_ctx=None, is_time_to_execute_funcs=False):

"""Add a function to a queue, then execute the funcs now or later.

Creates a unique deque() queue for each queue_key / session_hash

combination, and stores the queue in flask.g. The idea is that

queue_key is some meaningful identifier for the functions in the

queue (e.g. 'banana_masher_queue'), and that session_hash is some

identifier that's guaranteed to be unique, in the case of there

being multiple queues for the same queue_key at the same time (e.g.

if there's a one-to-one mapping between a queue and a SQLAlchemy

transaction, then hash(db.session) is a suitable value to pass in

for session_hash).

Since flask.g only stores data for the lifetime of the current

request (or for the lifetime of the current app context, if not

running in a request context), this function should only be used for

a queue of functions that's guaranteed to only be built up and

executed within a single request (e.g. within a single DB

transaction).

Adds func_to_enqueue to the queue (and passes func_to_enqueue_ctx as

kwargs if it has been provided). If is_time_to_execute_funcs is

True (e.g. if a DB transaction has just been committed), then takes

each function out of the queue in FIFO order, and executes the

function.

"""

# Initialise the set of queues for queue_key

if queue_key not in g:

setattr(g, queue_key, {})

# Initialise the unique queue for the specified session_hash

func_queues = getattr(g, queue_key)

if session_hash not in func_queues:

func_queues[session_hash] = deque()

func_queue = func_queues[session_hash]

# Add the passed-in function and its context values to the queue

func_queue.append((func_to_enqueue, func_to_enqueue_ctx))

if is_time_to_execute_funcs:

# Take each function out of the queue and execute it

while func_queue:

func_to_execute, func_to_execute_ctx = (

func_queue.popleft())

func_ctx = (

func_to_execute_ctx

if func_to_execute_ctx is not None

else {})

func_to_execute(**func_ctx)

# The queue is now empty, so clean up by deleting the queue

# object from flask.g

del func_queues[session_hash]

To use the function queue, calling code should look something like this:

from flask import current_app as app

from flask_mail import Message

from sqlalchemy.exc import SQLAlchemyError

from myapp.extensions import db, mail

def do_api_log_msg(log_msg):

"""Log the specified message to the app logger."""

app.logger.info(log_msg)

def do_api_notify_email(mail_subject, mail_body):

"""Send the specified notification email to site admins."""

msg = Message(

mail_subject,

sender=app.config['MAIL_DEFAULT_SENDER'],

recipients=app.config['CONTACT_EMAIL_RECIPIENTS'])

msg.body = mail_body

mail.send(msg)

# Added for demonstration purposes, not really needed in production

app.logger.info('Sent email: {0}'.format(mail_subject))

def finalise_api_op(

log_msg=None, mail_subject=None, mail_body=None,

is_db_session_commit=False, is_app_logger=False,

is_send_notify_email=False):

"""Finalise an API operation by committing and logging."""

# Get a unique identifier for this DB transaction

session_hash = hash(db.session)

if is_db_session_commit:

try:

db.session.commit()

# Added for demonstration purposes, not really needed in

# production

app.logger.info('Committed DB transaction')

except SQLAlchemyError as exc:

db.session.rollback()

return {'error': 'error finalising api op'}

if is_app_logger:

queue_key = 'api_log_msg_queue'

func_to_enqueue_ctx = dict(log_msg=log_msg)

queue_and_delayed_execute(

queue_key=queue_key, session_hash=session_hash,

func_to_enqueue=do_api_log_msg,

func_to_enqueue_ctx=func_to_enqueue_ctx,

is_time_to_execute_funcs=is_db_session_commit)

if is_send_notify_email:

queue_key = 'api_notify_email_queue'

func_to_enqueue_ctx = dict(

mail_subject=mail_subject, mail_body=mail_body)

queue_and_delayed_execute(

queue_key=queue_key, session_hash=session_hash,

func_to_enqueue=do_api_notify_email,

func_to_enqueue_ctx=func_to_enqueue_ctx,

is_time_to_execute_funcs=is_db_session_commit)

return {'message': 'api op finalised ok'}

And that code can be called from a bunch of API methods like so:

def update_froggy_colour(

froggy, colour, is_db_session_commit=False, is_app_logger=False,

is_send_notify_email=False):

"""Update a froggy's colour."""

froggy.colour = colour

db.session.add(froggy)

log_msg = ((

'Froggy colour updated: {froggy.id}; new value: '

'{froggy.colour}').format(froggy=froggy))

mail_body = (

'Froggy: {froggy.id}; new colour: {froggy.colour}'.format(

froggy=froggy))

result = finalise_api_op(

log_msg=log_msg, mail_subject='Froggy colour updated',

mail_body=mail_body, is_db_session_commit=is_db_session_commit,

is_app_logger=is_app_logger,

is_send_notify_email=is_send_notify_email)

return result

def make_froggy_jump(

froggy, jump_height, is_db_session_commit=False,

is_app_logger=False, is_send_notify_email=False):

"""Make a froggy jump."""

froggy.is_jumping = True

froggy.jump_height = jump_height

db.session.add(froggy)

log_msg = ((

'Made froggy jump: {froggy.id}; jump height: '

'{froggy.jump_height}').format(froggy=froggy))

mail_body = (

'Froggy: {froggy.id}; jump height: {froggy.jump_height}'.format(

froggy=froggy))

result = finalise_api_op(

log_msg=log_msg, mail_subject='Made froggy jump',

mail_body=mail_body, is_db_session_commit=is_db_session_commit,

is_app_logger=is_app_logger,

is_send_notify_email=is_send_notify_email)

return result

And the API methods can be called like so:

def make_froggy_brightpink_and_highjump(froggy):

"""Make a froggy bright pink and jumping high."""

results = []

result1 = update_froggy_colour(

froggy, "bright_pink", is_app_logger=True)

results.append(result1)

result2 = make_froggy_jump(

froggy, "50 metres", is_db_session_commit=True,

is_app_logger=True, is_send_notify_email=True)

results.append(result2)

return results

If make_froggy_brightpink_and_highjump() is called from within a Flask app context, the app's log should include output that looks something like this:

INFO [2017-12-01 09:00:00] Committed DB transaction

INFO [2017-12-01 09:00:00] Froggy colour updated: 123; new value: bright_pink

INFO [2017-12-01 09:00:00] Made froggy jump: 123; jump height: 50 metres

INFO [2017-12-01 09:00:00] Sent email: Made froggy jump

The log output demonstrates that the desired behaviour has been achieved: first, the DB transaction finishes (i.e. the froggy actually gets set to bright pink, and made to jump high, in one atomic write operation); then, the API actions are logged in the order that they were called (first the colour was updated, then the froggy was made to jump); then, email notifications are sent in order (in this case, we only want an email notification sent for when the froggy jumps high – but if we had also asked for an email notification for when the froggy's colour was changed, that would have been the first email sent).

In summary

That's about all there is to this "task queue" implementation – as I said, it's very lightweight, because it only needs to be simple and short-lived. I'm sharing this solution, mainly to serve as a reminder that you shouldn't just use your standard hammer, because sometimes the hammer is disproportionately big compared to the nail. In this case, the solution doesn't need an asynchronous queue, it doesn't need a scheduled queue, and it doesn't need a threaded queue. (Although moving the email sending off to a celery task is a good idea in production; and moving the logging to celery would be warranted too, if it was logging to a third-party service rather than just to a local file.) It just needs a queue that builds up and that then gets processed, for a single DB transaction.

]]>namedtuple collection lately. As others have already written, despite having been available in Python 2.x and 3.x for a long time now, namedtuple continues to be under-appreciated and under-utilised by many programmers.

# The ol'fashioned tuple way

fruits = [

('banana', 'medium', 'yellow'),

('watermelon', 'large', 'pink')]

for fruit in fruits:

print('A {0} is coloured {1} and is {2} sized'.format(

fruit[0], fruit[2], fruit[1]))

# The nicer namedtuple way

from collections import namedtuple

Fruit = namedtuple('Fruit', 'name size colour')

fruits = [

Fruit(name='banana', size='medium', colour='yellow'),

Fruit(name='watermelon', size='large', colour='pink')]

for fruit in fruits:

print('A {0} is coloured {1} and is {2} sized'.format(

fruit.name, fruit.colour, fruit.size))

namedtuples can be used in a few obvious situations in Python. I'd like to present a new and less obvious situation, that I haven't seen any examples of elsewhere: using a namedtuple instead of MagicMock or flexmock, for creating fake objects in unit tests.

namedtuple vs the competition

namedtuples have a number of advantages over regular tuples and dicts in Python. First and foremost, a namedtuple is (by defintion) more semantic than a tuple, because you can define and access elements by name rather than by index. A namedtuple is also more semantic than a dict, because its structure is strictly defined, so you can be guaranteed of exactly which elements are to be found in a given namedtuple instance. And, similarly, a namedtuple is often more useful than a custom class, because it gives more of a guarantee about its structure than a regular Python class does.

A namedtuple can craft an object similarly to the way that MagicMock or flexmock can. The namedtuple object is more limited, in terms of what attributes it can represent, and in terms of how it can be swapped in to work in a test environment. But it's also simpler, and that makes it easier to define and easier to debug.

Compared with all the alternatives listed here (dict, a custom class, MagicMock, and flexmock – all except tuple), namedtuple has the advantage of being immutable. This is generally not such an important feature, for the purposes of mocking and running tests, but nevertheless, immutability always provides advantages – such as elimination of side-effects via parameters, and more thread-safe code.

Really, for me, the biggest "quick win" that you get from using namedtuple over any of its alternatives, is the lovely built-in string representation that the former provides. Chuck any namedtuple in a debug statement or a logging call, and you'll see everything you need (all the fields and their values) and nothing you don't (other internal attributes), right there on the screen.

# Printing a tuple

f1 = ('banana', 'medium', 'yellow')

# Shows all attributes ordered nicely, but no field names

print(f1)

# ('banana', 'medium', 'yellow')

# Printing a dict

f1 = {'name': 'banana', 'size': 'medium', 'colour': 'yellow'}

# Shows all attributes with field names, but ordering is wrong

print(f1)

# {'colour': 'yellow', 'size': 'medium', 'name': 'banana'}

# Printing a custom class instance

class Fruit(object):

"""It's a fruit, yo"""

f1 = Fruit()

f1.name = 'banana'

f1.size = 'medium'

f1.colour = 'yellow'

# Shows nothing useful by default! (Needs a __repr__() method for that)

print(f1)

# <__main__.Fruit object at 0x7f1d55400e48>

# But, to be fair, can print its attributes as a dict quite easily

print(f1.__dict__)

# {'size': 'medium', 'name': 'banana', 'colour': 'yellow'}

# Printing a MagicMock

from mock import MagicMock

class Fruit(object):

name = None

size = None

colour = None

f1 = MagicMock(spec=Fruit)

f1.name = 'banana'

f1.size = 'medium'

f1.colour = 'yellow'

# Shows nothing useful by default! (and f1.__dict__ is full of a tonne

# of internal cruft, with the fields we care about buried somewhere

# amongst it all)

print(f1)

# <MagicMock spec='Fruit' id='140682346494552'>

# Printing a flexmock

from flexmock import flexmock

f1 = flexmock(name='banana', size='medium', colour='yellow')

# Shows nothing useful by default!

print(f1)

# <flexmock.MockClass object at 0x7f691ecefda0>

# But, to be fair, printing f1.__dict__ shows minimal cruft

print(f1.__dict__)

# {

# 'name': 'banana',

# '_object': <flexmock.MockClass object at 0x7f691ecefda0>,

# 'colour': 'yellow', 'size': 'medium'}

# Printing a namedtuple

from collections import namedtuple

Fruit = namedtuple('Fruit', 'name size colour')

f1 = Fruit(name='banana', size='medium', colour='yellow')

# Shows exactly what we need: what it is, and what all of its

# attributes' values are. Sweeeet.

print(f1)

# Fruit(name='banana', size='medium', colour='yellow')

As the above examples show, without any special configuration, namedtuple's string configuration Just Works™.

namedtuple and fake objects

Let's say you have a simple function that you need to test. The function gets passed in a superhero, which it expects is a SQLAlchemy model instance. It queries all the items of clothing that the superhero uses, and it returns a list of clothing names. The function might look something like this:

# myproject/superhero.py

def get_clothing_names_for_superhero(superhero):

"""List the clothing for the specified superhero"""

clothing_names = []

clothing_list = superhero.clothing_items.all()

for clothing_item in clothing_list:

clothing_names.append(clothing_item.name)

return clothing_names

Since this function does all its database querying via the superhero object that's passed in as a parameter, there's no need to mock anything via funky mock.patch magic or similar. You can simply follow Python's preferred pattern of duck typing, and pass in something – anything – that looks like a superhero (and, unless he takes his cape off, nobody need be any the wiser).

You could write a test for that function, using namedtuple-based fake objects, like so:

# myproject/superhero_test.py

from collections import namedtuple

from myproject.superhero import get_clothing_names_for_superhero

FakeSuperhero = namedtuple('FakeSuperhero', 'clothing_items name')

FakeClothingItem = namedtuple('FakeClothingItem', 'name')

FakeModelQuery = namedtuple('FakeModelQuery', 'all first')

def get_fake_superhero_and_clothing():

"""Get a fake superhero and clothing for test purposes"""

superhero = FakeSuperhero(

name='Batman',

clothing_items=FakeModelQuery(

first=lambda: None,

all=lambda: [

FakeClothingItem(name='cape'),

FakeClothingItem(name='mask'),

FakeClothingItem(name='boots')]))

return superhero

def test_get_clothing_for_superhero():

"""Test listing the clothing for a superhero"""

superhero = get_fake_superhero_and_clothing()

clothing_names = set(get_clothing_names_for_superhero(superhero))

# Verify that list of clothing names is as expected

assert clothing_names == {'cape', 'mask', 'boots'}

The same setup could be achieved using one of the alternatives to namedtuple. In particular, a FakeSuperhero custom class would have done the trick. Using MagicMock or flexmock would have been fine too, although they're really overkill in this situation. In my opinion, for a case like this, using namedtuple is really the simplest and the most painless way to test the logic of the code in question.

In summary

I believe that namedtuple is a great choice for fake test objects, when it fits the bill, and I don't know why it isn't used or recommended for this in general. It's a choice that has some limitations: most notably, you can't have any attribute that starts with an underscore (the "_" character) in a namedtuple. It's also not particularly nice (although it's perfectly valid) to chuck functions into namedtuple fields, especially lambda functions.

Personally, I have used namedtuples in this way quite a bit recently, however I'm still ambivalent about it being the best approach. If you find yourself starting to craft very complicated FakeFoo namedtuples, then perhaps that's a sign that you're doing it wrong. As with everything, I think that this is an approach that can really be of value, if it's used with a degree of moderation. At the least, I hope you consider adding it to your tool belt.

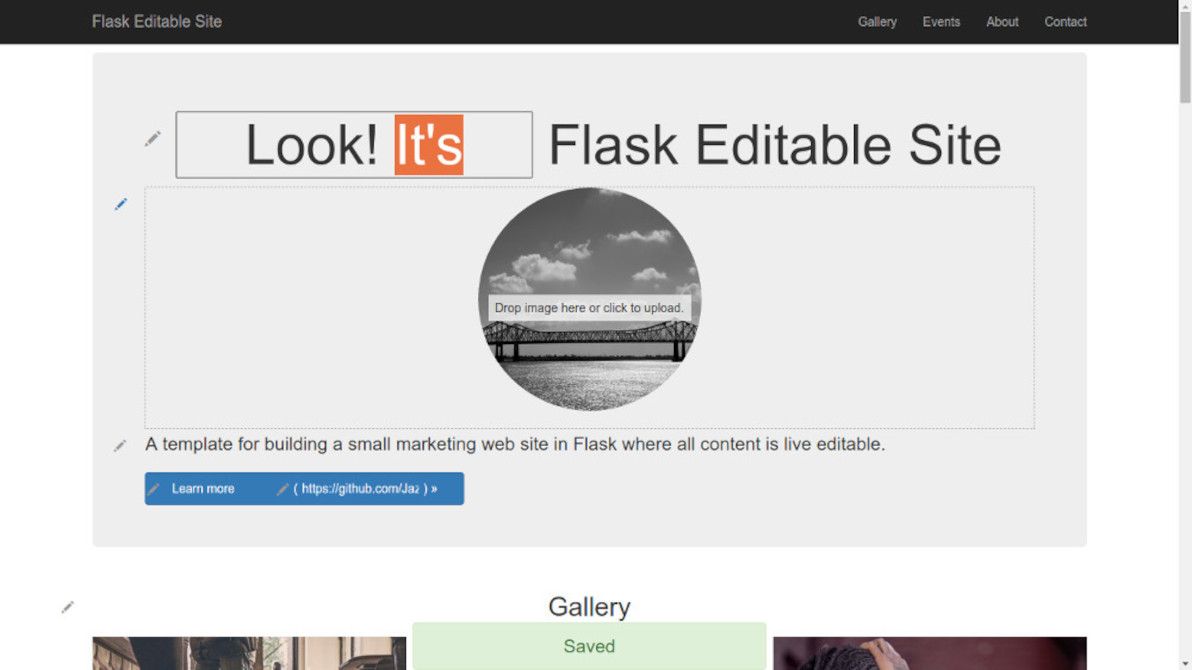

The aim of this app is to demonstrate that, with the help of modern JS libraries, and with some well-thought-out server-side snippets, it's now perfectly possible to "bake in" live in-place editing for virtually every content element in a typical brochureware site.

This app is not a CMS. On the contrary, think of it as a proof-of-concept alternative to a CMS. An alternative where there's no "admin area", there's no "editing mode", and there's no "preview button". There's only direct manipulation.

"Template" means that this is a sample app. It comes with a bunch of models that work out-of-the-box (e.g. text content block, image content block, gallery item, event). However, these are just a starting point: you can and should define your own models when building a real site. Same with the front-end templates: the home page layout and the CSS styles are just examples.

About that "template" idea

I can't stress enough that this is not a CMS. There are of course plenty of CMSes out there already, in Python and in every other language under the sun. Several of those CMSes I have used extensively. I've even been paid to build web sites with them, for most of my professional life so far. I desire neither to add to that list, nor to take on the heavy maintenance burden that doing so would entail.

What I have discovered as a web developer, and what I'm sure that all web developers discover sooner or later, is that there's no such thing as the perfect CMS. Possibly, there isn't even such thing as a good CMS! If you want to build a web site with a content management experience that's highly tailored to the project in question, then really, you have to build a unique custom CMS just for that site. Deride me as a perfectionist if you want, but that's my opinion.

There is such a thing as a good framework. Flask Editable Site, as its name suggests, uses the Flask framework, which has the glorious honour of being my favourite framework these days. And there is definitely such a thing as a good library. Flask Editable Site uses a number of both front-end and back-end libraries. The best libraries can be easily mashed up together in different configurations, on top of different frameworks, to help power a variety of different apps.

Flask Editable Site is not a CMS. It's a sample app, which is a template for building a unique CMS-like app tailor-made for a given project. If you're doing it right, then no two projects based on Flask Editable Site will be the same app. Every project has at least slightly different data models, users / permissions, custom forms, front-end widgets, and so on.

So, there's the practical aim of demonstrating direct manipulation / live editing. However, Flask Editable Site has a philosophical aim, too. The traditional "building a super one-size-fits-all app to power 90% of sites" approach isn't necessarily a good one. You inevitably end up fighting the super-app, and hacking around things to make it work for you. Instead, how about "building and sharing a template for making each site its own tailored app"? How about accepting that "every site is a hack", and embracing that instead of fighting it?

Thanks and acknowledgements

Thanks to all the libraries that Flask Editable Site uses; in each case, I tried to choose the best library available at the present time, for achieving a given purpose:

- Dante

contenteditableWYSIWYG editor, a Medium editor clone. I had previously used MediumEditor, and I recommend it too, but I feel that Dante gives a more polished out-of-the-box experience for now. I think the folks at Medium have done a great job in setting the bar high for beautiful rich-text editing, which is an important part of the admin experience for many web sites / apps. - Dropzone.js image upload widget. C'mon, people, it's 2015. Death to HTML file fields for uploads. Drag and drop with image preview, bring it on. From my limited research, Dropzone.js seems to be the clear leader of this pack at the moment.

- Bootstrap datetimepicker for calendar picker and hour/minute selector.

- Bootstrap 3 for pretty CSS styles and grid layouts. I admit I've become a bit of a Bootstrap addict lately. For developers with non-existent artistic ability, like myself, it's impossible to resist. Font Awesome is rather nice, too.

- Markovify for random text generation. I discovered this one (and several alternative implementations of it) while building Flask Editable Site, and I'm hooked. Adios, Lorem Ipsum, and don't hit the door on your way out.

- Bootstrap Freelancer theme by Start Bootstrap. Although Flask Editable Site uses vanilla Bootstrap, I borrowed various snippets of CSS / JS from this theme, as well as the overall layout.

- cookiecutter-flask, a Flask app template. I highly recommend this as a guide to best-practice directory layout, configuration management, and use of patterns in a Flask app. Thanks to these best practices, Flask Editable Site is also reasonably Twelve-Factor compliant, especially in terms of config and backing services.

Flask Editable Site began as the codebase for The Daydream Believers Performers web site, which I built pro-bono as a side project recently. So, acknowledgements to that group for helping to make Flask Editable Site happen.

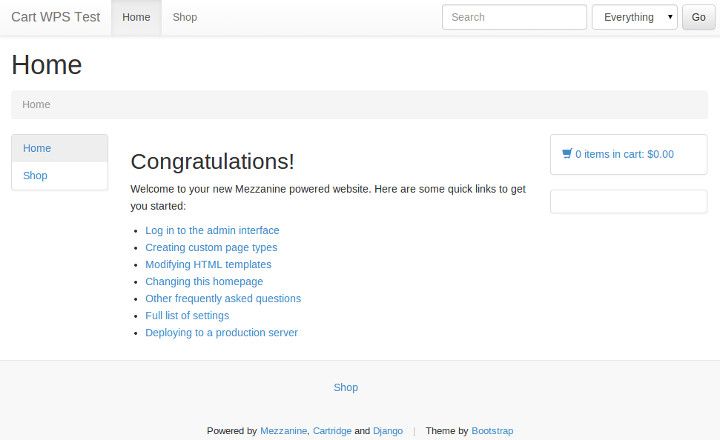

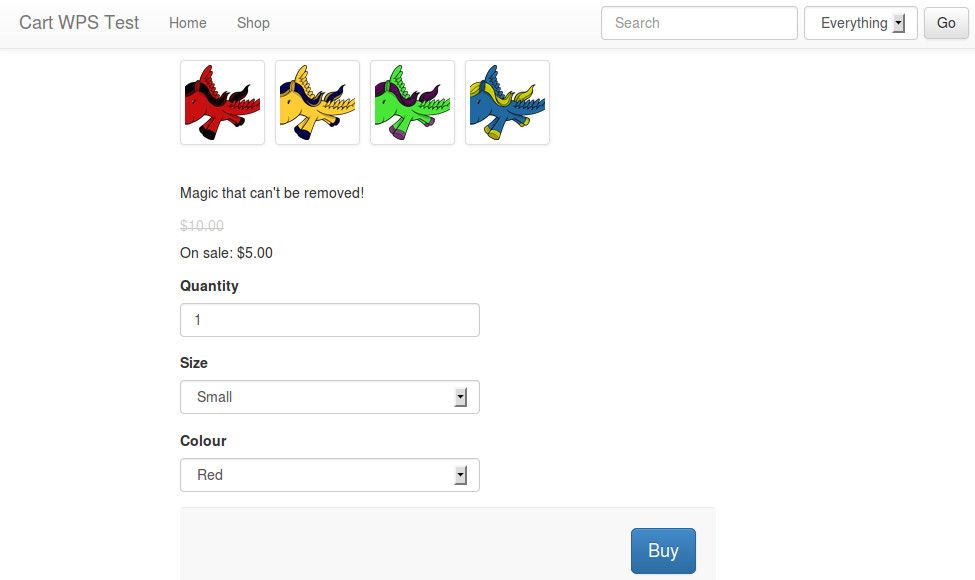

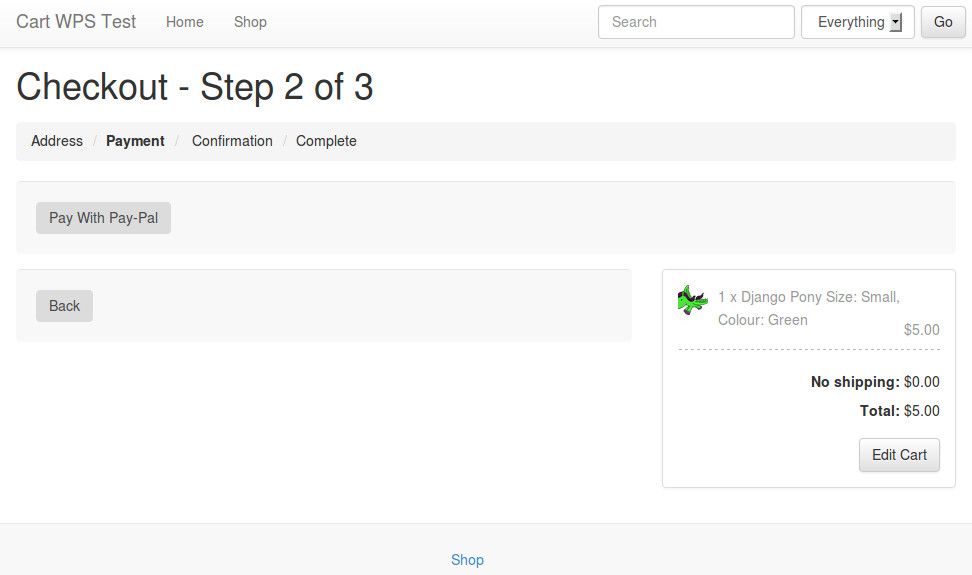

For the live editing UX, I acknowledge that I drew inspiration from several examples. First and foremost, from Mezzanine, a CMS (based on Django) which I've used on occasion. Mezzanine puts "edit" buttons in-place next to most text fields on a site, and pops up a traditional (i.e. non contenteditable) WYSIWYG editor when these are clicked.

I also had a peek at Create.js, which takes care of the front-end side of live content editing quite similarly to the way I've cobbled it together. In Flask Editable Site, the combo of Dante editor and my custom "autosave" JS could easily be replaced with Create.js (particularly when using Hallo editor, which is quite minimalist like Dante); I guess it's just a question of personal taste.

Sir Trevor JS is an interesting new kid on the block. I'm quite impressed with Sir Trevor, but its philosophy of "adding blocks of anything down the page" isn't such a great fit for Flask Editable Site, where the idea is that site admins can only add / edit content within specific constraints for each block on the page. However, for sites with no structured content models, where it's OK for each page to be a free canvas (or for a "free canvas" within, say, each blog post on a site), I can see Sir Trevor being a real game-changer.

There's also X-editable, which is the only JS solution that I've come across for nice live editing of list-type content (i.e. checkoxes, radio buttons, tag fields, autocomplete boxes, etc). I haven't used X-editable in Flask Editable Site, because I'm mainly dealing with text and image fields (and for date / time fields, I prefer a proper calendar widget). But if I needed live editing of list fields, X-editable would be my first choice.

Final thoughts

I must stress that, as I said above, Flask Editable site is a proof-of-concept. It doesn't have all the features you're going to need for your project foo. In particular, it doesn't support very many field types: only text ("short text" and "rich text"), date, time, and image. It should also support inline images and (YouTube / Vimeo) videos out-of-the-box, as this is included with Dante, but I haven't tested it. For other field types, forks / pull requests / sister projects are welcome.

If you look at the code (particularly the settings.py file and the home view), you should be able to add live editing of new content models quite easily, with just a bit of copy-pasting and tweaking. The idea is that the editable.views code is generic enough, that you won't need to change it at all when adding new models / fields in your back-end. At least, that's the idea.

Quite a lot of the code in Flask Editable Site is more complex than it strictly needs to be, in order to support "session store mode", where all content is saved to the current user's session instead of to the database (preferably using something like Memcached or temp files, rather than cookies, although that depends on what settings you use). I developed "session store mode" in order to make the demo site work without requiring any hackery such as a scheduled DB refresh (which is the usual solution in such cases). However, I can see it also being useful for sandbox environments, for UAT, and for reviewing design / functionality changes without "real" content getting in the way.

The app also includes a fair bit of code for random generation and selection of sample text and image content. This was also done primarily for the purposes of the demo site. But, upon reflection, I think that a robust solution for randomly populating a site's content is really something that all CMS-like apps should consider more seriously. The exact algorithms and sample content pools for this, of course, are a matter of taste. But the point is that it's not just about pretty pictures and amusing Dickensian text. It's about the mindset of treating content dynamically, and of recognising the bounds and the parameters of each placeholder area on the page. And what better way to enforce that mindset, than by seeing a different random set of content every time you restart the app?

I decided to make this project a good opportunity for getting my hands dirty with thorough unit / functional testing. As such, Flask Editable Site is my first open-source effort that features automated testing via Travis CI, as well as test coverage reporting via Coveralls. As you can see on the GitHub page, tests are passing and coverage is pretty good. The tests are written in pytest, with significant help from webtest, too. I hope that the tests also serve as a template for other projects; all too often, with small brochureware sites, formal testing is done sparingly if at all.

Regarding the "no admin area" principle, Flask Editable Site has taken quite a purist approach to this. Personally, I think that radically reducing the role of "admin areas" in web site administration will lead to better UX. Anything that's publicly visible on the site, should be editable first and foremost via direct manipulation. However, in reality there will always be things that aren't publicly visible, and that admins still need to edit. For example, sites will always need user / role CRUD pages (unless you're happy to only manage users via shell commands). So, if you do add admin pages to a project based on Flask Editable Site, please don't feel as though you're breaking some golden rule.

Hope you enjoy playing around with the app. Who knows, maybe you'll even build something useful based on it. Feedback, bug reports, pull requests, all welcome.

]]>

Image source: Giant Chocolate chip cookie recipe.

I'd never before stopped to think about whether or not there was a limit to how much you can put in a cookie. Usually, cookies only store very small string values, such as a session ID, a tracking code, or a browsing preference (e.g. "tile" or "list" for search results). So, usually, there's no need to consider its size limits.

However, while working on a new side project of mine that heavily uses session storage, I discovered this limit the hard (to debug) way. Anyway, now I've got one more adage to add to my developer's phrasebook: if you're trying to store more than 4KiB in a cookie, you're doing it wrong.

Actually, according to the web site Browser Cookie Limits, the safe "lowest common denominator" maximum size to stay below is 4093 bytes. Also check out the Stack Overflow discussion, What is the maximum size of a web browser's cookie's key?, for more commentary regarding the limit.

In my case – working with Flask, which depends on Werkzeug – trying to store an oversized cookie doesn't throw any errors, it simply fails silently. I've submitted a patch to Werkzeug, to make oversized cookies raise an exception, so hopefully it will be more obvious in future when this problem occurs.

It appears that this is not an isolated issue; many web frameworks and libraries fail silently with storage of too-big cookies. It's the case with Django, where the decision was made to not fix it, for technical reasons. Same story with CodeIgniter. Seems that Ruby on Rails is well-behaved and raises exceptions. Basically, your mileage may vary: don't count on your framework of choice alerting you, if you're being a cookie monster.

Also, as several others have pointed out, trying to store too much data in cookies is a bad idea anyway, because that data travels with every HTTP request and response, so it should be as small as possible. As I learned, if you find that you're dealing with non-trivial amounts of session data, then ditch client-side storage for the app in question, and switch to server-side session data storage (preferably using something like Memcached or Redis).

]]>foodutils) in multiple places, there are a variety of steps at your disposal. The most obvious step is to move that foodutils code into its own file (thus making it a Python module), and to then import that module wherever else you want in the codebase.

Most of the time, doing that is enough. The Python module importing system is powerful, yet simple and elegant.

But… what happens a few months down the track, when you're working on two new codebases (let's call them TortelliniFest and GnocchiFest – perhaps they're for new clients too), that could also benefit from re-using foodutils from your old project? What happens when you make some changes to foodutils, for the new projects, but those changes would break compatibility with the old LasagnaFest codebase?

What happens when you want to give a super-charged boost to your open source karma, by contributing foodutils to the public domain, but separated from the cruft that ties it to LasagnaFest and Co? And what do you do with secretfoodutils, which for licensing reasons (it contains super-yummy but super-secret sauce) can't be made public, but which should ideally also be separated from the LasagnaFest codebase for easier re-use?

Image source: Hoedspruit Endangered Species Centre.

Or – not to be forgotten – what happens when, on one abysmally rainy day, you take a step back and audit the LasagnaFest codebase, and realise that it's got no less than 38 different *utils chunks of code strewn around the place, and you ponder whether surely keeping all those utils within the LasagnaFest codebase is really the best way forward?

Moving foodutils to its own module file was a great first step; but it's clear that in this case, a more drastic measure is needed. In this case, it's time to split off foodutils into a separate, independent codebase, and to make it an external dependency of the LasagnaFest project, rather than an internal component of it.

This article is an introduction to the how and the why of cutting up parts of a Python codebase into dependencies. I've just explained a fair bit of the why. As for the how: in a nutshell, pip (for installing dependencies), the public PyPI repo (for hosting open-sourced dependencies), and a private PyPI repo (for hosting proprietary dependencies). Read on for more details.

Levels of modularity

One of the (many) joys of coding in Python is the way that it encourages modularity. For example, let's start with this snippet of completely non-modular code:

foodgreeter.py:

dude_name = 'Johnny'

food_today = 'lasagna'

print("Hey {dude_name}! Want a {food_today} today?".format(

dude_name=dude_name,

food_today=food_today))

There are, in my opinion, three different levels of re-factoring that you can apply, in order to make it more modular. You can think of these levels like the layers of a lasagna, if you want. Or not.

Each successive level of re-factoring involves a bit more work in the short-term, but results in more convenient re-use in the long-term. So, which level is appropriate, depends on the likelihood that you (or others) will want to re-use a given chunk of code in the future.

First, you can split the logic out of the procedural blurg, and into a function in the same file:

foodgreeter.py:

def greet_dude_with_food(dude_name, food_today):

return "Hey {dude_name}! Want a {food_today} today?".format(

dude_name=dude_name,

food_today=food_today)

dude_name = 'Johnny'

food_today = 'lasagna'

print(greet_dude_with_food(

dude_name=dude_name,

food_today=food_today))

Second, you can move that functionality into a separate file, and import it using Python's module imports system:

foodutils.py:

def greet_dude_with_food(dude_name, food_today):

return "Hey {dude_name}! Want a {food_today} today?".format(

dude_name=dude_name,

food_today=food_today)

foodgreeter.py:

from foodutils import greet_dude_with_food

dude_name = 'Johnny'

food_today = 'lasagna'

print(greet_dude_with_food(

dude_name=dude_name,

food_today=food_today))

And, finally, you can move that file out of your codebase, upload it to a Python package repository (the most common such repository being PyPI), and then declare it as a dependency of your codebase using pip:

requirements.txt:

foodutils==1.0.0

Run command:

pip install -r requirements.txt

foodgreeter.py:

from foodutils import greet_dude_with_food

dude_name = 'Johnny'

food_today = 'lasagna'

print(greet_dude_with_food(

dude_name=dude_name,

food_today=food_today))

Image source: Organize and Decorate Everything.

As I said, achieving this last level of modularity isn't always necessary or appropriate, due to the overhead involved. For a given chunk of code, there are always going to be trade-offs to consider, and as a developer it's always going to be your judgement call.

Splitting out code

For the times when it is appropriate to go that "last mile" and split code out as an external dependency, there are (in my opinion) insufficient resources regarding how to go about it. I hope, therefore, that this section serves as a decent guide on the matter.

Factor out coupling

The first step in making until-now "project code" an external dependency, is removing any coupling that the chunk of code may have to the rest of the codebase. For example, the foodutils code shown above is nice and de-coupled; but what if it instead looked like so:

foodutils.py:

from mysettings import NUM_QUESTION_MARKS

def greet_dude_with_food(dude_name, food_today):

return "Hey {dude_name}! Want a {food_today} today{q_marks}".format(

dude_name=dude_name,

food_today=food_today,

q_marks='?'*NUM_QUESTION_MARKS)

This would be problematic, because this code relies on the assumption that it lives in a codebase containing a mysettings module, and that the configuration value NUM_QUESTION_MARKS is defined within that module.

We can remove this coupling by changing NUM_QUESTION_MARKS to be a parameter passed to greet_dude_with_food, like so:

foodutils.py:

def greet_dude_with_food(dude_name, food_today, num_question_marks):

return "Hey {dude_name}! Want a {food_today} today{q_marks}".format(

dude_name=dude_name,

food_today=food_today,

q_marks='?'*num_question_marks)

The dependent code in this project could then pass in the required config value when it calls greet_dude_with_food, like so:

foodgreeter.py:

from foodutils import greet_dude_with_food

from mysettings import NUM_QUESTION_MARKS

dude_name = 'Johnny'

food_today = 'lasagna'

print(greet_dude_with_food(

dude_name=dude_name,

food_today=food_today,

num_question_marks=NUM_QUESTION_MARKS))

Once the code we're re-factoring no longer depends on anything elsewhere in the codebase, it's ready to be made an external dependency.

New repo for dependency

Next comes the step of physically moving the given chunk of code out of the project's codebase. In most cases, this means deleting the given file(s) from the project's version control repository (you are using version control, right?), and creating a new repo for those file(s) to live in.

For example, if you're using Git, the steps would be something like this:

mkdir /path/to/foodutils

cd /path/to/foodutils

git init .

mv /path/to/lasagnafest/project/foodutils.py .

git add .

git commit -m "Initial commit"

cd /path/to/lasagnafest

git rm project/foodutils.py

git commit -m "Moved foodutils to external dependency"

Add some metadata

The given chunk of code now has its own dedicated repo. But it's not yet a project, in its own right, and it can't yet be referenced as a dependency. To do that, we'll need to add some more files to the new repo, mainly consisting of metadata describing "who" this project is, and what it does.

First up, add a .gitignore file – I recommend the default Python .gitignore on GitHub. Feel free to customise as needed.

Next, add a version number to the code. The best way to do this, is to add it at the top of the main Python file, e.g. by adding this to the top of foodutils.py:

__version__ = '0.1.0'

After that, we're going to add the standard metadata files that almost all open-source Python projects have. Most importantly, a setup.py file that looks something like this:

import os

import setuptools

module_path = os.path.join(os.path.dirname(__file__), 'foodutils.py')

version_line = [line for line in open(module_path)

if line.startswith('__version__')][0]

__version__ = version_line.split('__version__ = ')[-1][1:][:-2]

setuptools.setup(

name="foodutils",

version=__version__,

url="https://github.com/misterfoo/foodutils",

author="Mister foo",

author_email="mister@foo.com",

description="Utils for handling food.",

long_description=open('README.rst').read(),

py_modules=['foodutils'],

zip_safe=False,

platforms='any',

install_requires=[],

classifiers=[

'Development Status :: 2 - Pre-Alpha',

'Environment :: Web Environment',

'Intended Audience :: Developers',

'Operating System :: OS Independent',

'Programming Language :: Python',

'Programming Language :: Python :: 2',

'Programming Language :: Python :: 2.7',

'Programming Language :: Python :: 3',

'Programming Language :: Python :: 3.3',

],

)

And also, a README.rst file:

foodutils

=========

Utils for handling food.

Once you've created those files, commit them to the new repo.

Push the repo

Great – the chunk of code now lives in its own repo, and it contains enough metadata for other projects to see what its name is, what version(s) of it there are, and what function(s) it performs. All that needs to be done now, is to decide where this repo will be hosted. But to do this, you first need to answer an important non-technical question: to open-source the code, or to keep it proprietary?

In general, you should open-source your dependencies whenever possible. You get more eyeballs (for free). Famous hairy people like Richard Stallman will send you flowers. If nothing else, you'll at least be able to always easily find your code, guaranteed (if you can't remember where it is, just Google it!). You get the drift. If open-sourcing the code, then the most obvious choice for where to host the repo is GitHub. (However, I'm not evangelising GitHub here, remember there are other options, kids).

Open source is kool, but sometimes you can't or you don't want to go down that route. That's fine, too – I'm not here to judge anyone, and I can't possibly be aware of anyone else's business / ownership / philosophical situation. So, if you want to keep the code all to your little self (or all to your little / big company's self), you're still going to have to host it somewhere. And no, "on my laptop" does not count as your code being hosted somewhere (well, technically you could just keep the repo on your own PC, and still reference it as a dependency, but that's a Bad Idea™). There are a number of hosting options: for example, on a VPS that you control; or using a managed service such as GitHub private, Bitbucket, or Assembla (note: once again, not promoting any specific service provider, just listing the main players as options).

So, once you've decided whether or not to open-source the code, and once you've settled on a hosting option, push the new repo to its hosted location.

Upload to PyPI

Nearly there now. The chunk of code has been de-coupled from its dependent project; it's been put in a new repo with the necessary metadata; and that repo is now hosted at a permanent location somewhere online. All that's left, is to make it known to the universe of Python projects, so that it can be easily listed as a dependency of other Python projects.

If you've developed with Python before (and if you've read this far, then I assume you have), then no doubt you've heard of pip. Being the Python package manager of choice these days, pip is the tool used to manage Python dependencies. pip can find dependencies from a variety of locations, but the place it looks first and foremost (by default) is on the Python Package Index (PyPI).

If your dependency is public and open-source, then you should add it to PyPI. Each time you release a new version, then (along with committing and tagging that new version in the repo) you should also upload it to PyPI. I won't go into the details in this article; please refer to the official docs for registering and uploading packages on PyPI. When following the instructions there, you'll generally want to package your code as a "universal wheel", you'll generally use the PyPI website form to register a new package, and you'll generally use twine to upload the package.

If your dependency is private and proprietary, then PyPI is not an option. The easiest way to deal with private dependencies (also the easiest way to deal with public dependencies, for that matter), is to not worry about proper Python packaging at all, and simply to use pip's ability to directly reference a source repo (including a specific commit / tag), e.g:

pip install -e \

git+http://git.myserver.com/foodutils.git@0.1.0#egg=foodutils

However, that has a number of disadvantages, the most visible disadvantage being that pip install will run much slower, because it has to do a git pull every time you ask it to check that foodutils is installed (even if you specify the same commit / tag each time).

A better way to deal with private dependencies, is to create your own "private PyPI". Same as with public packages: each time you release a new version, then (along with committing and tagging that new version in the repo) you should also upload it to your private PyPI. For instructions regarding this, please refer to my guide for how to set up and use a private PyPI repo. Also, note that my guide is for quite a minimal setup, although it contains links to some alternative setup options, including more advanced and full-featured options. (And if using a private PyPI, then take note of my guide's instructions for what to put in your local ~/.pip/pip.conf file).

Reference the dependency

The chunk of code is now ready to be used as an external dependency, by any project. To do this, you simply list the package in your project's requirements.txt file; whether the package is on the public PyPI, or on a private PyPI of your own, the syntax is the same:

foodutils==0.1.0 # From pypi.myserver.com

Then, just run your dependencies through pip as usual:

pip install -r requirements.txt

And there you have it: foodutils is now an external dependency. You can list it as a requirement for LasagnaFest, TortelliniFest, GnocchiFest, and as many other projects as you need.

Final thoughts

This article was born out of a series of projects that I've been working on over the past few months (and that I'm still working on), written mainly in Flask (these apps are still in alpha; ergo, sorry, can't talk about their details yet). The size of the projects' codebases grew to be rather unwieldy, and the projects have quite a lot of shared functionality.

I started out by re-using chunks of code between the different projects, with the hacky solution of sym-linking from one codebase to another. This quickly became unmanageable. Once I could stand the symlinks no longer (and once I had some time for clean-up), I moved these shared chunks of code into separate repos, and referenced them as dependencies (with some being open-sourced and put on the public PyPI). Only in the last week or so, after losing patience with slow pip installs, and after getting sick of seeing far too many -e git+http://git… strings in my requirements.txt files, did I finally get around to setting up a private PyPI, for better dealing with the proprietary dependencies of these codebases.

I hope that this article provides some clear guidance regarding what can be quite a confusing task, i.e. that of creating and maintaining a private Python package index. Aside from being a technical guide, though, my aim in penning this piece is to explain how you can split off component parts of a monolithic codebase into re-usable, independent separate codebases; and to convey the advantages of doing so, in terms of code quality and maintainability.

Flask, my framework of choice these days, strives to consist of a series of independent projects (Flask, Werkzeug, Jinja, WTForms, and the myriad Flask-* add-ons), which are compatible with each other, but which are also useful stand-alone or with other systems. I think that this is a great example for everyone to follow, even humble "custom web-app" developers like myself. Bearing that in mind, devoting some time to splitting code out of a big bad client-project codebase, and creating more atomic packages (even if not open-source) upon whose shoulders a client-project can stand, is a worthwhile endeavour.

]]>When it comes to exporting Postgres data (as SQL INSERT statements, at least), the tool of choice is the standard pg_dump utility. Good ol' pg_dump is rock solid but, unfortunately, it doesn't allow for any row-level filtering. Turns out that, for a recent project of mine, a filtered SQL dump is exactly what the client ordered.

On account of this shortcoming, I spent some time whipping up a lil' Python script to take care of this functionality. I've converted the original code (written for a client-specific data set) to a more generic example script, which I've put up on GitHub under the name "PG Dump Filtered". If you're just after the code, then feel free to head over to the repo without further ado. If you'd like to stick around for the tour, then read on.

Worlds apart

For the example script, I've set up a simple schema of four entities: worlds, countries, cities, and people. This schema happens to be purely hierarchical (i.e. each world has zero or more countries, each country has zero or more cities, and each city has zero or more people), for the sake of simplicity; but the script could be adapted to any valid set of foreign-key based relationships.

CREATE TABLE world (

name character varying(255) NOT NULL,

created_at timestamp without time zone,

updated_at timestamp without time zone,

active boolean NOT NULL,

uuid bytea,

id integer NOT NULL

);

ALTER TABLE ONLY world

ADD CONSTRAINT world_pkey PRIMARY KEY (id);

CREATE TABLE country (

name character varying(255) NOT NULL,

created_at timestamp without time zone,

updated_at timestamp without time zone,

active boolean NOT NULL,

uuid bytea,

id integer NOT NULL,

world_id integer,

bigness numeric(10,2)

);

ALTER TABLE ONLY country

ADD CONSTRAINT country_pkey PRIMARY KEY (id);

ALTER TABLE ONLY country

ADD CONSTRAINT country_world_id_fkey FOREIGN KEY (world_id)

REFERENCES world(id);

CREATE TABLE city (

name character varying(255) NOT NULL,

created_at timestamp without time zone,

updated_at timestamp without time zone,

active boolean NOT NULL,

uuid bytea,

id integer NOT NULL,

country_id integer,

weight integer,

is_big boolean DEFAULT false NOT NULL,

pseudonym character varying(255) DEFAULT ''::character varying

NOT NULL,

description text DEFAULT ''::text NOT NULL

);

ALTER TABLE ONLY city

ADD CONSTRAINT city_pkey PRIMARY KEY (id);

ALTER TABLE ONLY city

ADD CONSTRAINT city_country_id_fkey FOREIGN KEY (country_id)

REFERENCES country(id);

CREATE TABLE person (

name character varying(255) NOT NULL,

created_at timestamp without time zone,

updated_at timestamp without time zone,

active boolean NOT NULL,

uuid bytea,

id integer NOT NULL,

city_id integer,

person_type character varying(255) NOT NULL

);

ALTER TABLE ONLY person

ADD CONSTRAINT person_pkey PRIMARY KEY (id);

ALTER TABLE ONLY person

ADD CONSTRAINT person_city_id_fkey FOREIGN KEY (city_id)

REFERENCES city(id);

Using this schema, data belonging to two different worlds can co-exist in the same database. For example, we can have data for the world "Krypton" co-exist with data for the world "Romulus":

INSERT INTO world (name, created_at, updated_at, active, uuid, id)

VALUES ('Krypton', '2015-06-01 09:00:00.000000',

'2015-06-06 09:00:00.000000', true,

'\x478a43577ebe4b07ba8631ca228ee42a', 1);

INSERT INTO world (name, created_at, updated_at, active, uuid, id)

VALUES ('Romulus', '2015-06-01 10:00:00.000000',

'2015-06-05 13:00:00.000000', true,

'\x82e2c0ac3ba84a34a1ad3bbbb2063547', 2);

INSERT INTO country (name, created_at, updated_at, active, uuid, id,

world_id, bigness)

VALUES ('Crystalland', '2015-06-02 09:00:00.000000',

'2015-06-08 09:00:00.000000', true,

'\xcd0338cf2e3b40c3a3751b556a237152', 1, 1, 3.86);

INSERT INTO country (name, created_at, updated_at, active, uuid, id,

world_id, bigness)

VALUES ('Greenbloodland', '2015-06-03 11:00:00.000000',

'2015-06-07 13:00:00.000000', true,

'\x17591321d1634bcf986d0966a539c970', 2, 2, NULL);

INSERT INTO city (name, created_at, updated_at, active, uuid, id,

country_id, weight, is_big, pseudonym, description)

VALUES ('Kryptonopolis', '2015-06-05 09:00:00.000000',

'2015-06-11 09:00:00.000000', true,

'\x13659f9301d24ea4ae9c534d70285edc', 1, 1, 100, true,

'Pointyville',

'Nice place, once you get used to the pointiness.');

INSERT INTO city (name, created_at, updated_at, active, uuid, id,

country_id, weight, is_big, pseudonym, description)

VALUES ('Rom City', '2015-06-04 09:00:00.000000',

'2015-06-13 09:00:00.000000', true,

'\xc45a9fb0a92a43df91791b11d65f5096', 2, 2, 200, false,

'',

'Gakkkhhhh!');

INSERT INTO person (name, created_at, updated_at, active, uuid,

city_id, person_type)

VALUES ('Superman', '2015-06-14 09:00:00.000000',

'2015-06-15 22:00:00.000000', true,

'\xbadd1ca153994deca0f78a5158215cf6', 1,

'Awesome Heroic Champ');

INSERT INTO person (name, created_at, updated_at, active, uuid,

city_id, person_type)

VALUES ('General Zod', '2015-06-14 10:00:00.000000',

'2015-06-15 23:00:00.000000', true,

'\x796031428b0a46c2a9391eb5dc45008a', 1,

'Bad Bloke');

INSERT INTO person (name, created_at, updated_at, active, uuid,

city_id, person_type)

VALUES ('Mister Funnyears', '2015-06-14 11:00:00.000000',

'2015-06-15 22:30:00.000000', false,

'\x22380f6dc82d47f488a58153215864cb', 2,

'Mediocre Dude');

INSERT INTO person (name, created_at, updated_at, active, uuid,

city_id, person_type)

VALUES ('Captain Greeny', '2015-06-15 05:00:00.000000',

'2015-06-16 08:30:00.000000', true,

'\x485e31758528425dbabc598caaf86fa4', 2,

'Weirdo');

In this case, our two key stakeholders – the Kryptonians and the Romulans – have been good enough to agree to their respective data records being stored in the same physical database. After all, they're both storing the same type of data, and they accept the benefits of a shared schema in terms of cost-effectiveness, maintainability, and scalability.

However, these two stakeholders aren't exactly the best of friends. In fact, they're not even on speaking terms (have you even seen them both feature in the same franchise, let alone the same movie?). Plus, for legal reasons (and in the interests of intergalactic peace), there can be no possibility of Kryptonian records falling into Romulan hands, or vice versa. So, it really is critical that, as far as these two groups are concerned, the data appears to be completely partitioned.

(It's also lucky that we're using Postgres and Python, which all parties appear to be cool with – the Klingons are mad about Node.js and MongoDB these days, so the Romulans would never have come on board if we'd gone down that path…).

Fortunately, thanks to the wondrous script that's now been written, these unlikely DB room-mates can have their dilithium and eat it, too. The Romulans, for example, can simply specify their World ID of 2:

./pg_dump_filtered.py \

"postgresql://pg_dump_test:pg_dump_test@localhost:5432/pg_dump_test" 2 \

> ~/pg_dump_test_output.sql

And they'll get a DB dump of what is (as far as they're concerned) … well, the whole world! (Note: please do not change your dietary habits per above innuendo, dilithium can harm your unborn baby).

And all thanks to a lil' bit of Python / SQL trickery, to filter things according to their world:

# ...

# Thanks to:

# http://bytes.com/topic/python/answers/438133-find-out-schema-psycopg

t_cur.execute((

"SELECT column_name "

"FROM information_schema.columns "

"WHERE table_name = '%s' "

"ORDER BY ordinal_position") % table)

t_fields_str = ', '.join([x[0] for x in t_cur])

d_cur = conn.cursor()

# Start constructing the query to grab the data for dumping.

query = (

"SELECT x.* "

"FROM %s x ") % table

# The rest of the query depends on which table we're at.

if table == 'world':

query += "WHERE x.id = %(world_id)s "

elif table == 'country':

query += "WHERE x.world_id = %(world_id)s "

elif table == 'city':

query += (

"INNER JOIN country c "

"ON x.country_id = c.id ")

query += "WHERE c.world_id = %(world_id)s "

elif table == 'person':

query += (

"INNER JOIN city ci "

"ON x.city_id = ci.id "

"INNER JOIN country c "

"ON ci.country_id = c.id ")

query += "WHERE c.world_id = %(world_id)s "

# For all tables, filter by the top-level ID.

d_cur.execute(query, {'world_id': world_id})

With a bit more trickery thrown in for good measure, to more-or-less emulate pg_dump's export of values for different data types:

# ...

# Start constructing the INSERT statement to dump.

d_str = "INSERT INTO %s (%s) VALUES (" % (table, t_fields_str)

d_vals = []

for i, d_field in enumerate(d_row):

d_type = type(d_field).__name__

# Rest of the INSERT statement depends on the type of

# each field.

if d_type == 'datetime':

d_vals.append("'%s'" % d_field.isoformat().replace('T', ' '))

elif d_type == 'bool':

d_vals.append('%s' % (d_field and 'true' or 'false'))

elif d_type == 'buffer':

d_vals.append(r"'\x" + ("%s'" % hexlify(d_field)))

elif d_type == 'int':

d_vals.append('%d' % d_field)

elif d_type == 'Decimal':

d_vals.append('%f' % d_field)

elif d_type in ('str', 'unicode'):

d_vals.append("'%s'" % d_field.replace("'", "''"))

elif d_type == 'NoneType':

d_vals.append('NULL')

d_str += ', '.join(d_vals)

d_str += ');'

(Above code samples from: pg_dump_filtered.py).

And that's the easy part done! Now, on to working out how to efficiently do Postgres master-slave replication over a distance of several thousand light years, without disrupting the space-time continuum.

(livelong AND prosper);

Hope my little example script comes in handy, for anyone else needing a version of pg_dump that can do arbitrary filtering on inter-related tables. As I said in the README, with only a small amount of tweaking, this script should be able to produce a dump of virtually any relational data set, filtered by virtually any criteria that you might fancy.

Also, this script is for Postgres: the pg_dump utility lacks any query-level filtering functionality, so using it in this way is simply not an option. The script could also be quite easily adapted to other DBMSes (e.g. MySQL, SQL Server, Oracle), although most of Postgres' competitors have a dump utility with at least some filtering capability.

For static files (i.e. an app's seldom-changing CSS, JS, and images), Flask-Assets and Flask-S3 work together like a charm. For more dynamic files, there exist numerous snippets of solutions, but I couldn't find anything to fill in all the gaps and tie it together nicely.

Due to a pressing itch in one of my projects, I decided to rectify this situation somewhat. Over the past few weeks, I've whipped up a bunch of Python / Flask tidbits, to handle the features that I needed:

- Local or S3-based find, save, and delete for files in Python

- Easy linking to S3 files

- On-demand local- or S3-based image thumbnail generation in Flask

- Local- or S3-based file uploading in Flask-Admin

I've also published an example app, that demonstrates how all these tools can be used together. Feel free to dive straight into the example code on GitHub; or read on for a step-by-step guide of how this Flask S3 tool suite works.

Using s3-saver

The key feature across most of this tool suite, is being able to use the same code for working with local and with S3-based files. Just change a single config option, or a single function argument, to switch from one to the other. This is critical to the way I need to work with files in my Flask projects: on my development environment, everything should be on the local filesystem; but on other environments (especially production), everything should be on S3. Others may have the same business requirements (in which case you're in luck). This is most evident with s3-saver.

Here's a sample of the typical code you might use, when working with s3-saver:

from io import BytesIO

from os import path

from flask import current_app as app

from flask import Blueprint

from flask import flash

from flask import redirect

from flask import render_template

from flask import url_for

from s3_saver import S3Saver

from project import db

from library.prefix_file_utcnow import prefix_file_utcnow

from foo.forms import ThingySaveForm

from foo.models import Thingy

mod = Blueprint('foo', __name__)

@mod.route('/', methods=['GET', 'POST'])

def home():

"""Displays the Flask S3 Save Example home page."""

model = Thingy.query.first() or Thingy()

form = ThingySaveForm(obj=model)

if form.validate_on_submit():

image_orig = model.image

image_storage_type_orig = model.image_storage_type

image_bucket_name_orig = model.image_storage_bucket_name

# Initialise s3-saver.

image_saver = S3Saver(

storage_type=app.config['USE_S3'] and 's3' or None,

bucket_name=app.config['S3_BUCKET_NAME'],

access_key_id=app.config['AWS_ACCESS_KEY_ID'],

access_key_secret=app.config['AWS_SECRET_ACCESS_KEY'],

field_name='image',

storage_type_field='image_storage_type',

bucket_name_field='image_storage_bucket_name',

base_path=app.config['UPLOADS_FOLDER'],

static_root_parent=path.abspath(

path.join(app.config['PROJECT_ROOT'], '..')))

form.populate_obj(model)

if form.image.data:

filename = prefix_file_utcnow(model, form.image.data)

filepath = path.abspath(

path.join(

path.join(

app.config['UPLOADS_FOLDER'],

app.config['THINGY_IMAGE_RELATIVE_PATH']),

filename))

# Best to pass in a BytesIO to S3Saver, containing the

# contents of the file to save. A file from any source

# (e.g. in a Flask form submission, a

# werkzeug.datastructures.FileStorage object; or if

# reading in a local file in a shell script, perhaps a

# Python file object) can be easily converted to BytesIO.

# This way, S3Saver isn't coupled to a Werkzeug POST

# request or to anything else. It just wants the file.

temp_file = BytesIO()

form.image.data.save(temp_file)

# Save the file. Depending on how S3Saver was initialised,

# could get saved to local filesystem or to S3.

image_saver.save(

temp_file,

app.config['THINGY_IMAGE_RELATIVE_PATH'] + filename,

model)

# If updating an existing image,

# delete old original and thumbnails.

if image_orig:

if image_orig != model.image:

filepath = path.join(

app.config['UPLOADS_FOLDER'],

image_orig)

image_saver.delete(filepath,

storage_type=image_storage_type_orig,

bucket_name=image_bucket_name_orig)

glob_filepath_split = path.splitext(path.join(

app.config['MEDIA_THUMBNAIL_FOLDER'],

image_orig))

glob_filepath = glob_filepath_split[0]

glob_matches = image_saver.find_by_path(

glob_filepath,

storage_type=image_storage_type_orig,

bucket_name=image_bucket_name_orig)

for filepath in glob_matches:

image_saver.delete(

filepath,

storage_type=image_storage_type_orig,

bucket_name=image_bucket_name_orig)

else:

model.image = image_orig

# Handle image deletion

if form.image_delete.data and image_orig:

filepath = path.join(

app.config['UPLOADS_FOLDER'], image_orig)

# Delete the file. In this case, we have to pass in

# arguments specifying whether to delete locally or on

# S3, as this should depend on where the file was

# originally saved, rather than on how S3Saver was

# initialised.

image_saver.delete(filepath,

storage_type=image_storage_type_orig,

bucket_name=image_bucket_name_orig)

# Also delete thumbnails

glob_filepath_split = path.splitext(path.join(

app.config['MEDIA_THUMBNAIL_FOLDER'],

image_orig))

glob_filepath = glob_filepath_split[0]

# S3Saver can search for files too. When searching locally,

# it uses glob(); when searching on S3, it uses key

# prefixes.

glob_matches = image_saver.find_by_path(

glob_filepath,

storage_type=image_storage_type_orig,

bucket_name=image_bucket_name_orig)

for filepath in glob_matches:

image_saver.delete(filepath,

storage_type=image_storage_type_orig,

bucket_name=image_bucket_name_orig)

model.image = ''

model.image_storage_type = ''

model.image_storage_bucket_name = ''

if form.image.data or form.image_delete.data:

db.session.add(model)

db.session.commit()

flash('Thingy %s' % (

form.image_delete.data and 'deleted' or 'saved'),

'success')

else:

flash(

'Please upload a new thingy or delete the ' +

'existing thingy',

'warning')

return redirect(url_for('foo.home'))

return render_template('home.html',

form=form,

model=model)

(From: https://github.com/Jaza/flask-s3-save-example/blob/master/project/foo/views.py).

As is hopefully evident in the sample code above, the idea with s3-saver is that as little S3-specific code as possible is needed, when performing operations on a file. Just find, save, and delete files as usual, per the user's input, without worrying about the details of that file's storage back-end.

s3-saver uses the excellent Python boto library, as well as Python's built-in file handling functions, so that you don't have to. As you can see in the sample code, you don't need to directly import either boto, or the file-handling functions such as glob or os.remove. All you need to import is io.BytesIO, and os.path, in order to be able to pass s3-saver the parameters that it needs.

Using url-for-s3

This is a simple utility function, that generates a URL to a given S3-based file. It's designed to match flask.url_for as closely as possible, so that one can be swapped out for the other with minimal fuss.

from __future__ import print_function

from flask import url_for

from url_for_s3 import url_for_s3

from project import db

class Thingy(db.Model):

"""Sample model for flask-s3-save-example."""

id = db.Column(db.Integer(), primary_key=True)

image = db.Column(db.String(255), default='')

image_storage_type = db.Column(db.String(255), default='')

image_storage_bucket_name = db.Column(db.String(255), default='')

def __repr__(self):

return 'A thingy'

@property

def image_url(self):

from flask import current_app as app

return (self.image

and '%s%s' % (

app.config['UPLOADS_RELATIVE_PATH'],

self.image)

or None)

@property

def image_url_storageaware(self):

if not self.image:

return None

if not (

self.image_storage_type

and self.image_storage_bucket_name):

return url_for(

'static',

filename=self.image_url,

_external=True)

if self.image_storage_type != 's3':

raise ValueError((

'Storage type "%s" is invalid, the only supported ' +

'storage type (apart from default local storage) ' +

'is s3.') % self.image_storage_type)

return url_for_s3(

'static',

bucket_name=self.image_storage_bucket_name,

filename=self.image_url)

(From: https://github.com/Jaza/flask-s3-save-example/blob/master/project/foo/models.py).

The above sample code illustrates how I typically use url_for_s3. For a given instance of a model, if that model's file is stored locally, then generate its URL using flask.url_for; otherwise, switch to url_for_s3. Only one extra parameter is needed: the S3 bucket name.

{% if model.image %}

<p><a href="{{ model.image_url_storageaware }}">View original</a></p>

{% endif %}

(From: https://github.com/Jaza/flask-s3-save-example/blob/master/templates/home.html).

I can then easily show the "storage-aware URL" for this model in my front-end templates.

Using flask-thumbnails-s3

In my use case, the majority of the files being uploaded are images, and most of those images need to be resized when displayed in the front-end. Also, ideally, the dimensions for resizing shouldn't have to be pre-specified (i.e. thumbnails shouldn't only be able to get generated when the original image is first uploaded); new thumbnails of any size should get generated on-demand per the templates' needs. The front-end may change according to the design / branding whims of clients and other stakeholders, further on down the road.

flask-thumbnails handles just this workflow for local files; so, I decided to fork it and to create flask-thumbnails-s3, which works the same as flask-thumbnails when set to use local files, but which can also store and retrieve thumbnails on a S3 bucket.

{% if image %}

<div>

<img src="{{ image|thumbnail(size,

crop=crop,

quality=quality,

storage_type=storage_type,

bucket_name=bucket_name) }}"

alt="{{ alt }}" title="{{ title }}" />

</div>

{% endif %}

(From: https://github.com/Jaza/flask-s3-save-example/blob/master/templates/macros/imagethumb.html).

Like its parent project, flask-thumbnails-s3 is most commonly invoked by way of a template filter. If a thumbnail of the given original file exists, with the specified size and attributes, then it's returned straightaway; if not, then the original file is retrieved, a thumbnail is generated, and the thumbnail is saved to the specified storage back-end.

At the moment, flask-thumbnails-s3 blocks the running thread while it generates a thumbnail and saves it to S3. Ideally, this task would get sent to a queue, and a "dummy" thumbnail would be returned in the immediate request, until the "real" thumbnail is ready in a later request. The Sorlery plugin for Django uses the queued approach. It would be cool if flask-thumbnails-s3 (optionally) did the same. Anyway, it works without this fanciness for now; extra contributions welcome!

(By the way, in my testing, this is much less of a problem if your Flask app is deployed on an Amazon EC2 box, particularly if it's in the same region as your S3 bucket; unsurprisingly, there appears to be much less latency between an EC2 server and S3, than there is between a non-Amazon server and S3).

Using flask-admin-s3-upload

The purpose of flask-admin-s3-upload is basically to provide the same 'save' functionality as s3-saver, but automatically within Flask-Admin. It does this by providing alternatives to the flask_admin.form.upload.FileUploadField and flask_admin.form.upload.ImageUploadField classes, namely flask_admin_s3_upload.S3FileUploadField and flask_admin_s3_upload.S3ImageUploadField.

(Anecdote: I actually wrote flask-admin-s3-upload before any of the other tools in this suite, because I began by working with a part of my project that has no custom front-end, only a Flask-Admin based management console).

Using the utilities provided by flask-admin-s3-upload is fairly simple:

from os import path

from flask_admin_s3_upload import S3ImageUploadField

from project import admin, app, db

from foo.models import Thingy

from library.admin_utils import ProtectedModelView

from library.prefix_file_utcnow import prefix_file_utcnow

class ThingyView(ProtectedModelView):

column_list = ('image',)

form_excluded_columns = ('image_storage_type',

'image_storage_bucket_name')

form_overrides = dict(

image=S3ImageUploadField)

form_args = dict(

image=dict(

base_path=app.config['UPLOADS_FOLDER'],

relative_path=app.config['THINGY_IMAGE_RELATIVE_PATH'],

url_relative_path=app.config['UPLOADS_RELATIVE_PATH'],

namegen=prefix_file_utcnow,

storage_type_field='image_storage_type',

bucket_name_field='image_storage_bucket_name',

))

def scaffold_form(self):

form_class = super(ThingyView, self).scaffold_form()

static_root_parent = path.abspath(

path.join(app.config['PROJECT_ROOT'], '..'))

if app.config['USE_S3']:

form_class.image.kwargs['storage_type'] = 's3'

form_class.image.kwargs['bucket_name'] = \

app.config['S3_BUCKET_NAME']

form_class.image.kwargs['access_key_id'] = \

app.config['AWS_ACCESS_KEY_ID']

form_class.image.kwargs['access_key_secret'] = \

app.config['AWS_SECRET_ACCESS_KEY']

form_class.image.kwargs['static_root_parent'] = \

static_root_parent