In order to be GDPR-compliant, and in order to just be a good netizen, I made sure, when building GreenAsh v5 earlier this year, to not use services that set cookies at all, wherever possible. In previous iterations of GreenAsh, I used Google Analytics, which (like basically all Google services) is a notorious GDPR offender; this time around, I instead used Cloudflare Web Analytics, which is a good enough replacement for my modest needs, and which ticks all the privacy boxes.

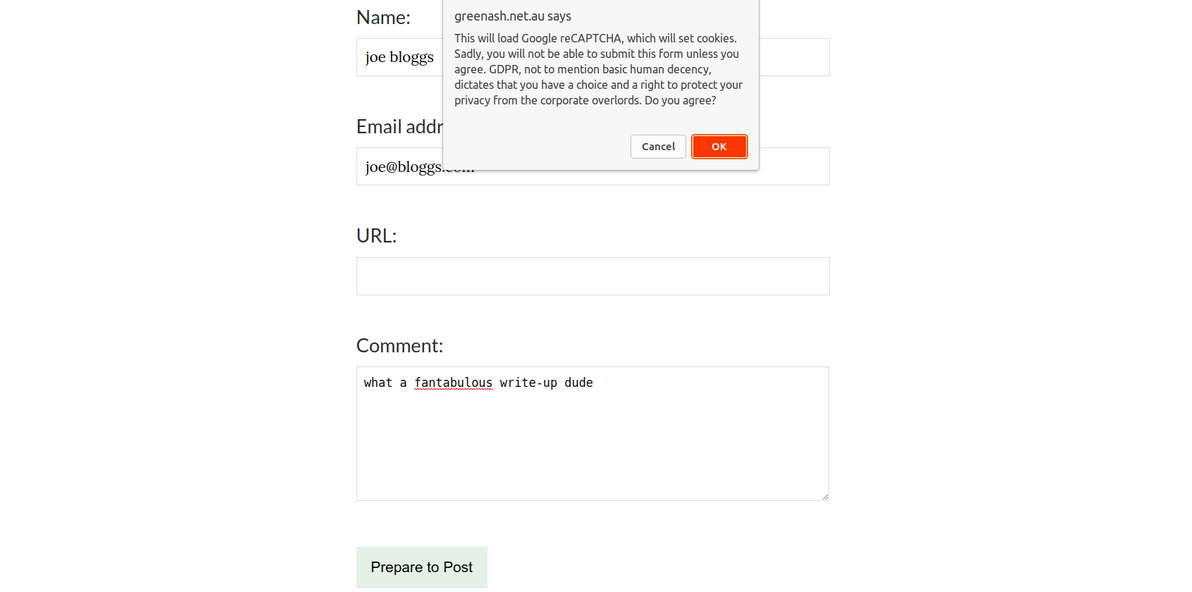

However, on pages with forms at least, I still need Google reCAPTCHA. I'd like to instead use the privacy-conscious hCaptcha, but Netlify Forms only supports reCAPTCHA, so I'm stuck with it for now. Here's how I seek the user's consent before loading reCAPTCHA.

ready(() => {
  const submitButton = document.getElementById('submit-after-recaptcha');

  // No reCAPTCHA-protected form on this page: nothing to do.
  if (submitButton == null) {
    return;
  }

  window.originalSubmitFormButtonText = submitButton.textContent;
  submitButton.textContent = 'Prepare to ' + window.originalSubmitFormButtonText;

  submitButton.addEventListener("click", e => {
    // The button text has been restored, so consent was already given:
    // let the click submit the form as normal.
    if (submitButton.textContent === window.originalSubmitFormButtonText) {
      return;
    }

    const agreeToCookiesMessage =
      'This will load Google reCAPTCHA, which will set cookies. Sadly, you will ' +
      'not be able to submit this form unless you agree. GDPR, not to mention ' +
      'basic human decency, dictates that you have a choice and a right to protect ' +
      'your privacy from the corporate overlords. Do you agree?';

    if (window.confirm(agreeToCookiesMessage)) {
      const recaptchaScript = document.createElement('script');
      recaptchaScript.setAttribute(
        'src',
        'https://www.google.com/recaptcha/api.js?onload=recaptchaOnloadCallback' +
        '&render=explicit');
      recaptchaScript.setAttribute('async', '');
      recaptchaScript.setAttribute('defer', '');
      document.head.appendChild(recaptchaScript);
    }

    e.preventDefault();
  });
});

I load this JS on every page, thus putting it on the lookout for forms that require reCAPTCHA (in my case, that's comment forms and the contact form). It changes the form's submit button text from, for example, "Send" to "Prepare to Send" (as a hint to the user that clicking the button won't actually submit the form, and that further action is required before that happens).

It hijacks the button's click event, such that if the user hasn't yet provided consent, it shows a prompt. When consent is given, the Google reCAPTCHA JS is added to the DOM, and reCAPTCHA is told to call recaptchaOnloadCallback when it's done loading. If the user has already provided consent, then the button's default click behaviour of triggering form submission is allowed.

{%- if params.recaptchaKey %}
<div id="recaptcha-wrapper"></div>
<script type="text/javascript">
  window.recaptchaOnloadCallback = () => {
    document.getElementById('submit-after-recaptcha').textContent =
      window.originalSubmitFormButtonText;
    window.grecaptcha.render(
      'recaptcha-wrapper', {'sitekey': '{{ params.recaptchaKey }}'}
    );
  };
</script>
{%- endif %}

I embed this HTML inside every form that requires reCAPTCHA. It defines the wrapper element into which the reCAPTCHA is injected. It also defines recaptchaOnloadCallback, which changes the submit button text back to what it originally was (e.g. from "Prepare to Send" back to "Send"), and which actually renders the reCAPTCHA widget.

<!-- ... -->
<form other-attributes-here data-netlify-recaptcha>
  <!-- ... -->
  {% include 'components/recaptcha_loader.njk' %}
  <p>
    <button type="submit" id="submit-after-recaptcha">Send</button>
  </p>
</form>
<!-- ... -->

This is what my GDPR-compliant, reCAPTCHA-enabled, Netlify-powered contact form looks like. The data-netlify-recaptcha attribute tells Netlify to require a successful reCAPTCHA challenge in order to accept a submission from this form.

That's all there is to it! Not rocket science, but I just thought I'd share this with the world, because despite there being a gazillion posts on the interwebz advising that you "ask for consent before setting cookies", there seem to be surprisingly few step-by-step instructions explaining how to actually do that. And the standard advice appears to be to use a third-party script / plugin that implements an "accept cookies" popup for you, even though it's really easy to implement it yourself.


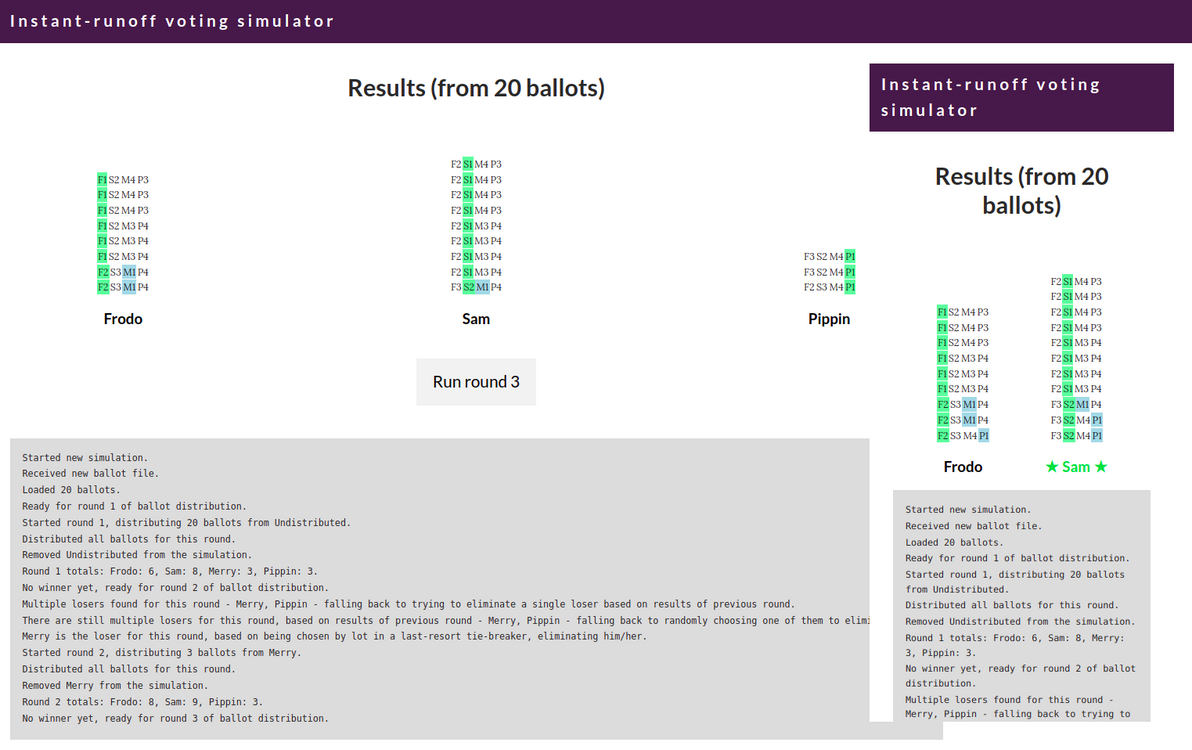

I hope that, by being an interactive, animated, round-by-round visualisation of the ballot distribution process, this simulation gives you a deeper understanding of how instant-runoff voting works.

The rules coded into the simulator are those used for the House of Representatives in Australian federal elections, as specified in the Commonwealth Electoral Act 1918 (Cth) s274.
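To make the round-by-round process concrete, here is a minimal sketch of an instant-runoff count in Python. It is not the simulator's actual code (which is vanilla JS); the function name and the ballot format are assumptions made purely for illustration. It also deliberately ignores the tie-breaking provisions of the Act: on a tie for last place, it simply excludes whichever tied candidate sorts first alphabetically. It assumes at least one ballot.

```python
from collections import Counter


def instant_runoff(ballots):
    """Return (winner, rounds) for a list of ballots.

    Each ballot is a list of candidate names in preference order.
    Simplified: ties for last place are broken alphabetically, which is
    an assumption of this sketch, not the rule used in real elections.
    """
    remaining = {name for ballot in ballots for name in ballot}
    rounds = []
    while True:
        # Count each ballot towards its highest-ranked remaining candidate.
        tally = Counter({name: 0 for name in remaining})
        for ballot in ballots:
            for name in ballot:
                if name in remaining:
                    tally[name] += 1
                    break
        rounds.append(dict(tally))
        total = sum(tally.values())
        leader, leader_votes = max(tally.items(), key=lambda kv: (kv[1], kv[0]))
        # A candidate wins with an absolute majority of the live votes.
        if leader_votes * 2 > total or len(remaining) == 1:
            return leader, rounds
        # Otherwise exclude the lowest-polling candidate and redistribute.
        loser = min(sorted(tally), key=lambda name: tally[name])
        remaining.remove(loser)


# Made-up ballots: Apple leads on first preferences, but has no majority.
ballots = (
    [["Apple", "Cherry", "Banana"]] * 4
    + [["Banana", "Cherry", "Apple"]] * 3
    + [["Cherry", "Banana", "Apple"]] * 2
)
winner, rounds = instant_runoff(ballots)
for number, tally in enumerate(rounds, start=1):
    print("Round", number, tally)
print("Winner:", winner)
```

In this made-up example, Cherry is excluded after the first round, and Cherry's two ballots flow to Banana, who then overtakes Apple. That is the "every preference matters" effect in miniature.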

There are other tools around that do basically the same thing as this simulator. Kudos to the authors of those tools. However, they only output a text log or a text-based table; they don't provide any visualisation or animation of the vote-counting process. And they spit out the results for all rounds at once, so they don't show (quite as clearly) how the results evolve from one round to the next.

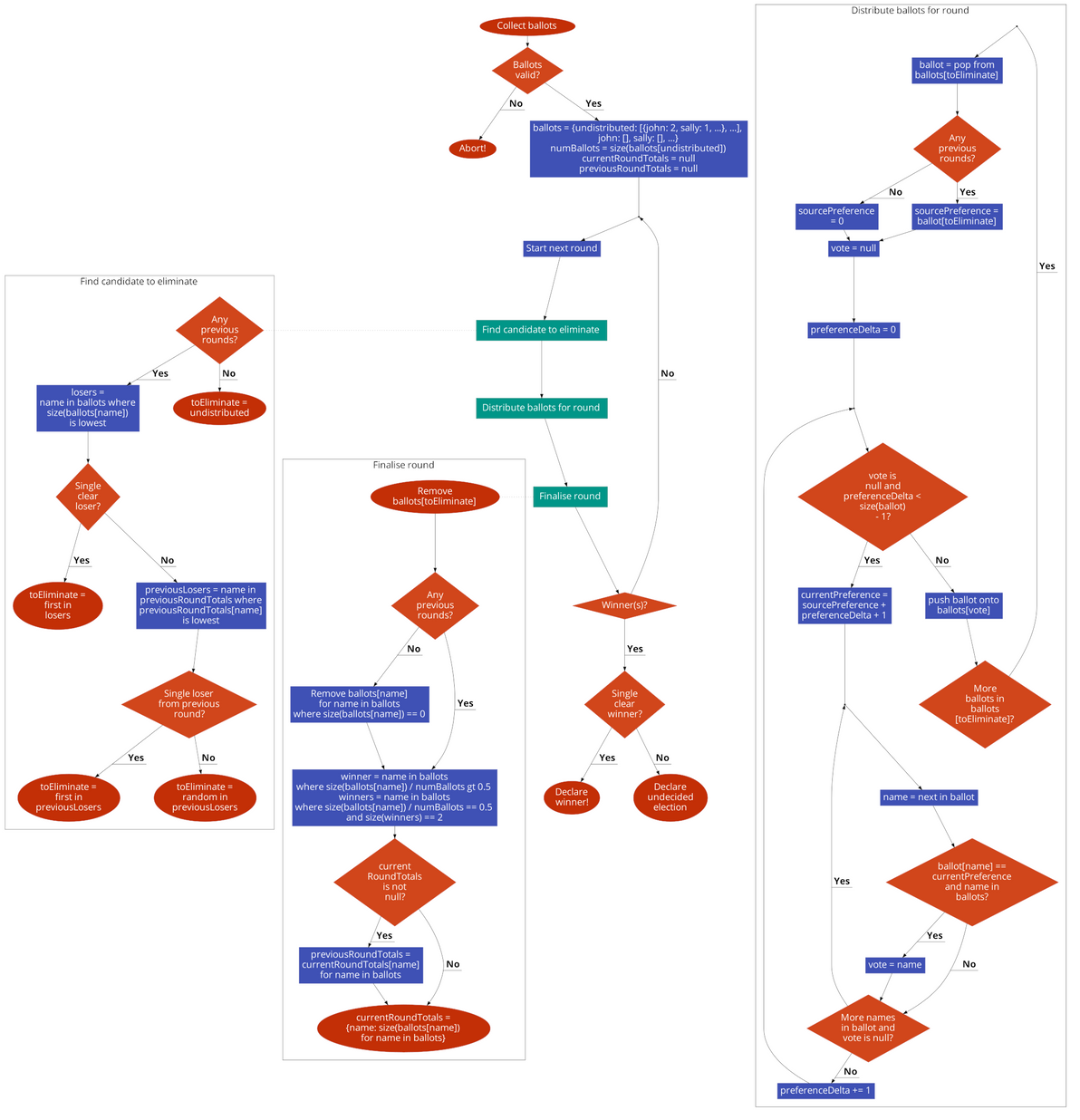

Source code is all up on GitHub. It's coded in vanilla JS, with the help of the lovely Papa Parse library for CSV handling. I made a nice flowchart version of the code too.

With a federal election coming up, here in Australia, in just a few days' time, this simulator means there's now one less excuse for any of my fellow citizens to not know how the voting system works. And, in this election more than ever, it's vital that you properly understand why every preference matters, and how you can make every preference count.

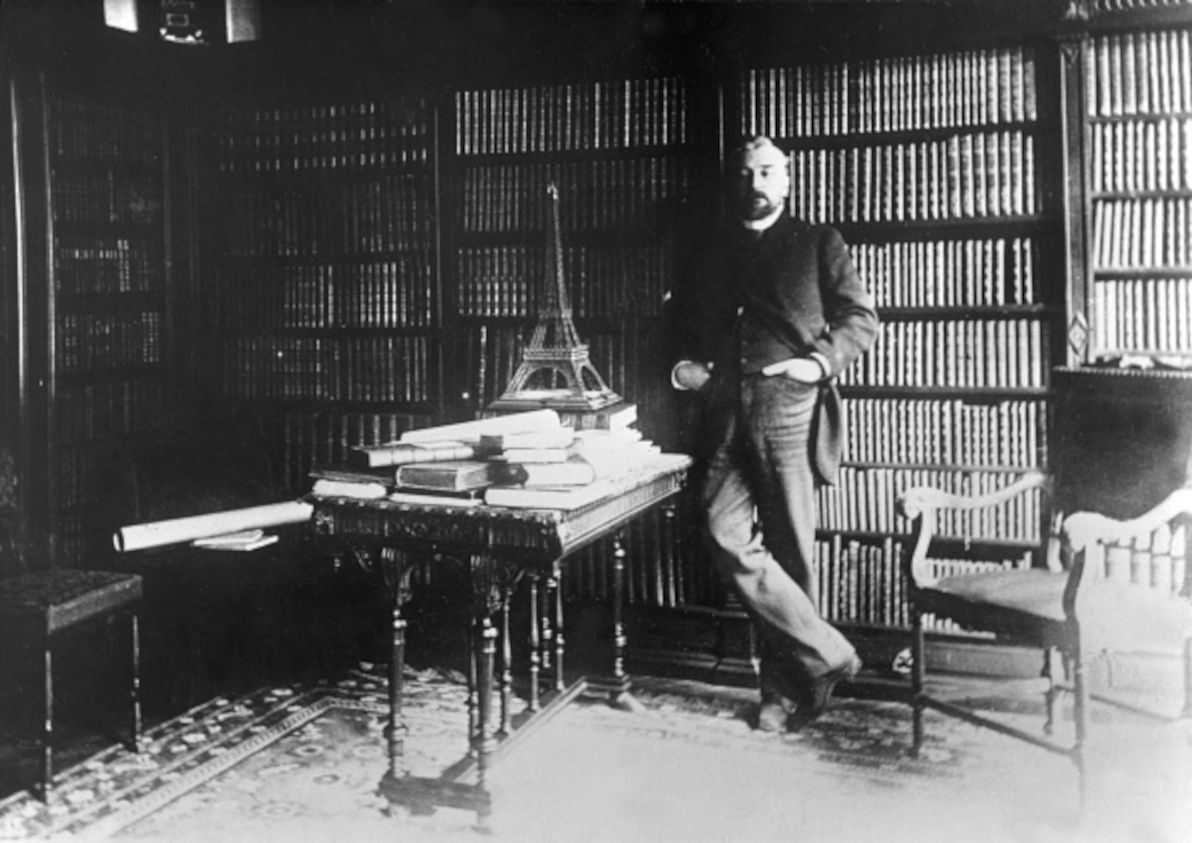

From reading the wonderful epic novel Paris, by Edward Rutherfurd, I learned some facts about Gustave Eiffel's life, and about the Eiffel Tower's original conception, its construction, and its first few decades as the exclamation mark of the Paris skyline, that both surprised and intrigued me. Allow me to share these tidbits of history in this here humble article.

Image source: domain.com.au.

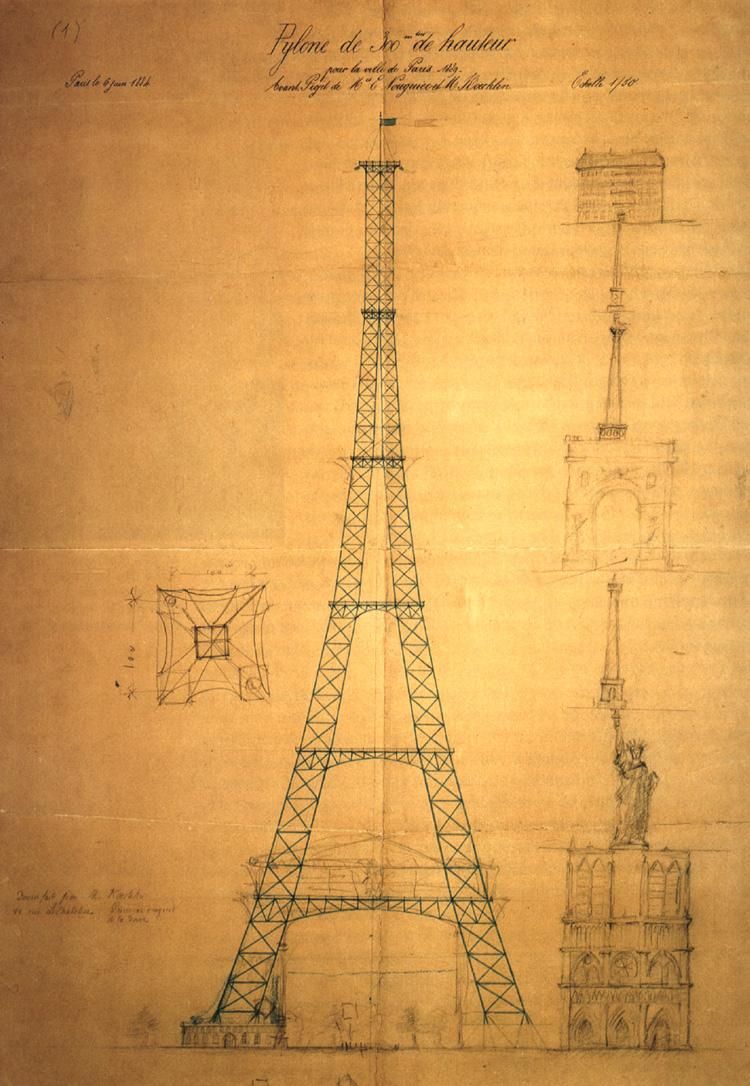

To begin with, the Eiffel Tower was not designed by Gustave Eiffel. The original idea and the first drafts of the design were produced by one Maurice Koechlin, who worked at Eiffel's firm. The same is true of Eiffel's other great claim to fame, the Statue of Liberty (which he built just before the Tower): after Eiffel's firm took over the project of building the statue, it was Koechlin who came up with Liberty's ingenious inner iron truss skeleton, and outer copper "skin", that makes her highly wind-resistant in the midst of blustery New York Harbour. It was a similar story for the Garabit Viaduct, and various other projects: although Eiffel himself was a highly capable engineer, it was Koechlin who was the mastermind, while Eiffel was the salesman and the celebrity.

Eiffel, and his colleagues Maurice Koechlin and Émile Nouguier, were engineers, not designers. In particular, they were renowned bridge-builders of their time. As such, their tower design was all about the practicalities of wind resistance, thermal expansion, and material strength; the Tower's aesthetic qualities were secondary considerations, with architect Stephen Sauvestre only being invited to contribute an artistic touch (such as the arches on the Tower's base), after the initial drafts were completed.

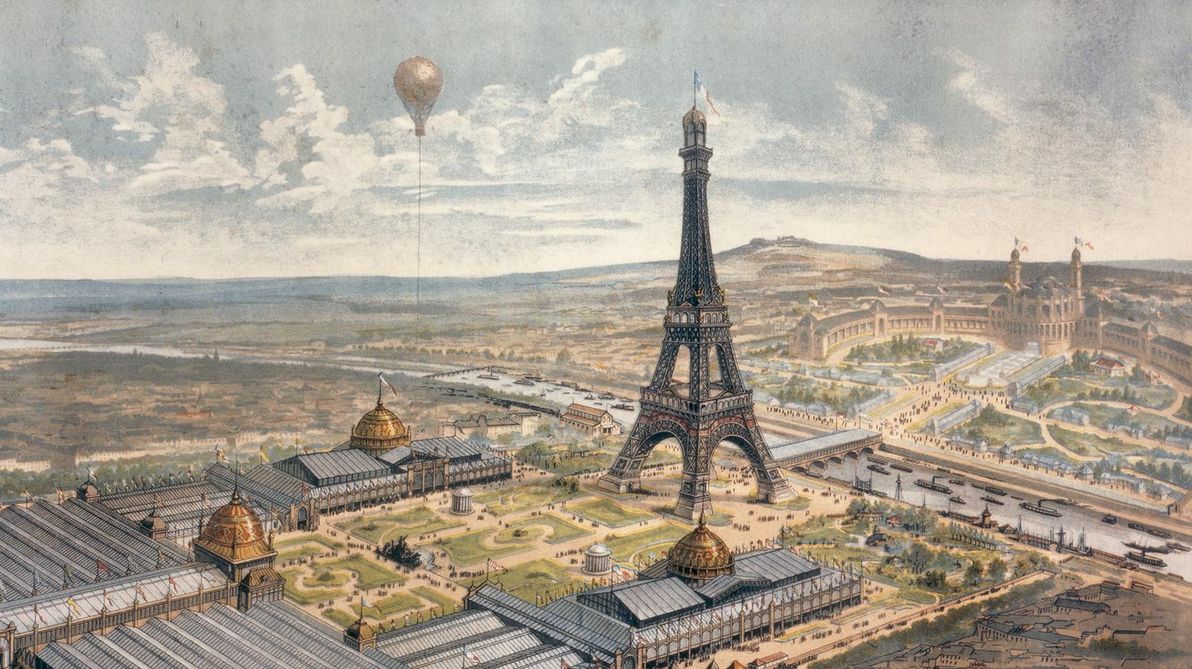

Image source: Wikimedia Commons.

The Eiffel Tower was built as the centrepiece of the 1889 Exposition Universelle in Paris, after winning the 1886 competition that was held to find a suitable design. However, after choosing it, the City of Paris then put forward only a small fraction of the estimated money needed to build it, rather than the Tower's full estimated budget. As such, Eiffel agreed to cover the remainder of the construction costs out of his own pocket, but only on the condition that he receive all commercial income from the Tower, for 20 years from the date of its inauguration. This proved to be much to Eiffel's advantage in the long term, as the Tower's income just during the Exposition Universelle itself – i.e. just during the first six months of its operating life – more than covered Eiffel's out-of-pocket costs; and the Tower has consistently operated at a profit ever since.

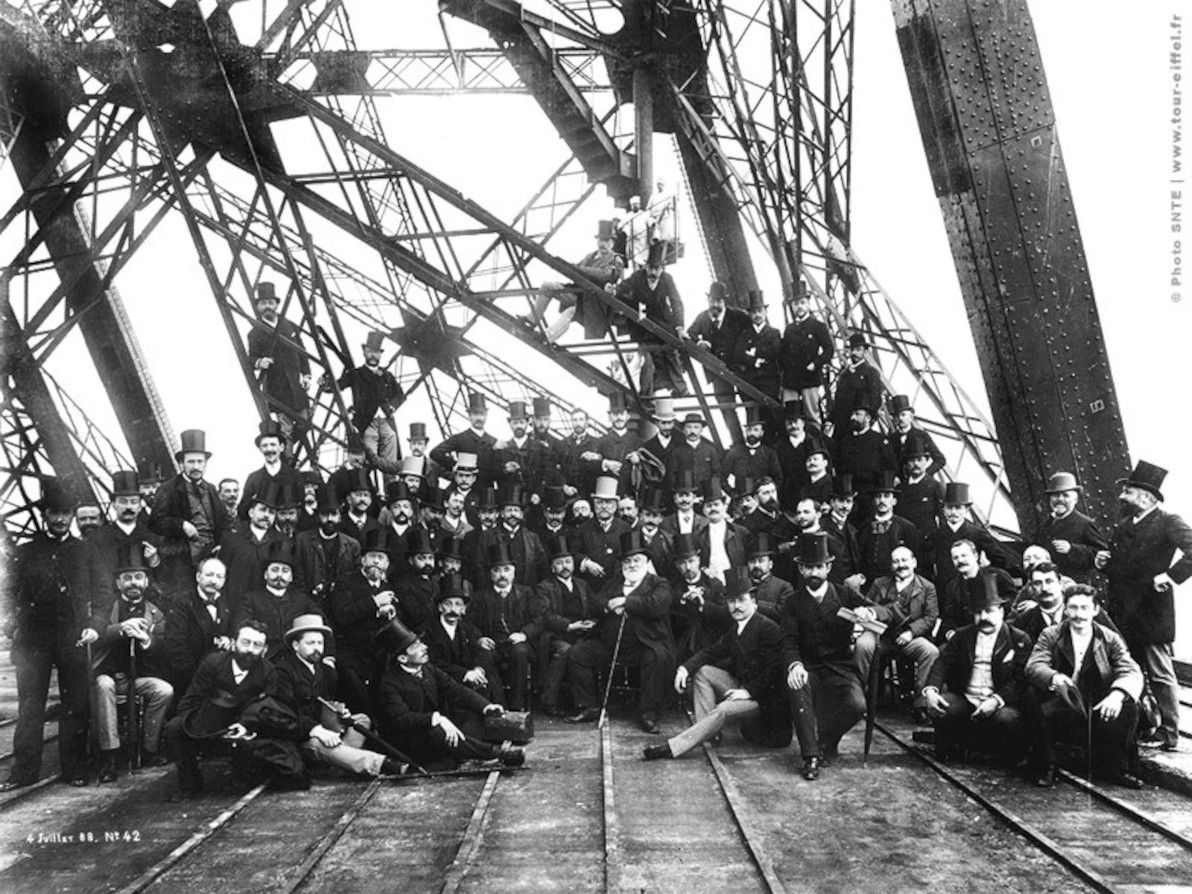

Image source: toureiffel.paris.

Pioneering construction projects of the 19th century (and, indeed, of all human history before then too) were, in general, hardly renowned for their occupational safety standards. I had always assumed that the building of the Eiffel Tower, which saw workmen reach more dizzying heights than ever before, had taken no small toll of lives. However, it just so happens that Gustave Eiffel was more than a mere engineer and a bourgeois; he was also a pioneer of safety. Thanks to his insistence on the use of devices such as guard rails and movable stagings, the Eiffel Tower project amazingly saw only one fatality; and it wasn't even really a workplace accident, as the deceased, a workman named Angelo Scagliotti, climbed the tower while off duty, to impress his girlfriend, and sadly lost his footing.

The Tower's three levels, and its lifts and staircases, have always been accessible to the general public. However, something that not all visitors to the Tower may be aware of, is that near the summit of the Tower, just above the third level's viewing platform, sits what was originally Gustave Eiffel's private apartment. For the 20 years that he owned the rights to the Tower, Eiffel also enjoyed his own bachelor pad at the top! Eiffel reportedly received numerous requests to rent out the pad for a night, but he never did so, instead only inviting distinguished guests of his choosing, such as (no less than) Thomas Edison. The apartment is now open to the public as a museum. Still no word regarding when it will be listed on Airbnb; although another private apartment was more recently added lower down in the Tower and was rented out.

Image source: The Independent.

So why did Eiffel's contract for the rights to the Tower stipulate 20 years? Because the plan was, that after gracing the Paris cityscape for that many years, it was to be torn down! That's right, the Eiffel Tower – which today seems like such an invincible monument – was only ever meant to be a temporary structure. And what saved it? Was it that the City Government came to realise what a tremendous cash cow it could inherit? Was it that Parisians came to love and to admire what they had considered to be a detestable blight upon their elegant city? Not at all! The only thing that saved the Eiffel Tower was that, a few years prior to its scheduled doomsday, a little thing known as radio had been invented. The French military, who had started using the Tower as a radio antenna – realising that it was the best antenna in all of Paris, if not the world at that time – promptly declared the Tower vital to the defence of Paris, thus staving off the wrecking ball.

Image source: busy.org.

And the rest, as they say, is history. There are plenty more intriguing anecdotes about the Eiffel Tower, if you're interested in delving further. The Tower continued to have a colourful life, after the City of Paris relieved Eiffel of his rights to it in 1909, and after his death in 1923; and the story continues to this day. So, next time you have the good fortune of visiting La belle Paris, remember that there's much more to her tallest monument than just a fine view from the top.

foodutils) in multiple places, there are a variety of steps at your disposal. The most obvious step is to move that foodutils code into its own file (thus making it a Python module), and to then import that module wherever else you want in the codebase.

Most of the time, doing that is enough. The Python module importing system is powerful, yet simple and elegant.

But… what happens a few months down the track, when you're working on two new codebases (let's call them TortelliniFest and GnocchiFest – perhaps they're for new clients too), that could also benefit from re-using foodutils from your old project? What happens when you make some changes to foodutils, for the new projects, but those changes would break compatibility with the old LasagnaFest codebase?

What happens when you want to give a super-charged boost to your open source karma, by contributing foodutils to the public domain, but separated from the cruft that ties it to LasagnaFest and Co? And what do you do with secretfoodutils, which for licensing reasons (it contains super-yummy but super-secret sauce) can't be made public, but which should ideally also be separated from the LasagnaFest codebase for easier re-use?

Image source: Hoedspruit Endangered Species Centre.

Or – not to be forgotten – what happens when, on one abysmally rainy day, you take a step back and audit the LasagnaFest codebase, and realise that it's got no less than 38 different *utils chunks of code strewn around the place, and you ponder whether surely keeping all those utils within the LasagnaFest codebase is really the best way forward?

Moving foodutils to its own module file was a great first step; but it's clear that in this case, a more drastic measure is needed. In this case, it's time to split off foodutils into a separate, independent codebase, and to make it an external dependency of the LasagnaFest project, rather than an internal component of it.

This article is an introduction to the how and the why of cutting up parts of a Python codebase into dependencies. I've just explained a fair bit of the why. As for the how: in a nutshell, pip (for installing dependencies), the public PyPI repo (for hosting open-sourced dependencies), and a private PyPI repo (for hosting proprietary dependencies). Read on for more details.

Levels of modularity

One of the (many) joys of coding in Python is the way that it encourages modularity. For example, let's start with this snippet of completely non-modular code:

foodgreeter.py:

dude_name = 'Johnny'

food_today = 'lasagna'

print("Hey {dude_name}! Want a {food_today} today?".format(

dude_name=dude_name,

food_today=food_today))

There are, in my opinion, three different levels of re-factoring that you can apply, in order to make it more modular. You can think of these levels like the layers of a lasagna, if you want. Or not.

Each successive level of re-factoring involves a bit more work in the short-term, but results in more convenient re-use in the long-term. So, which level is appropriate, depends on the likelihood that you (or others) will want to re-use a given chunk of code in the future.

First, you can split the logic out of the procedural blurg, and into a function in the same file:

foodgreeter.py:

def greet_dude_with_food(dude_name, food_today):
    return "Hey {dude_name}! Want a {food_today} today?".format(
        dude_name=dude_name,
        food_today=food_today)


dude_name = 'Johnny'
food_today = 'lasagna'

print(greet_dude_with_food(
    dude_name=dude_name,
    food_today=food_today))

Second, you can move that functionality into a separate file, and import it using Python's module imports system:

foodutils.py:

def greet_dude_with_food(dude_name, food_today):
    return "Hey {dude_name}! Want a {food_today} today?".format(
        dude_name=dude_name,
        food_today=food_today)

foodgreeter.py:

from foodutils import greet_dude_with_food

dude_name = 'Johnny'

food_today = 'lasagna'

print(greet_dude_with_food(

dude_name=dude_name,

food_today=food_today))

And, finally, you can move that file out of your codebase, upload it to a Python package repository (the most common such repository being PyPI), and then declare it as a dependency of your codebase using pip:

requirements.txt:

foodutils==1.0.0

Run command:

pip install -r requirements.txt

foodgreeter.py:

from foodutils import greet_dude_with_food

dude_name = 'Johnny'

food_today = 'lasagna'

print(greet_dude_with_food(

dude_name=dude_name,

food_today=food_today))

Image source: Organize and Decorate Everything.

As I said, achieving this last level of modularity isn't always necessary or appropriate, due to the overhead involved. For a given chunk of code, there are always going to be trade-offs to consider, and as a developer it's always going to be your judgement call.

Splitting out code

For the times when it is appropriate to go that "last mile" and split code out as an external dependency, there are (in my opinion) insufficient resources regarding how to go about it. I hope, therefore, that this section serves as a decent guide on the matter.

Factor out coupling

The first step in making until-now "project code" an external dependency, is removing any coupling that the chunk of code may have to the rest of the codebase. For example, the foodutils code shown above is nice and de-coupled; but what if it instead looked like so:

foodutils.py:

from mysettings import NUM_QUESTION_MARKS

def greet_dude_with_food(dude_name, food_today):
    return "Hey {dude_name}! Want a {food_today} today{q_marks}".format(
        dude_name=dude_name,
        food_today=food_today,
        q_marks='?'*NUM_QUESTION_MARKS)

This would be problematic, because this code relies on the assumption that it lives in a codebase containing a mysettings module, and that the configuration value NUM_QUESTION_MARKS is defined within that module.

We can remove this coupling by changing NUM_QUESTION_MARKS to be a parameter passed to greet_dude_with_food, like so:

foodutils.py:

def greet_dude_with_food(dude_name, food_today, num_question_marks):
    return "Hey {dude_name}! Want a {food_today} today{q_marks}".format(
        dude_name=dude_name,
        food_today=food_today,
        q_marks='?'*num_question_marks)

The dependent code in this project could then pass in the required config value when it calls greet_dude_with_food, like so:

foodgreeter.py:

from foodutils import greet_dude_with_food

from mysettings import NUM_QUESTION_MARKS

dude_name = 'Johnny'

food_today = 'lasagna'

print(greet_dude_with_food(

dude_name=dude_name,

food_today=food_today,

num_question_marks=NUM_QUESTION_MARKS))

Once the code we're re-factoring no longer depends on anything elsewhere in the codebase, it's ready to be made an external dependency.
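One practical pay-off of removing the coupling is that the function can be exercised entirely on its own, with no mysettings module in sight. Here is a minimal sketch of that; the function is repeated from above so that the snippet is self-contained, and the sample values are made up.

```python
def greet_dude_with_food(dude_name, food_today, num_question_marks):
    return "Hey {dude_name}! Want a {food_today} today{q_marks}".format(
        dude_name=dude_name,
        food_today=food_today,
        q_marks='?' * num_question_marks)


# No project settings needed: the caller supplies the config value directly.
greeting = greet_dude_with_food('Johnny', 'lasagna', num_question_marks=3)
print(greeting)
```

If a snippet like this runs outside of the LasagnaFest codebase, that's a reasonable sign that the code is ready to be moved out.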

New repo for dependency

Next comes the step of physically moving the given chunk of code out of the project's codebase. In most cases, this means deleting the given file(s) from the project's version control repository (you are using version control, right?), and creating a new repo for those file(s) to live in.

For example, if you're using Git, the steps would be something like this:

mkdir /path/to/foodutils

cd /path/to/foodutils

git init .

mv /path/to/lasagnafest/project/foodutils.py .

git add .

git commit -m "Initial commit"

cd /path/to/lasagnafest

git rm project/foodutils.py

git commit -m "Moved foodutils to external dependency"

Add some metadata

The given chunk of code now has its own dedicated repo. But it's not yet a project, in its own right, and it can't yet be referenced as a dependency. To do that, we'll need to add some more files to the new repo, mainly consisting of metadata describing "who" this project is, and what it does.

First up, add a .gitignore file – I recommend the default Python .gitignore on GitHub. Feel free to customise as needed.

Next, add a version number to the code. The best way to do this, is to add it at the top of the main Python file, e.g. by adding this to the top of foodutils.py:

__version__ = '0.1.0'

After that, we're going to add the standard metadata files that almost all open-source Python projects have. Most importantly, a setup.py file that looks something like this:

import os

import setuptools

# Read __version__ out of foodutils.py as text, without importing the module.
module_path = os.path.join(os.path.dirname(__file__), 'foodutils.py')
version_line = [line for line in open(module_path)
                if line.startswith('__version__')][0]

# Strip the surrounding quotes and the trailing newline.
__version__ = version_line.split('__version__ = ')[-1][1:][:-2]

setuptools.setup(
    name="foodutils",
    version=__version__,
    url="https://github.com/misterfoo/foodutils",

    author="Mister foo",
    author_email="mister@foo.com",

    description="Utils for handling food.",
    long_description=open('README.rst').read(),

    py_modules=['foodutils'],
    zip_safe=False,
    platforms='any',

    install_requires=[],

    classifiers=[
        'Development Status :: 2 - Pre-Alpha',
        'Environment :: Web Environment',
        'Intended Audience :: Developers',
        'Operating System :: OS Independent',
        'Programming Language :: Python',
        'Programming Language :: Python :: 2',
        'Programming Language :: Python :: 2.7',
        'Programming Language :: Python :: 3',
        'Programming Language :: Python :: 3.3',
    ],
)

And also, a README.rst file:

foodutils

=========

Utils for handling food.

Once you've created those files, commit them to the new repo.
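A side note on the version-parsing lines in setup.py: they depend on the exact quoting and line ending of the __version__ line in foodutils.py. As a slightly more forgiving alternative, here is a sketch that pulls the version out with a regular expression instead. The helper name read_version is my own invention for illustration; it is not part of setuptools.

```python
import re


def read_version(source_text):
    """Extract the value of __version__ from a module's source text."""
    match = re.search(
        r"""^__version__\s*=\s*['"]([^'"]+)['"]""",
        source_text,
        re.MULTILINE)
    if match is None:
        raise RuntimeError("No __version__ line found")
    return match.group(1)


# Stand-in for the contents of foodutils.py.
sample_source = "__version__ = '0.1.0'\n\ndef greet():\n    pass\n"
print(read_version(sample_source))
```

In setup.py, you would feed it the contents of the module file, e.g. read_version(open(module_path).read()), and pass the result as the version argument.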

Push the repo

Great – the chunk of code now lives in its own repo, and it contains enough metadata for other projects to see what its name is, what version(s) of it there are, and what function(s) it performs. All that needs to be done now, is to decide where this repo will be hosted. But to do this, you first need to answer an important non-technical question: to open-source the code, or to keep it proprietary?

In general, you should open-source your dependencies whenever possible. You get more eyeballs (for free). Famous hairy people like Richard Stallman will send you flowers. If nothing else, you'll at least be able to always easily find your code, guaranteed (if you can't remember where it is, just Google it!). You get the drift. If open-sourcing the code, then the most obvious choice for where to host the repo is GitHub. (However, I'm not evangelising GitHub here, remember there are other options, kids).

Open source is kool, but sometimes you can't or you don't want to go down that route. That's fine, too – I'm not here to judge anyone, and I can't possibly be aware of anyone else's business / ownership / philosophical situation. So, if you want to keep the code all to your little self (or all to your little / big company's self), you're still going to have to host it somewhere. And no, "on my laptop" does not count as your code being hosted somewhere (well, technically you could just keep the repo on your own PC, and still reference it as a dependency, but that's a Bad Idea™). There are a number of hosting options: for example, on a VPS that you control; or using a managed service such as GitHub private, Bitbucket, or Assembla (note: once again, not promoting any specific service provider, just listing the main players as options).

So, once you've decided whether or not to open-source the code, and once you've settled on a hosting option, push the new repo to its hosted location.

Upload to PyPI

Nearly there now. The chunk of code has been de-coupled from its dependent project; it's been put in a new repo with the necessary metadata; and that repo is now hosted at a permanent location somewhere online. All that's left, is to make it known to the universe of Python projects, so that it can be easily listed as a dependency of other Python projects.

If you've developed with Python before (and if you've read this far, then I assume you have), then no doubt you've heard of pip. Being the Python package manager of choice these days, pip is the tool used to manage Python dependencies. pip can find dependencies from a variety of locations, but the place it looks first and foremost (by default) is on the Python Package Index (PyPI).

If your dependency is public and open-source, then you should add it to PyPI. Each time you release a new version, then (along with committing and tagging that new version in the repo) you should also upload it to PyPI. I won't go into the details in this article; please refer to the official docs for registering and uploading packages on PyPI. When following the instructions there, you'll generally want to package your code as a "universal wheel", you'll generally use the PyPI website form to register a new package, and you'll generally use twine to upload the package.

If your dependency is private and proprietary, then PyPI is not an option. The easiest way to deal with private dependencies (also the easiest way to deal with public dependencies, for that matter), is to not worry about proper Python packaging at all, and simply to use pip's ability to directly reference a source repo (including a specific commit / tag), e.g.:

pip install -e \

git+http://git.myserver.com/foodutils.git@0.1.0#egg=foodutils

However, that has a number of disadvantages, the most visible disadvantage being that pip install will run much slower, because it has to do a git pull every time you ask it to check that foodutils is installed (even if you specify the same commit / tag each time).

A better way to deal with private dependencies, is to create your own "private PyPI". Same as with public packages: each time you release a new version, then (along with committing and tagging that new version in the repo) you should also upload it to your private PyPI. For instructions regarding this, please refer to my guide for how to set up and use a private PyPI repo. Also, note that my guide is for quite a minimal setup, although it contains links to some alternative setup options, including more advanced and full-featured options. (And if using a private PyPI, then take note of my guide's instructions for what to put in your local ~/.pip/pip.conf file).

Reference the dependency

The chunk of code is now ready to be used as an external dependency, by any project. To do this, you simply list the package in your project's requirements.txt file; whether the package is on the public PyPI, or on a private PyPI of your own, the syntax is the same:

foodutils==0.1.0 # From pypi.myserver.com

Then, just run your dependencies through pip as usual:

pip install -r requirements.txt

And there you have it: foodutils is now an external dependency. You can list it as a requirement for LasagnaFest, TortelliniFest, GnocchiFest, and as many other projects as you need.
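If you want to confirm, from within Python, which version of a dependency pip actually installed, the standard library can tell you. This sketch assumes Python 3.8 or later (where importlib.metadata is available), which is newer than the versions listed in the setup.py classifiers earlier; the helper name installed_version is hypothetical.

```python
from importlib import metadata


def installed_version(package_name):
    """Return the installed version of a package, or None if it is absent."""
    try:
        return metadata.version(package_name)
    except metadata.PackageNotFoundError:
        return None


# Prints the version string if foodutils is installed, otherwise None.
print(installed_version('foodutils'))
```

A check like this can be handy in a deployment script, to catch the case where a project is still running against an older release of a shared package than its requirements.txt asks for.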

Final thoughts

This article was born out of a series of projects that I've been working on over the past few months (and that I'm still working on), written mainly in Flask (these apps are still in alpha; ergo, sorry, can't talk about their details yet). The size of the projects' codebases grew to be rather unwieldy, and the projects have quite a lot of shared functionality.

I started out by re-using chunks of code between the different projects, with the hacky solution of sym-linking from one codebase to another. This quickly became unmanageable. Once I could stand the symlinks no longer (and once I had some time for clean-up), I moved these shared chunks of code into separate repos, and referenced them as dependencies (with some being open-sourced and put on the public PyPI). Only in the last week or so, after losing patience with slow pip installs, and after getting sick of seeing far too many -e git+http://git… strings in my requirements.txt files, did I finally get around to setting up a private PyPI, for better dealing with the proprietary dependencies of these codebases.

I hope that this article provides some clear guidance regarding what can be quite a confusing task, i.e. that of creating and maintaining a private Python package index. Aside from being a technical guide, though, my aim in penning this piece is to explain how you can split off component parts of a monolithic codebase into re-usable, independent separate codebases; and to convey the advantages of doing so, in terms of code quality and maintainability.

Flask, my framework of choice these days, strives to consist of a series of independent projects (Flask, Werkzeug, Jinja, WTForms, and the myriad Flask-* add-ons), which are compatible with each other, but which are also useful stand-alone or with other systems. I think that this is a great example for everyone to follow, even humble "custom web-app" developers like myself. Bearing that in mind, devoting some time to splitting code out of a big bad client-project codebase, and creating more atomic packages (even if not open-source) upon whose shoulders a client-project can stand, is a worthwhile endeavour.


Image source: Australian Outback Buffalo Safaris.

Over the past century or so, much has been achieved in combating the famous Tyranny of Distance that naturally afflicts this land. High-quality road, rail, and air links now traverse the length and breadth of Oz, making journeys between most of her far-flung corners relatively easy.

Nevertheless, there remain a few key missing pieces, in the grand puzzle of a modern, well-connected Australian infrastructure system. This article presents five such missing pieces, that I personally would like to see built in my lifetime. Some of these are already in their early stages of development, while others are pure fantasies that may not even be possible with today's technology and engineering. All of them, however, would provide a new long-distance connection between regions of Australia, where there is presently only an inferior connection in place, or none at all.

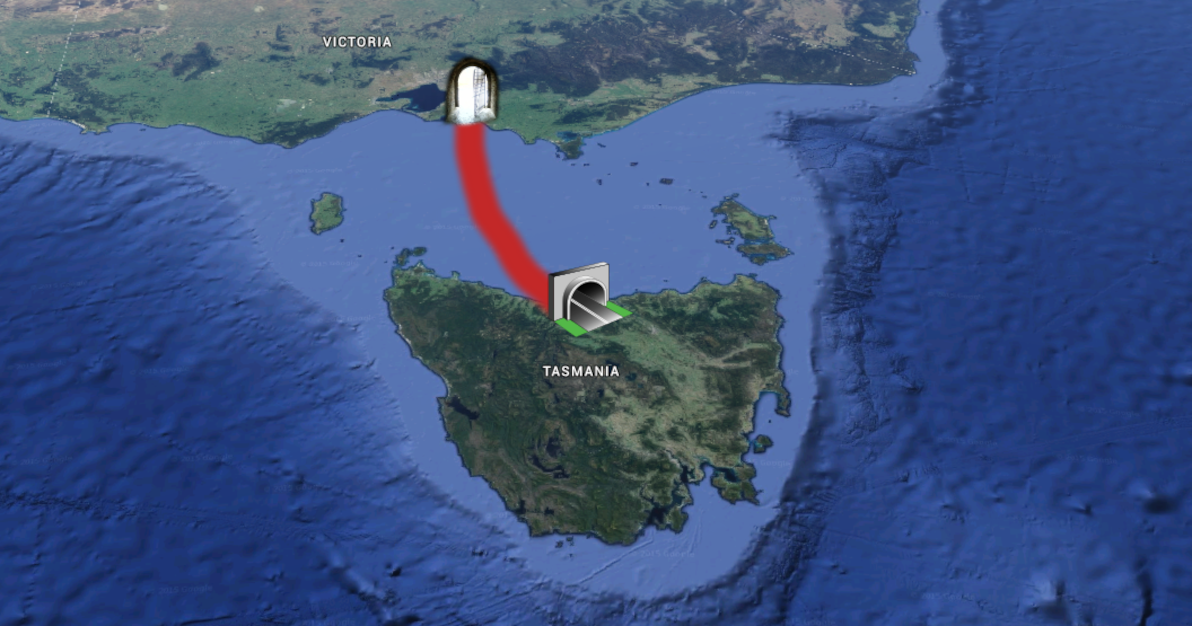

Tunnel to Tasmania

Let me begin with the most nut-brained idea of all: a tunnel from Victoria to Tasmania!

As the sole major region of Australia that's not on the continental landmass, currently the only options for reaching Tasmania are by sea or by air. The idea of a tunnel (or bridge) to Tasmania is not new; it has been sporadically postulated for over a century (although never all that seriously). There's a long and colourful discussion of routes, cost estimates, and geographical hurdles at the Bass Strait Tunnel thread on Railpage. There's even a Facebook page promoting a Tassie Tunnel.

Image sources: Wikimedia Commons: Light on door at the end of tunnel; Wikimedia Commons: Tunnel icon2; satellite imagery courtesy of Google Earth.

Although it would be a highly beneficial piece of infrastructure, that would in the long-term (among other things) provide a welcome boost to Tasmania's (and Australia's) economy, sadly the Tassie Tunnel is probably never going to happen. The world's longest undersea tunnel to date (under the Tsugaru Strait in Japan) spans only 54km. A tunnel under the Bass Strait, directly from Victoria to Tasmania, would be at least 200km long; although if it went via King Island (to the northwest of Tas), it could be done as two tunnels, each one just under 100km. Both the length and the depth of such a tunnel make it beyond the limits of contemporary engineering.

Aside from the engineering hurdle – and of course the monumental cost – it also turns out that the Bass Strait is Australia's main seismic hotspot (just our luck, what with the rest of Australia being seismically dead as a doornail). The area hasn't seen any significant undersea volcanic activity in the past few centuries, but experts warn that it could start letting off steam in the near future. This makes it hardly an ideal area for building a colossal tunnel.

Railway from Mt Isa to Tennant Creek

Great strides have been made in connecting almost all the major population centres of Australia by rail. The first significant long-distance rail link in Oz was the line from Sydney to Melbourne, which was completed in 1883 (although a change-of-gauge was required until 1962). The Indian Pacific (Sydney to Perth), a spectacular trans-continental achievement and the nation's longest train line – not to mention one of the great railways of the world – is the real backbone on the map, and has been operational since 1970. The newest and most long-awaited addition, The Ghan (Adelaide to Darwin), opened for business in 2004.

Image source: Fly With Me.

Today's nation-wide rail network (with regular passenger service) is, therefore, at an impressive all-time high. Every state and territory capital is connected (except for Hobart – a Tassie Tunnel would fix that!), and numerous regional centres are in the mix too. Despite the fact that many of the lines / trains are old and clunky, they continue (often stubbornly) to plod along.

If you look at the map, however, you might notice one particularly glaring gap in the network, particularly now that The Ghan has been established. And that is between Mt Isa in Queensland (the terminus of The Inlander service from Townsville), and Tennant Creek in the Northern Territory (which The Ghan passes through). At the moment, travelling continuously by rail from Townsville to Darwin would involve a colossal horse-shoe journey via Sydney and Adelaide, which only an utter nutter would consider embarking upon. Whereas with the addition of this relatively small (1,000km or so) extra line, the journey would be much shorter, and perfectly feasible. Although still long; there's no silver bullet through the outback.

A railway from Mt Isa to Tennant Creek – even though it would traverse some of the most remote and desolate land in Australia – is not a pipe dream. It's been suggested several times over the past few years. As with the development of the Townsville to Mt Isa railway a century ago, it will need the investment of the mining industry in order to actually happen. Unfortunately, the current economic situation means that mining companies are unlikely to invest in such a project at this time; what's more, The Inlander is a seriously decrepit service (at risk of being decommissioned) on an ageing line, making it somewhat unsuitable for joining up with a more modern line to the west.

Nonetheless, I have high hopes that we will see this railway connection built in the not-too-distant future, when the stars are next aligned.

Highway to Cape York

Australia's northernmost region, the Cape York Peninsula, is also one of the country's last truly wild frontiers. There is now a sealed all-weather highway all the way around the Australian mainland, and there's good or average road access to the key towns in almost all regional areas. Cape York is the only place left in Oz that lacks such roads, and that's also home to a non-trivial population (albeit a small 20,000-ish people, the majority Aborigines, in an area half the size of Victoria). Other areas in Oz with no road access whatsoever, such as south-west Tasmania, and most of the east of Western Australia, are lacking even a trivial population.

The biggest challenge to reliable transport in the Cape is the wet season: between December and April, there's so much rainfall that all the rivers become flooded, making roads everywhere impassable. Aside from that, the Cape also presents other obstacles, such as being seriously infested with crocodiles.

There are two main roads that provide access to the Cape: the Peninsula Developmental Road (PDR) from Lakeland to Weipa, and the Northern Peninsula Road (NPR), from the junction north of Coen on to Bamaga. The PDR is slowly improving, but the majority of it is still unsealed and is closed for much of the wet season. The NPR is worse: little (if any) of the route is sealed, and a ferry is required to cross the Jardine River (approaching the road's northern terminus), even at the height of the dry season.

Image source: Eco Citizen Australia.

A proper Cape York Highway, all the way from Lakeland to The Tip, is in my opinion bound to get built eventually. I've seen mention of a prediction that we should expect it done by 2050; if that estimate can be met, I'd call it a great achievement. To bring the Cape's main roads up to highway standard, they'd need to be sealed all the way, and there would need to be reasonably high bridges over all the rivers. Considering the very extreme weather patterns up that way, the route will never be completely flood-proof (much as the fully-sealed Barkly Highway through the Gulf of Carpentaria, south of the Cape, isn't flood-proof either); but if a journey all the way to The Tip were possible in a 2WD vehicle for most of the year, that would be a grand accomplishment.

High-speed rail on the Eastern seaboard

Of all the proposals being put forward here, this is by far the most well-known and the most oft talked about. Many Australians are in agreement with me, on the fact that a high-speed rail link along the east coast is sorely needed. Sydney to Canberra is generally touted as an appropriate first step, Sydney to Melbourne is acknowledged as the key component, and Sydney to Brisbane is seen as a very important extension.

There's no shortage of commentary out there regarding this idea, so I'll refrain from going into too much detail. In particular, the topic has been flooded with conversation since the fairly recent (2013) government-funded feasibility study (to the tune of AUD$20 million) into the matter.

Sadly, despite all the good news – the glowing recommendations of the government study; the enthusiasm of countless Australians; and some valiant attempts to stave off inertia – Australia has been waiting for high-speed rail an awfully long time, and it's probably going to have to keep on waiting. Because, with the cost of a complete Brisbane-Sydney-Canberra-Melbourne network estimated at around AUD$100 billion, neither the government nor anyone else is in a hurry to cough up the requisite cash.

This is the only proposal in this article about an infrastructure link to complement another one (of the same mode) that already exists. I've tried to focus on links that are needed where currently there is nothing at all. However, I feel that this proposal belongs here, because despite its proud and important history, the ageing eastern seaboard rail network is rapidly becoming an embarrassment to the nation.

Image source: Adam Bandt MP.

The corner of Australia where 90% of the population live deserves (and needs) a train service for the future, not one that belongs in a museum. The east coast interstate trains still run on diesel, as the lines aren't even electrified outside of the greater metropolitan areas. The network's few (remaining) passenger services share the line with numerous freight trains. There is still a plethora of old-fashioned level crossings. And the majority of the route is still single-track, causing regular delays and seriously limiting the line's capacity. And all this on two of the world's busiest air routes, with the road routes also struggling under the load.

Come on, Aussie – let's join the 21st century!

Self-sustaining desert towns

My final idea, some may consider a little kookoo, but I truly believe that it would be of benefit to our great sunburnt country. As should be clear by now, immense swathes of Australia are empty desert. There are many dusty roads and 4WD tracks traversing the country's arid centre, and it's not uncommon for some of the towns along these routes to be 1,000km or more distant from their nearest neighbour. This results in communities (many of them indigenous) that are dangerously isolated from each other and from critical services; it makes for treacherous vehicle journeys, where travellers must bring extra necessities such as petrol and water, just to last the distance; and it means that Australia as a whole suffers from more physical disconnects, robbing contiguity from our otherwise unified land.

Image source: news.com.au.

Good transport networks (road and rail) across the country are one thing, but they're not enough. In my opinion, what we need to do is to string out more desert towns along our outback routes, in order to reduce the distances of no human contact, and of no basic services.

But how to support such towns, when most outback communities are struggling to survive as it is? And how to attract more people to these towns, when nobody wants to live out in the bush? In my opinion, with the help of modern technology and of alternative agricultural methods, it could be made to work.

Towns need a number of resources in order to thrive. First and foremost, they need water. Securing sufficient water in the outback is a challenge, but with comprehensive conservation rules, and modern water reuse systems, having at least enough water for a small population's residential use becomes feasible, even in the driest areas of Australia. They also need electricity, in order to use modern tools and appliances. Fortunately, making outback towns energy self-sufficient is easier than it's ever been before, thanks to recent breakthroughs in solar technology. A number of these new technologies have even been pilot-tested in the outback.

In order to be self-sustaining, towns also need to be able to cultivate their own food in the surrounding area. This is a challenge in most outback areas, where water is scarce and soil conditions are poor. Many remote communities rely on food and other basic necessities being trucked in. However, a number of recent initiatives related to desert greening may help to solve this thorny (as an outback spinifex) problem.

Most promising is the global movement (largely founded and based in Australia) known as permaculture. A permaculture-based approach to desert greening has enjoyed a vivid and well-publicised success on several occasions; most notably, Geoff Lawton's project in the Dead Sea Valley of Jordan about ten years ago. There has been some debate regarding the potential ability of permaculture projects to green the desert in Australia. Personally, I think that the pilot projects to date have been very promising, and that similar projects in Australia would be, at the least, a most worthwhile endeavour. There are also various other projects in Australia that aim to create or nurture green corridors in arid areas.

There are also crazy futuristic plans for metropolis-size desert habitats, although these fail to explain in detail how such habitats could become self-sustaining. And there are some interesting projects in place around the world already, focused on building self-sustaining communities.

As for where to build a new corridor of desert towns, my preference would be to target an area as remote and as spread-out as possible. For example, along the Great Central Road (which is part of the "Outback Highway"). This might be an overly-ambitious route, but it would certainly be one of the most suitable.

And regarding the "tough nut" of how to attract people to come and live in new outback towns – when it's hard enough already just to maintain the precarious existing population levels – I have no easy answer. It has been suggested that, with the growing number of telecommuters in modern industries (such as IT), and with other factors such as the high real estate prices in major cities, people will become increasingly likely to move to the bush, assuming there's adequately good-quality internet access in the respective towns. Personally, as an IT professional who has worked remotely on many occasions, I don't find this to be a convincing enough argument.

I don't think that there's any silver bullet to incentivising a move to new desert towns. "Candy dangling" approaches such as giving away free houses in the towns, equipping buildings with modern sustainable technologies, or even giving cash gifts to early pioneers – these may be effective in getting a critical mass of people out there, but they're unlikely to be sufficient to keep them there in the long-term. Really, such towns would have to develop a local economy and a healthy local business ecosystem in order to maintain their residents; and that would be a struggle for newly-built towns, the same as it's been a struggle for existing outback towns since day one.

In summary

Love 'em or hate 'em, admire 'em or attack 'em, there's my list of five infrastructure projects that I think would be of benefit to Australia. Some are more likely to happen than others; unfortunately, it appears that none of them is going to be fully realised any time soon. Feedback welcome!

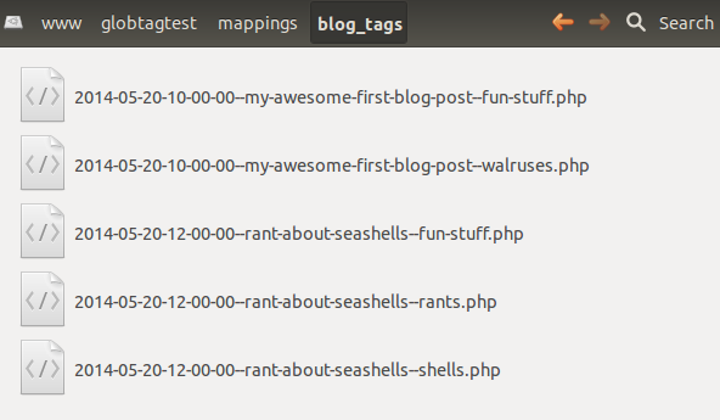

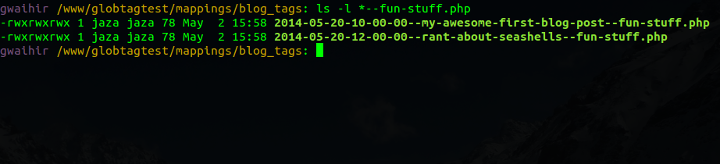

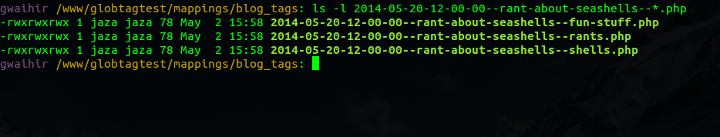

If you're not insane, then yes, that's right! However, for a recent little personal project of mine, I decided to go nuts and experiment. Check it out, this is my "mapping data" store:

And check it out, this is me querying the data store:

And again:

And that's all there is to it. Many-to-many tagging data stored in a list of files, with content item identifiers and tag identifiers embedded in each filename. Querying is by simple directory listing shell commands with wildcards (also known as "globbing").
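To make the idea concrete, here's a minimal sketch of the same approach, written in Python rather than as shell commands. The filenames, the `<page>--<tag>.php` naming pattern used here, and the helper names `pages_by_tag` and `tags_for_page` are all hypothetical, chosen purely for illustration; the store lives in a throwaway temp directory.

```python
import glob
import os
import tempfile

# Hypothetical mapping store: one empty file per (page, tag) pair,
# named "<page identifier>--<tag slug>.php".
base = tempfile.mkdtemp()
mappings = [
    "2007-12-01-first-post--thailand",
    "2007-12-01-first-post--food",
    "2008-01-15-second-post--food",
]
for name in mappings:
    open(os.path.join(base, name + ".php"), "w").close()


def pages_by_tag(slug):
    """List page identifiers carrying the given tag, newest first."""
    suffix = "--" + slug + ".php"
    paths = glob.glob(os.path.join(glob.escape(base), "*" + suffix))
    names = [os.path.basename(p) for p in paths]
    return sorted((n[: -len(suffix)] for n in names), reverse=True)


def tags_for_page(identifier):
    """List tag slugs attached to the given page, alphabetically."""
    prefix = identifier + "--"
    paths = glob.glob(os.path.join(glob.escape(base), prefix + "*.php"))
    names = [os.path.basename(p) for p in paths]
    return sorted(n[len(prefix): -len(".php")] for n in names)


print(pages_by_tag("food"))
print(tags_for_page("2007-12-01-first-post"))
```

Both directions of the many-to-many relationship come down to a single wildcard match: fix the tag and wildcard the page, or fix the page and wildcard the tag.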

Is it user-friendly to add new content? No! Does it allow the rich querying of SQL and friends? No! Is it scalable? No!

But… Is the basic querying it allows enough for my needs? Yes! Is it fast (for a store of up to several thousand records)? Yes! And do I have the luxury of not caring about user-friendliness or scalability in this instance? Yes!

Implementation

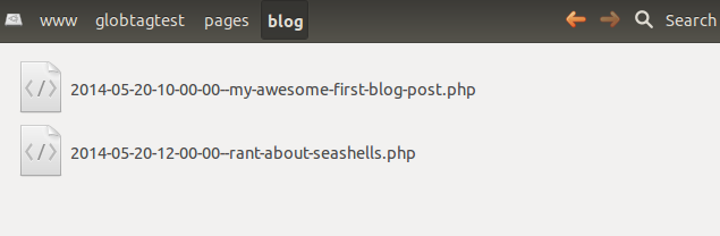

For the project in which I developed this system, I implemented the querying with some simple PHP code. For example, this is my "content item" store:

These are the functions to do some basic querying on all content:

<?php

/**

* Queries for all blog pages.

*

* @return

* List of all blog pages.

*/

function blog_query_all() {

$files = glob(BASE_FILE_PATH . 'pages/blog/*.php');

if (!empty($files)) {

foreach (array_keys($files) as $k) {

$files[$k] = str_replace(BASE_FILE_PATH . 'pages/blog/',

'',

$files[$k]);

}

rsort($files);

}

return $files;

}

/**

* Queries for blog pages with the specified year / month.

*

* @param $year

* Year.

* @param $month

* Month

*

* @return

* List of blog pages with the specified year / month.

*/

function blog_query_byyearmonth($year, $month) {

$files = glob(BASE_FILE_PATH . 'pages/blog/' .

$year . '-' . $month . '-*.php');

if (!empty($files)) {

foreach (array_keys($files) as $k) {

$files[$k] = str_replace(BASE_FILE_PATH . 'pages/blog/',

'',

$files[$k]);

}

}

return $files;

}

/**

* Gets the previous blog page (by date).

*

* @param $full_identifier

* Full identifier of current blog page.

*

* @return

* Full identifier of previous blog page.

*/

function blog_get_prev($full_identifier) {

$files = blog_query_all();

$curr_index = array_search($full_identifier . '.php', $files);

if ($curr_index !== FALSE && $curr_index < count($files)-1) {

return str_replace('.php', '', $files[$curr_index+1]);

}

return NULL;

}

/**

* Gets the next blog page (by date).

*

* @param $full_identifier

* Full identifier of current blog page.

*

* @return

* Full identifier of next blog page.

*/

function blog_get_next($full_identifier) {

$files = blog_query_all();

$curr_index = array_search($full_identifier . '.php', $files);

if ($curr_index !== FALSE && $curr_index !== 0) {

return str_replace('.php', '', $files[$curr_index-1]);

}

return NULL;

}

And these are the functions to query content by tag:

<?php

/**

* Queries for blog pages with the specified tag.

*

* @param $slug

* Tag slug.

*

* @return

* List of blog pages with the specified tag.

*/

function blog_query_bytag($slug) {

$files = glob(BASE_FILE_PATH .

'mappings/blog_tags/*--' . $slug . '.php');

if (!empty($files)) {

foreach (array_keys($files) as $k) {

$files[$k] = str_replace(BASE_FILE_PATH . 'mappings/blog_tags/',

'',

$files[$k]);

}

rsort($files);

}

return $files;

}

/**

* Gets a blog page's tags based on its full identifier.

*

* @param $full_identifier

* Blog page's full identifier.

*

* @return

* Tags.

*/

function blog_get_tags($full_identifier) {

$files = glob(BASE_FILE_PATH .

'mappings/blog_tags/' . $full_identifier . '--*.php');

$ret = array();

if (!empty($files)) {

foreach ($files as $f) {

$ret[] = str_replace(BASE_FILE_PATH . 'mappings/blog_tags/' .

$full_identifier . '--',

'',

str_replace('.php', '', $f));

}

}

return $ret;

}

That's basically all the "querying" that this blog app needs.

In summary

What I've shared here is part of the solution that I recently built when I migrated Jaza's World Trip (my travel blog from 2007-2008) away from (an out-dated version of) Drupal, and into a new database-free custom PHP thingamajig. (I'm considering writing a separate article about what else I developed, and I'm also considering cleaning it up and releasing it as a boilerplate PHP project template on GitHub… although not sure if it's worth the effort, we shall see).

This is an old blog site that I wanted to "retire", i.e. to migrate off a CMS platform, and into more-or-less static files. So, the filesystem-based data store that I developed in this case was a good match, because:

- No new content will be added to the site in the future

- Migrating the site to a different server (in the hypothetical future) would consist of simply copying all the files, and the new server would only need to support PHP (and PHP is the most commonly-supported web server technology in the world)

- If the data store performs well with the current volume of content, that's great; I don't care if it doesn't scale to millions of records (due to e.g. files-per-directory OS limits being reached, glob performance worsening), because it will never have that many

Most sites that I develop are new, and they don't fit this use case at all. They need a content management admin interface. They need to scale. And they usually need various other features (e.g. user login) that also commonly rely on a traditional database backend. However, for this somewhat unique use-case, building a database-free tagging data store was a fun experiment!


Image source: Sticky Comics.

Being now acquainted with my new toy, I believe I can safely say that my reluctance was not (entirely) based on my being a "phone dinosaur", an accusation that some have levelled at me. Apart from the fact that they offer "a tonne of features that I don't need", I'd assert that the current state-of-the-art in smartphones suffers some serious usability, accessibility, and convenience issues. In short: these babies ain't so smart as their purty name suggests. These babies still have a lotta growin' up to do.

Image source: sondasmcschatter.

Touchy

Mobile phones with few buttons are all the rage these days. This is principally thanks to the demi-g-ds at Apple, who decree that we mere mortals should embrace all that is white with chrome bezel.

Apple has been waging war on the button for some time. For decades, the Mac mouse has been a single-button affair, in contrast to the two- or three-button standard PC rodent. Since the dawn of the iEra, a single (wheel-like) button has predominated all iShtuff. (For a bit of fun, watch how this single-button phenomenon reached its unholy zenith with the unveiling of the MacBook Wheel). And, most recently, since Apple's invention of the i(AmTheOneTrue)Phone (of which all other smartphones are but a paltry and pathetic imitation attempted by mere mortals), smartphones have been almost by definition "big on touch-screen, low on touch-button".

Image source: Crazy Art Ideas.

I'm not happy about this. I like buttons. You can feel buttons. There is physical space between each button. Buttons physically move when you press them.

You can't feel the icons on a touch screen. A touch screen is one uninterrupted physical surface. And a touch screen doesn't provide any tactile response when pressed.

There is active ongoing research in this field. Just this year, the world's first fully-functional bumpy touchscreen prototype was showcased, by California-based Tactus. However, so far no commercial smartphones have been developed using this technology. Hopefully, in another few years' time, the situation will be different; but for the current state-of-the-art smartphones, the lack of tactile feedback in the touch screens is a serious usability issue.

Related to this, is the touch-screen keyboard that current-generation smartphones provide. Seriously, it's a shocker. I wouldn't say I have particularly fat fingers, nor would I call myself a luddite (am I not a web developer?). Nevertheless, touch-screen keyboards frustrate the hell out of me. And, as I understand it, I'm not alone in my anguish. I'm far too often hitting a letter adjacent to the one I poked. Apart from the lack of extruding keys / tactile feedback, each letter is also unmanageably small. It takes me 20 minutes to write an e-mail on my smartphone, that I can write in about 4 minutes on my laptop.

Touch screens have other issues, too. Manufacturers are struggling to get touch sensitivity level spot-on: from my personal experience, my Galaxy S3 is far too hyper-sensitive, even the lightest brush of a finger sets it off; whereas my fiancée's iPhone 4 is somewhat under-sensitive, it almost never responds to my touch until I start poking it hard (although maybe it just senses my anti-Apple vibes and says STFU). The fragility of touch screens is also of serious concern – as a friend of mine recently joked: "these new phones are delicate little princesses". Fortunately, I haven't had any shattered or broken touch-screen incidents as yet (only a small superficial scratch so far); but I've heard plenty of stories.

Before my recent switch to Samsung, I was a Nokia boy for almost 10 years – about half that time (the recent half) with a 6300; and the other half (the really good ol' days) with a 3100. Both of those phones were "bricks", as flip-phones never attracted me. Both of them were treated like cr@p and endured everything (especially the ol' 3100, which was a wonderfully tough little bugger). Both had a regular keypad (the 3100's keypad boasted particularly rubbery, well-spaced buttons), with which I could write text messages quickly and proficiently. And both sported more button real-estate than screen real-estate. All good qualities that are naught to be found in the current crop of touch-monsters.

Great, but can you make calls with it?

After the general touch-screen issues, this would have to be my next biggest criticism of smartphones. Big on smart, low on phone.

Image source: Ars Technica.

Smartphones let you check your email, update your Facebook status, post your phone-camera-taken photos on Instagram, listen to music, watch movies, read books, find your nearest wood-fired pizza joint that's open on Mondays, and much more. They also, apparently, let you make and receive phone calls.

It's not particularly hard to make calls with a smartphone. But, then again, it's not as easy as it was with "dumb phones", nor is it as easy as it should be. On both of the smartphones that I'm now most familiar with (Galaxy S3 and iPhone 4), calling a contact requires more than the minimum two clicks ("open contacts", and "press call"). On the S3, this can be done with a click and a "swipe right", which (although I've now gotten used to it) felt really unintuitive to begin with. Plus, there's no physical "call" button, only a touch-screen "call" icon (making it too easy to accidentally message / email / Facebook someone when you meant to call them, and vice-versa).

Receiving calls is more problematic, and caused me significant frustration to begin with. Numerous times, I've rejected a call when I meant to answer it (by either touching the wrong icon, or by the screen getting brushed as I extract the phone from my pocket). And really, Samsung, what crazy-a$$ Gangnam-style substances were you guys high on, when you decided that "hold and swipe in one direction to answer, hold and swipe in the other direction to reject" was somehow a good idea? The phone is ringing, I have about five seconds, so please don't make me think!

In my opinion, there REALLY should be a physical "answer / call" button on all phones, period. And, on a related note, rejecting calls and hanging up (which are tasks just as critical as are calling / answering) are difficulty-fraught too; and there also REALLY should be a physical "hang up" button on all phones, period. I know that various smartphones have had, and continue to have, these two physical buttons; however, bafflingly, neither the iPhone nor the Galaxy include them. And once again, Samsung, one must wonder how many purple unicorns were galloping over the cubicles, when you decided that "let's turn off the screen when you want to hang up, and oh, if by sheer providence the screen is on when you want to hang up, the hang-up button could be hidden in the slid-up notification bar" was what actual carbon-based human lifeforms wanted in a phone?

Hot and shagged out

There are two other critical problems that I've noticed with both the Galaxy and the iPhone (the two smartphones that are currently considered the crème de la crème of the market, I should emphasise).

Firstly, they both start getting quite hot, after just a few minutes of any intense activity (making a call, going online, playing games, etc). Now, I understand that smartphones are full-fledged albeit pocket-sized computers (for example, the Galaxy S3 has a quad-core processor and 1-2GB of RAM). However, regular computers tend to sit on tables or floors. Holding a hot device in your hands, or keeping one in your pocket, is actually very uncomfortable. Not to mention a safety hazard.

Secondly, there's the battery-life problem. Smartphones may let you do everything under the sun, but they don't let you do it all day without a recharge. It seems pretty clear to me that while smartphones are a massive advancement compared to traditional mobiles, the battery technology hasn't advanced anywhere near on par. As many others have reported, even with relatively light use, you're lucky to last a full day without needing to plug your baby in for some intravenous AC TLC.

In summary

I've had a good ol' rant, about the main annoyances I've encountered during my recent initiation into the world of smartphones. I've focused mainly on the technical issues that have been bugging me. Various online commentaries have discussed other aspects of smartphones: for example, the oft-unreasonable costs of owning one; and the social and psychological concerns, such as aggression / meanness, impatience / chronic boredom, and endemic antisocial behaviour (that last article also mentions another concern that I've written about before, how GPS is eroding navigational ability). While in general I agree with these commentaries, personally I don't feel they're such critical issues – or, to be more specific, I guess I feel that these issues already existed and already did their damage in the "traditional mobile phone" era, and that smartphones haven't worsened things noticeably. So, I won't be discussing those themes in this article.

Anyway, despite my scathing criticism, the fact is that I'm actually very impressed with all the cool things that smartphones can do; and yes, although I was dragged kicking and screaming, I have also succumbed and joined the "dark side" myself, and I must admit that I've already made quite thorough use of many of my smartphone's features. Also, it must be remembered that – although many people already claim that they "can hardly remember what life was like before smartphones" – this is a technology that's still in its infancy, and it's only fair and reasonable that there are still numerous (technical and other) kinks yet to be ironed out.

A denormalised query result is quite adequate, if you plan to process the result set further – as is very often the case, e.g. when the result set is subsequently prepared for output to HTML / XML, or when the result set is used to populate data structures (objects / arrays / dictionaries / etc) in programming memory. But what if you want to export the result set directly to a flat format, such as a single CSV file? In this case, denormalised form is not ideal. It would be much better, if we could aggregate all that many-to-many data into a single result set containing no duplicate data, and if we could do that within a single SQL query.

This article presents an example of how to write such a query in MySQL – that is, a query that's able to aggregate complex many-to-many relationships, into a result set that can be exported directly to a single CSV file, with no additional processing necessary.

Example: a lil' Bio database

For this article, I've whipped up a simple little schema for a biographical database. The database contains, first and foremost, people. Each person has, as his/her core data: a person ID; a first name; a last name; and an e-mail address. Each person also optionally has some additional bio data, including: bio text; date of birth; and gender. Additionally, each person may have zero or more: profile pictures (with each picture consisting of a filepath, nothing else); web links (with each link consisting of a title and a URL); and tags (with each tag having a name, existing in a separate tags table, and being linked to people via a joining table). For the purposes of the example, we don't need anything more complex than that.

Here's the SQL to create the example schema:

CREATE TABLE person (

pid int(10) unsigned NOT NULL AUTO_INCREMENT,

firstname varchar(255) NOT NULL,

lastname varchar(255) NOT NULL,

email varchar(255) NOT NULL,

PRIMARY KEY (pid),

UNIQUE KEY email (email),

UNIQUE KEY firstname_lastname (firstname(100), lastname(100))

) ENGINE=MyISAM DEFAULT CHARSET=utf8 AUTO_INCREMENT=1;

CREATE TABLE tag (

tid int(10) unsigned NOT NULL AUTO_INCREMENT,

tagname varchar(255) NOT NULL,

PRIMARY KEY (tid),

UNIQUE KEY tagname (tagname)

) ENGINE=MyISAM DEFAULT CHARSET=utf8 AUTO_INCREMENT=1;

CREATE TABLE person_bio (

pid int(10) unsigned NOT NULL,

bio text NOT NULL,

birthdate varchar(255) NOT NULL DEFAULT '',

gender varchar(255) NOT NULL DEFAULT '',

PRIMARY KEY (pid),

FULLTEXT KEY bio (bio)

) ENGINE=MyISAM DEFAULT CHARSET=utf8;

CREATE TABLE person_pic (

pid int(10) unsigned NOT NULL,

pic_filepath varchar(255) NOT NULL,

PRIMARY KEY (pid, pic_filepath)

) ENGINE=MyISAM DEFAULT CHARSET=utf8;

CREATE TABLE person_link (

pid int(10) unsigned NOT NULL,

link_title varchar(255) NOT NULL DEFAULT '',

link_url varchar(255) NOT NULL DEFAULT '',

PRIMARY KEY (pid, link_url),

KEY link_title (link_title)

) ENGINE=MyISAM DEFAULT CHARSET=utf8;

CREATE TABLE person_tag (

pid int(10) unsigned NOT NULL,

tid int(10) unsigned NOT NULL,

PRIMARY KEY (pid, tid)

) ENGINE=MyISAM DEFAULT CHARSET=utf8;

And here's the SQL to insert some sample data into the schema:

INSERT INTO person (firstname, lastname, email) VALUES ('Pete', 'Wilson', 'pete@wilson.com');

INSERT INTO person (firstname, lastname, email) VALUES ('Sarah', 'Smith', 'sarah@smith.com');

INSERT INTO person (firstname, lastname, email) VALUES ('Jane', 'Burke', 'jane@burke.com');

INSERT INTO tag (tagname) VALUES ('awesome');

INSERT INTO tag (tagname) VALUES ('fantabulous');

INSERT INTO tag (tagname) VALUES ('sensational');

INSERT INTO tag (tagname) VALUES ('mind-boggling');

INSERT INTO tag (tagname) VALUES ('dazzling');

INSERT INTO tag (tagname) VALUES ('terrific');

INSERT INTO person_bio (pid, bio, birthdate, gender) VALUES (1, 'Great dude, loves elephants and tricycles, is really into coriander.', '1965-04-24', 'male');

INSERT INTO person_bio (pid, bio, birthdate, gender) VALUES (2, 'Eccentric and eclectic collector of phoenix wings. Winner of the 2003 International Small Elbows Award.', '1982-07-20', 'female');

INSERT INTO person_bio (pid, bio, birthdate, gender) VALUES (3, 'Has purply-grey eyes. Prefers to only go out on Wednesdays.', '1990-11-06', 'female');

INSERT INTO person_pic (pid, pic_filepath) VALUES (1, 'files/person_pic/pete1.jpg');

INSERT INTO person_pic (pid, pic_filepath) VALUES (1, 'files/person_pic/pete2.jpg');

INSERT INTO person_pic (pid, pic_filepath) VALUES (1, 'files/person_pic/pete3.jpg');

INSERT INTO person_pic (pid, pic_filepath) VALUES (3, 'files/person_pic/jane_on_wednesday.jpg');

INSERT INTO person_link (pid, link_title, link_url) VALUES (2, 'The Great Blog of Sarah', 'http://www.omgphoenixwingsaresocool.com/');

INSERT INTO person_link (pid, link_title, link_url) VALUES (3, 'Catch Jane on Blablablabook', 'http://www.blablablabook.com/janepurplygrey');

INSERT INTO person_link (pid, link_title, link_url) VALUES (3, 'Jane ranting about Thursdays', 'http://www.janepurplygrey.com/thursdaysarelame/');

INSERT INTO person_tag (pid, tid) VALUES (1, 3);

INSERT INTO person_tag (pid, tid) VALUES (1, 4);

INSERT INTO person_tag (pid, tid) VALUES (1, 5);

INSERT INTO person_tag (pid, tid) VALUES (1, 6);

INSERT INTO person_tag (pid, tid) VALUES (2, 2);

Querying for direct CSV export

If we were building, for example, a simple web app to output a list of all the people in this database (along with all their biographical data), querying this database would be quite straightforward. Most likely, our first step would be to query the one-to-one data: i.e. query the main 'person' table, join on the 'bio' table, and loop through the results (in a server-side language, such as PHP). The easiest way to get at the rest of the data, in such a case, would be to then query each of the many-to-many relationships (i.e. user's pictures; user's links; user's tags) in separate SQL statements, and to execute each of those queries once for each user being processed.

In that scenario, we'd be writing four different SQL queries, and we'd be executing SQL numerous times: we'd execute the main query once, and we'd execute each of the three secondary queries, once for each user in the database. So, with the sample data provided here, we'd be executing SQL 1 + (3 x 3) = 10 times.
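To make the arithmetic concrete, here's a minimal sketch of that query-per-person pattern. It assumes Python's built-in sqlite3 module and an in-memory database standing in for MySQL and PHP, and it uses a trimmed-down schema; the run() helper and the query_count counter are hypothetical names, added purely to count executions.

```python
import sqlite3

# Assumption: an in-memory SQLite database stands in for the MySQL schema.
conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE person (pid INTEGER PRIMARY KEY, firstname TEXT);
    CREATE TABLE person_pic (pid INTEGER, pic_filepath TEXT);
    CREATE TABLE person_link (pid INTEGER, link_title TEXT);
    CREATE TABLE person_tag (pid INTEGER, tid INTEGER);
    INSERT INTO person (firstname) VALUES ('Pete'), ('Sarah'), ('Jane');
""")

query_count = 0


def run(sql, params=()):
    """Execute one SQL statement and count it (hypothetical helper)."""
    global query_count
    query_count += 1
    return conn.execute(sql, params).fetchall()


# One main query...
people = run("SELECT pid, firstname FROM person")

# ...then three secondary queries for every person returned.
for pid, firstname in people:
    pics = run("SELECT pic_filepath FROM person_pic WHERE pid = ?", (pid,))
    links = run("SELECT link_title FROM person_link WHERE pid = ?", (pid,))
    tags = run("SELECT tid FROM person_tag WHERE pid = ?", (pid,))

print(query_count)  # 1 + (3 x 3)
```

The count grows linearly with the number of people, which is what makes this approach unattractive for a large export.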

Alternatively, we could write a single query which joins together all of the three many-to-many relationships in one go, and our web app could then just loop through a single result set. However, this result set would potentially contain a lot of duplicate data, as well as a lot of NULL data. So, the web app's server-side code would require extra logic, in order to deal with this messy result set effectively.
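As a rough illustration of how messy that single joined result set gets, the sketch below loads the sample data into an in-memory SQLite database (an assumption, standing in for MySQL) and counts the rows returned per person. The schema is trimmed to the columns needed, and the rows_per_person name is just for illustration.

```python
import sqlite3
from collections import Counter

# Assumption: in-memory SQLite with a trimmed-down copy of the sample data.
conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE person (pid INTEGER PRIMARY KEY, firstname TEXT);
    CREATE TABLE person_pic (pid INTEGER, pic_filepath TEXT);
    CREATE TABLE person_link (pid INTEGER, link_title TEXT);
    CREATE TABLE person_tag (pid INTEGER, tid INTEGER);
    INSERT INTO person (pid, firstname) VALUES
        (1, 'Pete'), (2, 'Sarah'), (3, 'Jane');
    INSERT INTO person_pic VALUES
        (1, 'pete1.jpg'), (1, 'pete2.jpg'), (1, 'pete3.jpg'),
        (3, 'jane_on_wednesday.jpg');
    INSERT INTO person_link VALUES
        (2, 'The Great Blog of Sarah'),
        (3, 'Catch Jane on Blablablabook'),
        (3, 'Jane ranting about Thursdays');
    INSERT INTO person_tag VALUES (1, 3), (1, 4), (1, 5), (1, 6), (2, 2);
""")

# Join all three relationships at once, with no aggregation.
rows = conn.execute("""
    SELECT p.firstname, pp.pic_filepath, pl.link_title, pt.tid
    FROM person p
    LEFT JOIN person_pic pp ON p.pid = pp.pid
    LEFT JOIN person_link pl ON p.pid = pl.pid
    LEFT JOIN person_tag pt ON p.pid = pt.pid
""").fetchall()

# Each person's rows multiply: (pics or 1) x (links or 1) x (tags or 1).
rows_per_person = Counter(firstname for firstname, *_ in rows)
print(rows_per_person)
```

Pete alone comes back as 3 pics x 4 tags = 12 rows, each with a NULL link, so the application code would have to de-duplicate every column by hand.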

In our case, neither of the above solutions is adequate. We can't afford to write four separate queries, and to perform 10 query executions. We don't want a single result set that contains duplicate data and/or excessive NULL data. We want a single query, that produces a single result set, containing one person per row, and with all the many-to-many data for each person aggregated into that person's single row.

Here's the magic SQL that can make our miracle happen:

SELECT person_base.pid,

person_base.firstname,

person_base.lastname,

person_base.email,

IFNULL(person_base.bio, '') AS bio,

IFNULL(person_base.birthdate, '') AS birthdate,

IFNULL(person_base.gender, '') AS gender,

IFNULL(pic_join.val, '') AS pics,

IFNULL(link_join.val, '') AS links,

IFNULL(tag_join.val, '') AS tags

FROM (

SELECT p.pid,

p.firstname,

p.lastname,

p.email,

IFNULL(pb.bio, '') AS bio,

IFNULL(pb.birthdate, '') AS birthdate,

IFNULL(pb.gender, '') AS gender

FROM person p

LEFT JOIN person_bio pb

ON p.pid = pb.pid

) AS person_base

LEFT JOIN (

SELECT join_tbl.pid,

IFNULL(

GROUP_CONCAT(

DISTINCT CAST(join_tbl.pic_filepath AS CHAR)

SEPARATOR ';;'

),

''

) AS val

FROM person_pic join_tbl

GROUP BY join_tbl.pid

) AS pic_join

ON person_base.pid = pic_join.pid

LEFT JOIN (

SELECT join_tbl.pid,

IFNULL(

GROUP_CONCAT(

DISTINCT CONCAT(

CAST(join_tbl.link_title AS CHAR),

'::',

CAST(join_tbl.link_url AS CHAR)

)

SEPARATOR ';;'

),

''

) AS val

FROM person_link join_tbl

GROUP BY join_tbl.pid

) AS link_join

ON person_base.pid = link_join.pid

LEFT JOIN (

SELECT join_tbl.pid,

IFNULL(

GROUP_CONCAT(

DISTINCT CAST(t.tagname AS CHAR)

SEPARATOR ';;'

),

''

) AS val

FROM person_tag join_tbl

LEFT JOIN tag t

ON join_tbl.tid = t.tid

GROUP BY join_tbl.pid

) AS tag_join

ON person_base.pid = tag_join.pid

ORDER BY lastname ASC,

firstname ASC;

If you run this in a MySQL admin tool that supports exporting query results directly to CSV (such as phpMyAdmin), then there's no more fancy work needed on your part. Just click 'Export -> CSV', and you'll have your results looking like this:

pid,firstname,lastname,email,bio,birthdate,gender,pics,links,tags

3,Jane,Burke,jane@burke.com,Has purply-grey eyes. Prefers to only go out on Wednesdays.,1990-11-06,female,files/person_pic/jane_on_wednesday.jpg,Catch Jane on Blablablabook::http://www.blablablabook.com/janepurplygrey;;Jane ranting about Thursdays::http://www.janepurplygrey.com/thursdaysarelame/,

2,Sarah,Smith,sarah@smith.com,Eccentric and eclectic collector of phoenix wings. Winner of the 2003 International Small Elbows Award.,1982-07-20,female,,The Great Blog of Sarah::http://www.omgphoenixwingsaresocool.com/,fantabulous

1,Pete,Wilson,pete@wilson.com,Great dude, loves elephants and tricycles, is really into coriander.,1965-04-24,male,files/person_pic/pete1.jpg;;files/person_pic/pete2.jpg;;files/person_pic/pete3.jpg,,sensational;;mind-boggling;;dazzling;;terrific

The query explained

The most important feature of this query is that it takes advantage of MySQL's support for subqueries. What we're actually doing is performing four separate queries: one query on the main person table (which joins to the person_bio table); and one on each of the three many-to-many elements of a person's bio. We're then joining these four queries, and selecting data from all of their result sets, in the parent query.

The magic function in this query is MySQL's GROUP_CONCAT() function. This allows us to join together the values of a particular field across a group of rows, using a delimiter string, much like the join() array-to-string function in many programming languages (e.g. PHP's implode() function). In this example, I've used two semicolons (;;) as the delimiter string.
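To see the parallel with join(), here's a small sketch that does the same flattening twice: once in SQL, and once in application code. It assumes an in-memory SQLite database rather than MySQL; SQLite spells the function group_concat(value, separator) instead of using MySQL's SEPARATOR keyword, and it makes no promise about the order of the concatenated values.

```python
import sqlite3

# Assumption: in-memory SQLite standing in for MySQL.
conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE person_pic (pid INTEGER, pic_filepath TEXT);
    INSERT INTO person_pic VALUES
        (1, 'files/person_pic/pete1.jpg'),
        (1, 'files/person_pic/pete2.jpg'),
        (1, 'files/person_pic/pete3.jpg'),
        (3, 'files/person_pic/jane_on_wednesday.jpg');
""")

# Let the database flatten each person's pics into one delimited string.
flattened = dict(conn.execute("""
    SELECT pid, group_concat(pic_filepath, ';;')
    FROM person_pic
    GROUP BY pid
""").fetchall())

# The equivalent done by hand: fetch the rows, then join() them.
paths = [row[0] for row in conn.execute(
    "SELECT pic_filepath FROM person_pic WHERE pid = 1")]
joined_in_python = ";;".join(paths)

print(flattened[1])
print(joined_in_python)
```

Both strings contain the same three paths separated by ;; and the difference is only in where the work happens.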

In the case of person_link in this example, each row of this data has two fields ('link title' and 'link URL'); so, I've concatenated the two fields together (separated by a double-colon (::) string), before letting GROUP_CONCAT() work its wonders.
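Whoever consumes the CSV will eventually need to unpack these cells again. Here's a minimal sketch of doing that in Python; parse_links() is a hypothetical helper, and it assumes that neither delimiter ever appears inside a link title (a URL's single colon in "http://" is harmless, since we only split on the first double-colon).

```python
def parse_links(cell):
    """Split a flattened 'links' cell into (title, url) pairs.

    Hypothetical helper: assumes ';;' separates links and the first '::'
    in each link separates the title from the URL.
    """
    if not cell:
        return []  # a person with no links gets an empty cell
    links = []
    for item in cell.split(";;"):
        title, url = item.split("::", 1)
        links.append((title, url))
    return links


cell = (
    "Catch Jane on Blablablabook::http://www.blablablabook.com/janepurplygrey"
    ";;Jane ranting about Thursdays::http://www.janepurplygrey.com/thursdaysarelame/"
)
links = parse_links(cell)
for title, url in links:
    print(title, "->", url)
```

If your real data can contain the delimiter strings, pick different ones in the query, as nothing here escapes them.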

The case of person_tag is also interesting, as it demonstrates performing an additional join within the many-to-many subquery, and returning data from that joined table (i.e. the tag name) as the result value. So, all up, each of the many-to-many relationships in this example is a slightly different scenario: person_pic is the basic case of a single field within the many-to-many data; person_link is the case of more than one field within the many-to-many data; and person_tag is the case of an additional one-to-many join, on top of the many-to-many join.

Final remarks

Note that although this query depends on several MySQL-specific features, most of those features are available in a fairly equivalent form, in most other major database systems. Subqueries vary quite little between the DBMSes that support them. And it's possible to achieve GROUP_CONCAT() functionality in PostgreSQL, in Oracle, and even in SQLite.

It should also be noted that it would be possible to achieve the same result (i.e. the same end CSV output), using 10 SQL query executions and a whole lot of PHP (or other) glue code. However, taking that route would involve more code (spread over four queries and numerous lines of procedural glue code), and it would most likely perform worse (although I make no guarantees as to the performance of my example query, as I haven't benchmarked it with particularly large data sets).

This querying trick was originally written in order to export data from a Drupal MySQL database, to a flat CSV file. The many-to-many relationships were referring to field tables, as defined by Drupal's Field API. I made the variable names within the subqueries as generic as possible (e.g. join_tbl, val), because I needed to copy the subqueries numerous times (for each of the numerous field data tables I was dealing with), and I wanted to make as few changes as possible on each copy.

The trick is particularly well-suited to Drupal Field API data (known in Drupal 6 and earlier as 'CCK data'). However, I realised that it could come in useful with any database schema where a "flattening" of many-to-many fields is needed, in order to perform a CSV export with a single query. Let me know if you end up adopting this trick for schemas of your own.

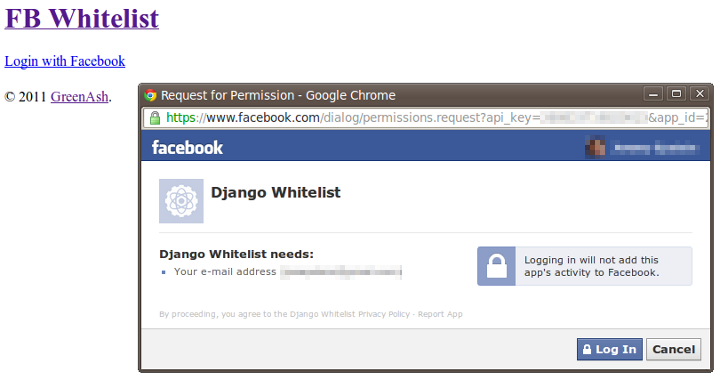

The common workflow for Facebook user integration is: user is redirected to the Facebook login page (or is shown this page in a popup); user enters credentials; user is asked to authorise the sharing of Facebook account data with the non-Facebook source; a local account is automatically created for the user on the non-Facebook site; user is redirected to, and is automatically logged in to, the non-Facebook site. Also quite common is for the user's Facebook profile picture to be queried, and to be shown as the user's avatar on the non-Facebook site.

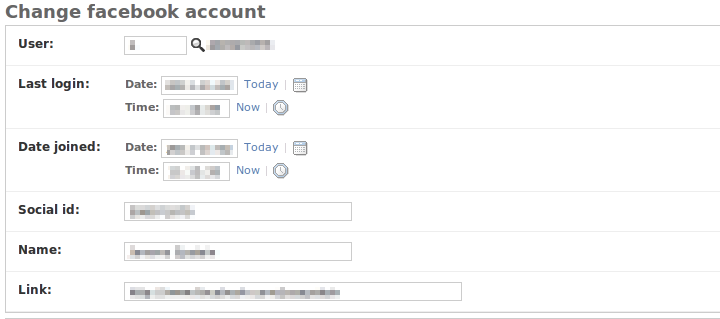

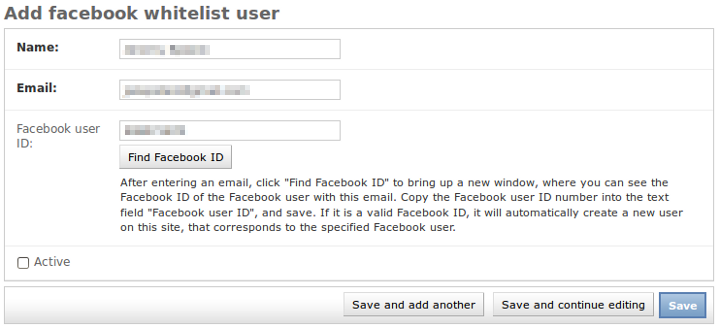

This article demonstrates how to achieve this common workflow in Django, with some added sugary sweetness: maintaining a whitelist of Facebook user IDs in your local database, and only authenticating and auto-registering users who exist on this whitelist.

Install dependencies

I'm assuming that you've already got an environment set up that's equipped for Django development, i.e. you've already installed Python (my examples here are tested on Python 2.6 and 2.7), a database engine (preferably SQLite on your local environment), pip (recommended), and virtualenv (recommended). If you want to implement these examples fully, then as well as a dev environment with these basics set up, you'll also need a server to which you can deploy a Django site, and on which you can set up a proper public domain or subdomain DNS (because the Facebook API refuses to talk to, or redirect back to, your localhost).

You'll also need a Facebook account, with which you will be registering a new "Facebook app". We won't actually be developing a Facebook app in this article (at least, not in the usual sense, i.e. we won't be deploying anything to facebook.com); we just need an app key in order to talk to the Facebook API.

Here are the Python dependencies for our Django project. I've copy-pasted this straight out of my requirements.txt file, which I install on a virtualenv using pip install -E . -r requirements.txt (I recommend you do the same):

Django==1.3.0

-e git+http://github.com/Jaza/django-allauth.git#egg=django-allauth

-e git+http://github.com/facebook/python-sdk.git#egg=facebook-python-sdk

-e git+http://github.com/ericflo/django-avatar.git#egg=django-avatar

The first requirement, Django itself, is pretty self-explanatory. The next one, django-allauth, is the foundation upon which this demonstration is built. This app provides authentication and account management services for Facebook (plus Twitter and OAuth currently supported), as well as auto-registration, and profile pic to avatar auto-copying. The version we're using here is my GitHub fork of the main project, which I've hacked a little bit in order to integrate with our whitelisting functionality.

The Facebook Python SDK is the base integration library provided by the Facebook team, and allauth depends on it for certain bits of functionality. Plus, we've installed django-avatar so that we get local user profile images.

Once you've got those dependencies installed, let's get a new Django project set up with the standard command:

django-admin.py startproject myproject

This will get the Django foundations installed for you. The basic configuration of the Django settings file, I leave up to you. If you have some experience already with Django (and if you've got this far, then I assume that you do), you no doubt have a standard settings template already in your toolkit (or at least a standard set of settings tweaks), so feel free to use it. I'll be going over the settings you'll need specifically for this app, in just a moment.

Fire up ye ol' runserver, open your browser at http://localhost:8000/, and confirm that the "It worked!" page appears for you. At this point, you might also like to enable the Django admin (add 'django.contrib.admin' to INSTALLED_APPS, un-comment the admin lines in urls.py, and run syncdb; then confirm that you can access the admin). And that's the basics set up!

Register the Facebook app

Now, we're going to jump over to the Facebook side of the setup, in order to register our site as a Facebook app, and to then receive our Facebook app credentials. To get started, go to the Apps section of the Facebook Developers site. You'll probably be prompted to log in with your Facebook account, so go ahead and do that (if asked).

On this page, click the button labelled "Create New App". In the form that pops up, in the "App Display Name" field, enter a unique name for your app (e.g. the name of the site you're using this on — for the example app that I registered, I used the name "FB Whitelist"). Then, tick "I Agree" and click "Continue".